Video Privacy Protection Act: What’s Next After Sixth Circuit Creates Split

The Video Privacy Protection Act (VPPA) is a federal law aimed at prohibiting the unauthorized disclosure of a person’s video viewing history. Although the VPPA was enacted in 1988 to stop businesses like Blockbuster from disclosing an individual’s video rental history, the explosion of the internet in the decades since has greatly expanded its potential reach, giving rise to countless lawsuits targeting businesses’ websites. One such case involved the alleged disclosure of the plaintiff’s video viewing history through the use of Meta’s data-tracking Pixel. The United States Court of Appeals for the Sixth Circuit recently decided that case in a decision that narrows the reach of the VPPA.

In a published opinion, the Sixth Circuit addressed who qualifies as a “consumer” (and is thus able to bring a claim) under the VPPA. The VPPA defines “consumer” to mean “any renter, purchaser, or subscriber of goods or services from a video tape service provider.” Citing longstanding canons of statutory construction, the Sixth Circuit reasoned that, read in the context of the surrounding text, the phrase “goods or services” is limited to audiovisual goods and services. The plaintiff subscribed to 247Sports.com’s newsletter, which contained links to videos that anyone could access on the website. The plaintiff failed to allege that the newsletter itself was audiovisual material and therefore was not a “consumer” protected under the VPPA.

Notably, the Sixth Circuit’s decision is contrary to conclusions previously reached by other federal courts of appeals, specifically the Second and Seventh Circuits. Those courts endorsed a broader interpretation, treating a subscriber to any of a provider’s goods or services as a “consumer” under the VPPA, regardless of whether the subscription was specifically for audiovisual materials. By departing from this trend, the Sixth Circuit created a circuit split that the Supreme Court of the United States may ultimately resolve. The defendant in the Second Circuit case has petitioned the Supreme Court to review that decision. Now that a circuit split is apparent, the Court may be more likely to intervene.

Against this uncertain backdrop, and with the wave of Meta Pixel and similar lawsuits continuing, businesses will need to carefully evaluate how their websites operate and whether they may be exposed to a VPPA claim. That review should also assess the effectiveness of any consent provisions on which the business relies to avoid liability. Businesses should also be aware of the risks posed by acquisition or merger targets whose data-sharing practices may implicate the VPPA. To mitigate the risk of liability, due diligence in any such transaction should include a thorough review of the target company’s data practices, its compliance with privacy regulations, and any ongoing or potential lawsuits tied to its use of tracking technology.

Recent Federal Developments for April 15, 2025

TSCA/FIFRA/TRI

EPA Releases New TSCA And FIFRA Enforcement Policies: On January 17, 2025, days before the end of President Biden’s term, the U.S. Environmental Protection Agency (EPA) released two new enforcement documents: (1) Expedited Settlement Agreement Pilot Program Under the Federal Insecticide, Fungicide, and Rodenticide Act (FIFRA) (FIFRA Settlement Pilot Program or Pilot Program); and (2) Interim Consolidated Enforcement Response and Penalty Policy (CERPP) for the Toxic Substances Control Act (TSCA) New and Existing Chemicals Program. Because both enforcement documents were prepared by the prior Administration, their enduring relevance, like that of so many other issues at EPA, is unclear. As new leadership fills the ranks of EPA program offices, including the Office of Enforcement and Compliance Assurance, we may learn more. For more information on these enforcement documents, please read our March 21, 2025, memorandum.

EPA Argues For Remand Of Final Rule Amending Risk Evaluation Framework: On March 21, 2025, the U.S. Court of Appeals for the District of Columbia Circuit heard oral argument in a case challenging EPA’s May 3, 2024, final rule amending the procedural framework rule for conducting risk evaluations under TSCA. United Steel, Paper and Forestry, Rubber, Manufacturing, Energy, Allied Industrial and Service Workers International Union (USW) v. EPA, Consolidated Case No. 24-1151. If you have a couple of hours to spare, listening to the argument is well worth the time. The court was particularly curious about the litigants’ request for a remand and probed deeply into the difference between a remand and a vacatur. Judge Rao bluntly asked what authority the court could rely on to remand the case. No answer was forthcoming, fueling speculation that the court will rule on the merits. According to EPA, the court should not rule on the case because the Agency plans to revise the rule and issue a new final rule by April 2026. The court expressed skepticism that EPA can complete a rulemaking so quickly. The court also questioned when TSCA requires that conditions of use (COU) be identified, whether making a single risk determination for a chemical is consistent with TSCA, and whether USW has standing to challenge the May 2024 rule’s provisions regarding personal protective equipment (PPE). More information is available in our March 31, 2025, blog item.

EPA Postpones Effective Date Of Certain Provisions Of TCE Risk Management Rule To June 20, 2025: On April 2, 2025, EPA announced that it is postponing until June 20, 2025, the effective date of certain provisions of its December 17, 2024, final risk management rule for trichloroethylene (TCE). 90 Fed. Reg. 14415. EPA states in the April 2, 2025, notice that, in light of pending litigation, it has reconsidered its earlier denial of an administrative stay pending judicial review and has determined that justice requires a 90-day postponement of the effective date of the conditions for each of the TSCA Section 6(g) exemptions. According to EPA, petitioners allege that because the interim workplace conditions would require petitioners to reduce TCE exposure levels to the interim existing chemical exposure limit (ECEL) of 0.2 parts per million (ppm), the final rule effectively requires the use of PPE “that cannot feasibly be worn all day, and therefore could cause petitioners to cease operations.” EPA notes that although it does not concede these allegations, “petitioners have raised significant legal challenges and allege significant harms as a result of the workplace conditions required by the final rule’s TSCA section 6(g) exemptions.” EPA adds that “[m]oreover, a limited postponement that maintains the status quo for these uses appropriately balances the alleged harm to petitioners and other entities with critical uses against the public interest in the health protections that will be afforded by the broader TCE prohibitions and workplace protections going into effect.”

EPA Announces Changes To Pesticide Data Submission Process For Data Matrix Form: On April 3, 2025, EPA announced changes to how the data matrix form (EPA Form 8570-35) is submitted, stating that the changes simplify the process by which companies submit data to EPA as part of a pesticide registration package. EPA states that the changes will also make its processing of this information more efficient. Companies are required to submit a data matrix form when their pesticide registration packages contain submitted data or cited data from outside sources. Previously, companies submitted two versions of the data matrix form (in either paper or electronic format): one for internal EPA use and one with reference data redacted for public use. EPA states that, in the interest of reducing burden and because no information on the data matrix form is confidential business information (CBI), it has determined that a redacted version is unnecessary and now requires only one unredacted version of the form for both internal and public use. Additionally, EPA will no longer accept paper submissions of this form and will accept this information only through a web-based portal.

Additional information on the new update is available in EPA’s recently issued Pesticide Registration (PR) Notice 2025-1. Instructions on how to complete and submit the revised forms will be available in the updated Pesticide Registration Manual.

EPA Proposes SNURs For Certain Chemical Substances: On April 4, 2025, EPA proposed significant new use rules (SNURs) for certain chemical substances that were the subject of premanufacture notices (PMNs) and are also subject to an Order issued by EPA pursuant to TSCA. 90 Fed. Reg. 14743. The SNURs require persons who intend to manufacture (defined by statute to include import) or process any of these chemical substances for an activity that is proposed as a significant new use by this rulemaking to notify EPA at least 90 days before commencing that activity. The required notification initiates EPA’s evaluation of the conditions of that use for that chemical substance. In addition, manufacture or processing for the significant new use may not commence until EPA has reviewed the required notification, made an appropriate determination regarding that notification, and taken any actions required by that determination. Comments are due May 5, 2025.

RCRA/CERCLA/CWA/CAA/PHMSA/SDWA

States Take Action To Regulate And Limit PFAS In Industrial Effluent Despite Federal Inaction: On January 21, 2025, EPA’s proposed rule seeking to set effluent limitation guidelines for certain PFAS under the Clean Water Act (CWA) was withdrawn from Office of Management and Budget (OMB) review following President Trump’s Executive Order (EO) implementing a regulatory freeze. Federal action may be halted, but states are beginning to enact legislation addressing PFAS in industrial effluent. Such laws remain sparse; Maryland is the most recent state to establish a robust framework that requires industrial sources to limit PFAS in effluent. A handful of other states have laws establishing monitoring and reporting protocols for PFAS in industrial effluent, and other states plan to implement similar frameworks. While these efforts are not yet widespread, heightened scrutiny of PFAS use suggests that more states will seek to monitor and limit PFAS in industrial effluent. For more information, please read our March 28, 2025, memorandum.

EPA Accepts Requests For Presidential Exemption Under CAA Section 112: On March 12, 2025, EPA set up an electronic mailbox to allow the regulated community to request a Presidential Exemption under Section 112(i)(4) of the Clean Air Act (CAA). EPA states that the CAA allows the President to exempt stationary sources of air pollution from compliance with any standard or limitation under Section 112 for up to two years if the technology to implement the standard is not available and it is in the national security interests of the United States to do so. EPA notes that submitting a request does not entitle the submitter to an exemption and that the President will make a decision on the merits. An exemption may be extended for up to two additional years and can be renewed, if appropriate. Requests were due March 31, 2025.

EPA Extends Reporting Deadline Under GHG Reporting Rule For 2024 Data: On March 20, 2025, EPA extended the reporting deadline under the Greenhouse Gas (GHG) Reporting Rule for reporting year 2024 data from March 31, 2025, to May 30, 2025. 90 Fed. Reg. 13085. The rule changes only the reporting deadline for annual GHG reports for reporting year 2024. It does not change the reporting deadline for future years, and it does not change the requirements for what regulated entities must report. The rule was effective March 20, 2025.

EPA And Army Corps Of Engineers Announce WOTUS Listening Sessions And Solicit Stakeholder Feedback: On March 24, 2025, EPA and U.S. Army Corps of Engineers announced that they will hold listening sessions on specific key topic areas to hear interested stakeholders’ perspectives on defining “waters of the United States” (WOTUS) consistent with the Supreme Court’s interpretation of the scope of CWA jurisdiction and how to implement that interpretation as the agencies consider next steps. 90 Fed. Reg. 13428. The listening sessions will be held in-person with a virtual option for states, Tribes, industry and agricultural stakeholders, environmental and conservation stakeholders, and the general public. The agencies seek input from a full spectrum of co-regulators and stakeholders on key topic areas related to the definition of WOTUS in light of Sackett v. EPA regarding “continuous surface connection,” “relatively permanent,” and jurisdictional versus non-jurisdictional ditches. The agencies also seek input on implementation challenges related to those key topic areas. Information on the listening sessions is available on EPA’s website. The agencies are also accepting written recommendations from members of the public via a recommendations docket. Written recommendations are due April 23, 2025.

EPA Issues Partial Stay Of Integrated Iron And Steel Manufacturing Facilities Technology Review: By a letter dated August 14, 2024, and supplemented by a letter dated March 5, 2025, the EPA’s Office of Air and Radiation announced the convening of a proceeding for reconsideration of certain requirements in the final rule “National Emission Standards for Hazardous Air Pollutants: Integrated Iron and Steel Manufacturing Facilities Technology Review,” published on April 3, 2024. On March 31, 2025, EPA issued a final rule that stayed provisions establishing compliance deadlines in 2025 for requirements that were added or revised by the April 3, 2024, final rule for 90 days pending reconsideration. 90 Fed. Reg. 14207. EPA states that it will reconsider the following topics from two petitions pursuant to CAA Section 307(d)(7)(B): work practice standards for unmeasured fugitive and intermittent particulate from unplanned bleeder valve openings; the opacity limit for planned bleeder valve openings; work practice standards for bell leaks; and the opacity limit for slag processing and handling. The final rule was effective March 31, 2025.

EPA Will Review NESHAP For Brick And Structural Clay Products Manufacturing And Clay Ceramics Manufacturing: On March 31, 2025, EPA requested comments on its review, under Section 610 of the Regulatory Flexibility Act, of the National Emission Standards for Hazardous Air Pollutants (NESHAP) for Brick and Structural Clay Products Manufacturing and for Clay Ceramics Manufacturing (Brick and Clay 610 Review). 90 Fed. Reg. 14227. EPA states that, as part of this review, it will consider comments on the following factors: the continued need for the rule; the nature of complaints or comments received concerning the rule; the complexity of the rule; the extent to which the rule overlaps, duplicates, or conflicts with other federal, state, or local government rules; and the degree to which technology, economic conditions, or other factors have changed in the areas affected by the rule. Comments are due May 30, 2025.

EPA Will Review New Science On Fluoride In Drinking Water: EPA announced on April 7, 2025, that it will “expeditiously review new scientific information on potential health risks of fluoride in drinking water.” According to EPA, the evaluation will inform its decisions on the standard for fluoride under the Safe Drinking Water Act (SDWA). EPA notes that the National Toxicology Program (NTP) released a report in August 2024 concluding with “moderate confidence” that fluoride exposure above 1.5 milligrams per liter is associated with lower intelligence quotient (IQ) in children, and that more research is needed to better understand whether exposure to lower fluoride concentrations poses health risks. EPA states that it “is committing to conduct a thorough review of these findings and additional peer reviewed studies to prepare an updated health effects assessment for fluoride that will inform any potential revisions to EPA’s fluoride drinking water standard.”

FDA

FDA Provides Summary Data On Cosmetic Product Facility And Product Registration Listing: On March 13, 2025, the U.S. Food and Drug Administration (FDA) published a tabulated summary of the data collected through its mandatory registration of cosmetic product facilities and listing of cosmetic products under the Modernization of Cosmetics Regulation Act of 2022 (MoCRA). MoCRA requires manufacturers and processors to register their facilities and to renew those registrations every two years. This requirement includes providing details on the types of cosmetic products manufactured or processed at each facility and a list of ingredients used in those products. The summary includes the number of registered domestic and foreign facilities as of January 1, 2025: 1,800 domestic facilities and 7,732 foreign facility registrations, with China accounting for the highest number of facility registrations, at 4,260.

FDA Announces Chemical Contaminants Tool: On March 20, 2025, FDA announced the Chemical Contaminants Transparency Tool for foods, an online database listing the contaminant levels FDA uses to evaluate potential health risks of contaminants in human foods. The tool lists thresholds (e.g., action levels, recommended maximum levels) for contaminants in foods, such as heavy metals and pesticides. The FDA Acting Commissioner stated that “Ideally there would be no contaminants in our food supply, but chemical contaminants may occur in food when they are present in the growing, storage or processing environments.”

FDA Intends To Extend Compliance Date For FSMA Program: On March 20, 2025, FDA announced its intention to extend the compliance date for the Food Safety Modernization Act (FSMA) Food Traceability Rule by 30 months. An excerpt from the announcement states that “FDA intends to use the extended time period to continue the agency’s work with stakeholders, including by participating in cross-sector dialogue to identify solutions to implementation challenges and by continuing to provide technical assistance, tools, and other resources to assist industry with implementation.” Additional details on the Food Traceability Rule are available on FDA’s website.

NANOTECHNOLOGY

EC Scientific Committee Begins Public Consultation On Preliminary Opinion On Hydroxyapatite (Nano): On April 3, 2025, the European Commission’s (EC) Scientific Committee on Consumer Safety (SCCS) began a public consultation on its preliminary opinion on the safety of hydroxyapatite (nano) in oral products. The EC asked SCCS whether it considers hydroxyapatite (nano) safe when used in toothpaste up to a maximum concentration of 29.5 percent and in mouthwash up to a maximum concentration of ten percent according to the specifications reported in the submission, taking into account reasonably foreseeable exposure conditions. According to the preliminary opinion, based on the data provided, SCCS considers hydroxyapatite (nano) safe when used at concentrations up to 29.5 percent in toothpaste and up to ten percent in mouthwash. Comments on the preliminary opinion are due May 30, 2025. More information is available in our April 9, 2025, blog item.

EUON Publishes Nanopinion On Enhancing The Regulatory Application Of NAMs To Assess Nanomaterial Risks In The Food And Feed Sector: On April 8, 2025, the EU Observatory for Nanomaterials (EUON) published a Nanopinion entitled “A Qualification System to Accelerate Development and Regulatory Implementation of New Approach Methodologies (NAMs).” The authors explain how the NAMs4NANO project, funded by the European Food Safety Authority (EFSA), enhances the regulatory application of NAMs for assessing nanomaterial risks in the food and feed sector. The authors propose establishing three qualification programs, covering NAMs for nanomaterial physicochemical characterization, characterization of nanomaterials in relevant biological fluids, and toxicity screening. More information is available in our April 15, 2025, blog item.

PUBLIC POLICY AND REGULATION

What It Means To Be “Essential” In The Federal Workforce: Current news on the government efficiency and reform front concerns the near-miss of a government shutdown (the budget would have lapsed at midnight on March 14, 2025). One reason some cited for avoiding a shutdown was that it might encourage or aid attempts to eliminate positions based on whether they were deemed “essential.” Although the shutdown was averted, the essential/non-essential distinction will have little meaningful impact on workforce planning either way. Federal agencies have long maintained plans for a possible shutdown, especially in recent years, identifying which positions are needed if a budget is not authorized in time. Because these designations have already been made, if the categorization were useful as a mechanism for making personnel decisions, it would be available whether or not a shutdown occurred. For more thoughts on this issue, please read our March 19, 2025, blog item.

Reorganize EPA? A Very Old Idea: Recent press reports describe rumors of significant (some fear catastrophic) budget cuts at EPA. The organization of EPA, and how that organization affects its effectiveness, has been an issue since the Agency’s founding. From its earliest days, there have been proposals to make EPA a cabinet-level Department. During the George H.W. Bush Administration, the EPA policy office developed a comprehensive draft of possible ways to reorganize the underlying environmental legislation, along with a parallel EPA structure, to knit the programs and statutes together more coherently. Most recently, and perhaps most importantly given the current Administration’s efforts at government reform, the subject of “reorganizing” EPA is included as a chapter in the Project 2025 report. That chapter has led many to fear that budget and personnel cuts are part of a larger plan to upend the Agency. Recent press reports (such as The Washington Post’s March 27, 2025, article, “Internal White House document details layoff plans across U.S. agencies”) indicate that the “plan” for EPA is to cut 10 percent of its workforce. That would seem to be less than some of the aggregated possibilities discussed in the Project 2025 chapter, but it could still include “firing up to 1,115 people” from the Office of Research and Development (ORD) alone. Project 2025 suggests large cuts to regional offices, elimination of the Integrated Risk Information System (IRIS) program, a “reorganization” of the enforcement office and environmental justice programs, and other changes that would seem to add up to less than a 10 percent cut to the workforce. Attrition alone is estimated to average 6 percent, and early retirements, accelerated by, among other things, fear of possible cuts, would likely bring the total to 10 percent or more.

But the goal of reforming, reorganizing, or reducing the workforce is neither a new idea nor one lacking merit. EPA’s organizational structure has been under review since its inception. At present, however, the lack of a cohesive or consistent approach raises significant questions not only about the underlying motivation but also about the ultimate impact of the effort. “Less bureaucracy” does not necessarily mean fewer staff. And as some government functions contend with seemingly disorganized staff reductions, public resentment of “the bureaucracy” may only increase. Something to think about as we wait (and hope) to receive our Social Security check, passport, or pesticide registration on time. More information is available in our April 2, 2025, blog item.

The Clock Is Ticking For Republicans To Use The Congressional Review Act: Congress has approximately one month left to use the Congressional Review Act (CRA) to undo qualifying regulations issued by the Biden Administration. According to an updated analysis by Bloomberg Government, the window for expedited repeal of Biden Administration rules is estimated to close on May 8, 2025, giving Congress about four weeks to act on the dozens of pending CRA bills. More information is available in our April 10, 2025, blog item.

LEGISLATIVE

House Bill Would Codify EPA’s Office Of Children’s Health Protection: On March 25, 2025, Representatives Jerrold Nadler (D-NY), John Garamendi (D-CA), and Kathy Castor (D-FL) reintroduced the Children’s Health Protection Act of 2025 (H.R. 2339), which would codify into law the Office of Children’s Health Protection (OCHP), the only office within EPA dedicated to children’s health. According to Nadler’s March 25, 2025, press release, this office would be responsible for rulemaking, policy, enforcement actions, research, and applications of science that focus on prenatal and childhood vulnerabilities, safe chemicals management, and coordination of community-based programs. The bill would also make the EPA Children’s Health Protection Advisory Committee a permanent advisory committee. The committee would advise the EPA Administrator on the activities of OCHP and on all relevant information regarding regulations, research, and communications related to children’s health, and would continue to serve EPA in protecting children from environmental harm.

Nitrate And Arsenic In Drinking Water Act Reintroduced In The House: On April 3, 2025, Representatives David Valadao (R-CA) and Norma Torres (D-CA) reintroduced the Nitrate and Arsenic in Drinking Water Act (H.R. 2656). According to Valadao’s April 3, 2025, press release, the bipartisan bill would:

Amend the SDWA to provide grants for nitrate and arsenic reduction;

Authorize $15 million for fiscal year 2026 and every fiscal year after; and

Direct the EPA Administrator to conduct a review of programs under the SDWA, taking into consideration the diverse needs of underserved populations.

Bipartisan Bill Would Clean Up Marine Debris: On April 3, 2025, Representatives Suzanne Bonamici (D-OR), Amata Coleman Radewagen (R-American Samoa-At Large), and James Moylan (R-Guam-At Large) introduced the Save Our Seas (S.O.S.) 2.0 Amendments Act of 2025 (H.R. 2620) to strengthen efforts to combat marine debris and protect the ocean. According to Bonamici’s April 3, 2025, press release, the bipartisan bill builds upon the success of the Save Our Seas 2.0 Act and provides greater flexibility to the National Oceanic and Atmospheric Administration (NOAA) to work with other stakeholders in marine debris prevention and removal efforts.

MISCELLANEOUS

Canada Releases Final State Of PFAS Report And Proposed Risk Management Approach: On March 5, 2025, Environment and Climate Change Canada (ECCC) announced the availability of its final State of Per- and Polyfluoroalkyl Substances (PFAS) Report (State of PFAS Report) and proposed risk management approach for PFAS, excluding fluoropolymers. The State of PFAS Report concludes that the class of PFAS, excluding fluoropolymers, is harmful to human health and the environment. To address these risks, Canada proposed on March 8, 2025, to add the class of PFAS, excluding fluoropolymers, to Part 2 of Schedule 1 to the Canadian Environmental Protection Act, 1999 (CEPA). ECCC states that it will prioritize the protection of health and the environment while considering factors such as the availability of alternatives. Phase 1, starting in 2025, will address PFAS in firefighting foams to better protect firefighters and the environment. Phase 2 will focus on limiting exposure to PFAS in products that are not needed for the protection of human health, safety, or the environment. ECCC notes that this will include products like cosmetics, food packaging materials, and textiles. ECCC states that it will publish a final decision on the proposed addition of 131 individual PFAS to the National Pollutant Release Inventory (NPRI), with reporting due by June 2026 for PFAS releases that occurred during the 2025 calendar year. ECCC states that these data will improve its understanding of how PFAS are used in Canada, help it evaluate possible industrial PFAS contamination, and support efforts to reduce environmental and human exposure to harmful substances. Comments on the proposed risk management approach and the proposed order to add the class of PFAS, excluding fluoropolymers, to CEPA Schedule 1 Part 2 are due May 7, 2025. More information is available in our March 24, 2025, memorandum.

Amazon Files Suit Against CPSC, Challenging CPSC’s Determination That Amazon Is A Distributor: On March 14, 2025, Amazon filed suit against the Consumer Product Safety Commission (CPSC) in the U.S. District Court for the District of Maryland, challenging CPSC’s July 29, 2024, and January 16, 2025, orders determining that Amazon is “a ‘distributor’ of certain products that are defective or fail to meet federal consumer product safety standards, and therefore bears legal responsibility for their recall.” According to CPSC’s January 17, 2025, announcement, “[m]ore than 400,000 products are subject to this Order: specifically, faulty carbon monoxide (CO) detectors, hairdryers without electrocution protection, and children’s sleepwear that violated federal flammability standards.” CPSC determined that the products, listed on Amazon.com and sold by third-party sellers using the Fulfillment by Amazon (FBA) program, pose a “substantial product hazard” under the Consumer Product Safety Act (CPSA). In its complaint, Amazon argues that CPSC “overstepped” the statutory limits of the CPSA by ordering “a wide-ranging recall of products that were manufactured, owned, and sold by third parties,” not Amazon itself. Amazon states that CPSC’s recall order “relies on an unprecedented legal theory that stretches the [CPSA] beyond the breaking point and fails to discharge” CPSC’s obligations under the Administrative Procedure Act (APA). More information is available in our March 20, 2025, blog item.

NSF Announces PFAS-Free Certification For Nonfood Compounds And Food Equipment Materials: On March 24, 2025, NSF announced the release of NSF Certification Guideline 537: PFAS-Free Products for Nonfood Compounds and Food Equipment Materials (NSF 537). The press release states that to be certified, nonfood compound products “must first be registered under NSF’s Nonfood Compounds Guidelines or certified by NSF to ISO 21469, Safety of Machinery, Lubricants with Incidental Product Contact-Hygiene Requirements.” According to the press release, food equipment materials “must be certified to NSF/ANSI Standard 51: Food Equipment Materials to ensure that products meet minimum public health and sanitation requirements.” The press release notes that “PFAS-Free means that the product contains no intentionally added PFAS, no post-consumer recycled material, no intentionally used PFAS additives (PPA, etc.) and the Total Organic Fluorine [(TOF)] is less than 50 ppm.” Certification will require that TOF levels be retested annually. NSF will add certified nonfood compounds to the NSF White Book™ and add certified food equipment materials to the NSF Certified Food Equipment listing.

Petitions Filed To Add Chemicals To List Of Chemical Substances Subject To Superfund Excise Tax: On April 2 and April 3, 2025, the Internal Revenue Service (IRS) announced that petitions have been filed to add the following chemicals to the list of taxable substances:

Polyisobutylene (90 Fed. Reg. 14521): Petition filed by TPC Group, Inc., an exporter of polyisobutylene;

Acrylonitrile butadiene styrene (90 Fed. Reg. 14687): Petition filed by Trinseo LLC, an importer and exporter of acrylonitrile butadiene styrene;

Acrylonitrile-butadiene rubber (90 Fed. Reg. 14684): Petition filed by Arlanxeo USA LLC and Arlanxeo Canada Inc., importers and exporters of acrylonitrile-butadiene rubber;

Chloroprene rubber (90 Fed. Reg. 14691): Petition filed by Arlanxeo USA LLC and Arlanxeo Canada Inc., importers and exporters of chloroprene rubber;

Emulsion styrene butadiene rubber (90 Fed. Reg. 14692): Petition filed by Michelin North America, Inc., an importer of emulsion styrene butadiene rubber;

Emulsion styrene-butadiene rubber (90 Fed. Reg. 14686): Petition filed by Arlanxeo USA LLC and Arlanxeo Canada Inc., importers and exporters of emulsion styrene-butadiene rubber;

Ethylene vinyl acetate (VA < 50 percent) (90 Fed. Reg. 14688): Petition filed by Arlanxeo USA LLC and Arlanxeo Canada Inc., importers and exporters of ethylene vinyl acetate (VA < 50 percent);

Ethylene vinyl acetate (VA ≥ 50 percent) (90 Fed. Reg. 14683): Petition filed by Arlanxeo USA LLC and Arlanxeo Canada Inc., importers and exporters of ethylene vinyl acetate (VA ≥ 50 percent);

Ethylene-propylene-ethylidene norbornene rubber (90 Fed. Reg. 14695): Petition filed by Arlanxeo USA LLC and Arlanxeo Canada Inc., importers and exporters of ethylene-propylene-ethylidene norbornene rubber;

Hydrogenated acrylonitrile-butadiene rubber (90 Fed. Reg. 14686): Petition filed by Arlanxeo USA LLC and Arlanxeo Canada Inc., importers and exporters of hydrogenated acrylonitrile-butadiene rubber;

Hydrogenated acrylonitrile-butadiene rubber (90 Fed. Reg. 14685): Petition filed by Zeon Chemicals L.P., an importer and exporter of hydrogenated acrylonitrile-butadiene rubber;

Isobutene-isoprene rubber (90 Fed. Reg. 14689): Petition filed by Arlanxeo USA LLC and Arlanxeo Canada Inc., importers and exporters of isobutene-isoprene rubber;

Solution styrene-butadiene rubber (90 Fed. Reg. 14690): Petition filed by Arlanxeo USA LLC and Arlanxeo Canada Inc., importers and exporters of solution styrene-butadiene rubber;

Bromo-isobutene-isoprene rubber (90 Fed. Reg. 14694): Petition filed by Arlanxeo USA LLC and Arlanxeo Canada Inc., importers and exporters of bromo-isobutene-isoprene rubber;

Poly(ethylene-propylene) rubber (90 Fed. Reg. 14690): Petition filed by Arlanxeo USA LLC and Arlanxeo Canada Inc., importers and exporters of poly(ethylene-propylene) rubber;

Solution styrene-butadiene rubber (90 Fed. Reg. 14693): Petition filed by Michelin North America, Inc., an importer of solution styrene-butadiene rubber; and

Styrene-acrylonitrile (90 Fed. Reg. 14693): Petition filed by Trinseo LLC, an importer and exporter of styrene-acrylonitrile.

Comments on the petitions are due June 2, 2025.

Maine Board Approves Motion To Adopt Rule On PFAS In Products; CUU Proposals For Products Prohibited As Of January 1, 2026, Are Due June 1, 2025: As reported in our April 1, 2025, blog item, the Maine Board of Environmental Protection (MBEP) was scheduled to consider the Maine Department of Environmental Protection’s (MDEP) December 2024 proposed rule regarding products containing PFAS during its April 7, 2025, meeting. As reported in our December 31, 2024, memorandum, on December 20, 2024, MDEP published a proposed rule that would establish criteria for currently unavoidable uses (CUU) of intentionally added PFAS in products and implement sales prohibitions and notification requirements for products containing intentionally added PFAS but determined to be a CUU. During the April 7, 2025, meeting, MBEP unanimously approved a motion to adopt the Chapter 90 rule, the Chapter 90 basis statement, and MDEP’s response to comments “as presented and with correction of minor typographical errors, and the addition of ‘Maine Department of Transportation’ at section 4(A)(8),” according to MBEP’s draft meeting minutes. Under the approved rule, CUU requests for products scheduled to be prohibited January 1, 2026, are due June 1, 2025. The products containing intentionally added PFAS that are scheduled to be prohibited include:

Cleaning products;

Cookware;

Cosmetics;

Dental floss;

Juvenile products;

Menstruation products;

Textile articles, except outdoor apparel for severe wet conditions and textile articles that are included in or a component part of a watercraft, aircraft or motor vehicle, including an off-highway vehicle;

Ski wax; or

Upholstered furniture.

The January 1, 2026, prohibition also applies to any of the listed products that do not themselves contain intentionally added PFAS but are sold, offered for sale, or distributed for sale in a fluorinated container or a container that otherwise contains intentionally added PFAS. More information is available in our April 11, 2025, blog item.

OMB RFI Seeks Proposals To Rescind Or Replace Regulations: On April 11, 2025, the Office of Management and Budget (OMB) published a request for information (RFI) to solicit ideas for deregulation. 90 Fed. Reg. 15481. OMB seeks proposals to rescind or replace regulations “that stifle American businesses and American ingenuity,” including regulations “that are unnecessary, unlawful, unduly burdensome, or unsound.” According to the notice, “comments should address the background of the rule and the reasons for the proposed rescission, with particular attention to regulations that are inconsistent with statutory text or the Constitution, where costs exceed benefits, where the regulation is outdated or unnecessary, or where regulation is burdening American businesses in unforeseen ways.” Comments are due May 12, 2025. Earlier in the week, on April 9, 2025, President Trump issued the following memorandum and EOs regarding federal regulations:

Presidential Memorandum Regarding Directing the Repeal of Unlawful Regulations;

EO on Reducing Anti-Competitive Regulatory Barriers; and

EO on Zero-Based Regulatory Budgeting to Unleash American Energy.

More information is available in our April 14, 2025, blog item.

Former DOL Officials Urge Federal Contractors to Continue Lawful Diversity Practices Despite Trump Administration’s Efforts to End DEI

On April 15, 2025, a group of former U.S. Department of Labor officials issued an “open letter” urging federal contractors to continue voluntary diversity practices, including conducting self-assessments, despite the Trump administration’s attacks on diversity, equity, and inclusion (DEI) programs and the revocation of Executive Order (EO) 11246, which mandated federal contractors’ affirmative action and non-discrimination obligations.

Quick Hits

A group of former U.S. Department of Labor officials is urging federal contractors to maintain their voluntary diversity practices despite the Trump administration’s revocation of Executive Orders supporting affirmative action and anti-discrimination efforts.

The officials assert that continued diversity, equity, and inclusion programs are essential for compliance with federal laws and for promoting equal opportunity in the workplace.

The open letter, signed by ten former DOL officials, argued that President Donald Trump lacks authority to end voluntary programs designed to promote compliance with federal, state, and local antidiscrimination laws, including Title VII of the Civil Rights Act of 1964. The letter said federal contractors “should carefully weigh the risks of backing away from employment practices to promote equal opportunity for all.”

The open letter addressed President Trump’s January 21, 2025, EO 14173, which seeks to end “illegal” DEI and diversity, equity, inclusion, and accessibility (DEIA) programs. The order revoked the former EO 11246, which had prohibited discrimination by federal contractors based on race, color, religion, and national origin, and it stripped the Office of Federal Contract Compliance Programs (OFCCP) of much of its authority to enforce federal contractors’ compliance with federal laws and regulations requiring nondiscrimination.

Additionally, the letter comes after statements from newly appointed OFCCP Director Catherine Eschbach, calling OFCCP’s prior activities “contradictory to the nation’s laws” and indicating the agency is considering enforcement actions to “deter DEI programs and principles.”

However, the former DOL officials argued that President Trump’s actions contradict well-established laws, override congressional mandates to prevent discrimination, violate due process and free speech, and seek to retroactively impose liability on contractors for their good faith efforts to comply with EO 11246 prior to its revocation. They further questioned whether OFCCP even has the authority to investigate and take enforcement actions against contractors for their DEIA programs, particularly following the revocation of EO 11246.

“Because the Administration’s actions are legally unsound and harmful to workers, employers, and America’s economy, we urge federal contractors to carefully evaluate how they can best achieve the equal opportunity commitments they have made through their diversity, equity, inclusion, and accessibility programs,” the letter said. “These programs not only serve important business and risk management objectives, but also uphold fundamental civil rights protections and promote fair treatment and opportunity for all workers.”

The open letter was signed by former OFCCP Directors Jenny R. Yang, 2021–2023, and Patricia A. Shiu, 2009–2016, and joined by Pamela Coukos, OFCCP senior advisor 2011–2016; Donna Lenhoff, OFCCP senior civil rights advisor 2011–2017; Seema Nanda, solicitor of labor 2021–2025; Patrick O. Patterson, OFCCP deputy director 2014–2017; Maya Raghu, OFCCP deputy director, policy 2021–2023; Dariely Rodriguez, OFCCP chief of staff 2021–2022; M. Patricia Smith, solicitor of labor 2010–2017; and Shirley J. Wilcher, deputy assistant secretary for OFCCP 1994–2001.

‘Lawful and Effective Tools to Ensure Equal Opportunity’

The former DOL officials argued in the letter that federal contractors may and should continue voluntary diversity efforts. The letter pointed to specific practices followed by some leading employers to prevent discrimination:

Proactive Barrier Analyses—The open letter urged contractors to continue self-assessments that identify and remove obstacles to equal employment opportunity, such as examining hiring processes to understand why qualified candidates with certain backgrounds are rejected. The letter argues that such analyses remain fully lawful and prevent discrimination.

Collecting and Analyzing Workforce Data—The letter said that despite the revocation of EO 11246, federal contractors “should continue to collect and analyze applicant flow and workforce data.” Such collection is “a critical part of determining whether an employer has unlawful employment practices,” the letter said.

Well-Crafted Benchmarks—The letter further argued that “well-crafted benchmarks or aspirational goals” remain a “useful tool for employers.” According to the letter, appropriate benchmarks should rest on an analysis of the labor pool, set “realistic goals” based on the necessary qualifications and location, and include safeguards to ensure that job decisions remain focused on qualifications, skills, and merit without excluding qualified candidates based on protected characteristics. The letter said such benchmarks “are not quotas and are not discriminatory.”

Next Steps

The Trump administration’s scrutiny of DEI and DEIA programs has caused some uncertainty for federal contractors and other private employers. The former DOL officials’ letter argues that certain programs may be lawful and further antidiscrimination compliance.

The letter comes after a similar letter by a group of former U.S. Equal Employment Opportunity Commission (EEOC) officials, which also included former OFCCP Director Yang. That letter argued that many employer diversity programs could remain lawful, including antidiscrimination and harassment training, employee resource groups/affinity groups, broad-based recruitment efforts, and data collection, if certain safeguards are followed.

Still, given the uncertainty, employers may want to review or audit all their existing DEI or DEIA programs or initiatives to determine if they align with lawful practices under applicable federal antidiscrimination laws. As part of such reviews, employers may consider internal analysis or assessments conducted under the protection of attorney-client privilege. Additionally, employers may want to consider conducting proactive, privileged, and voluntary analyses of certain workforce analytics to ensure compliance with federal, state, and local laws.

Texas Attorney General Investigating “Healthy” Claims in Cereal

On April 5, 2025, Texas Attorney General Ken Paxton announced an investigation of W.K. Kellogg Co. (Kellogg) for potential violations of Texas consumer protection laws. He alleges that Kellogg’s marketing of its cereals as “healthy” is deceptive because the cereals contain artificial food dyes and butylated hydroxytoluene (BHT).

As we previously reported, the Texas Senate recently passed SB 25, which, if enacted, would require food labels to warn Texas consumers if a food product contains ingredients banned in other countries. The bill is now under review by the Texas House Committee on Public Health. AG Paxton’s announcement signals the Texas government’s continued focus on food additives and “healthy” claims by food manufacturers.

AG Paxton alleges that Kellogg’s “healthy” claim is deceptive because the artificial dyes “have been linked to hyperactivity, obesity, autoimmune disease, endocrine-related health problems, and cancer in those who consume them.” However, not all food scientists agree with this link to health issues, and many of the food dyes and additives are currently approved for use by the U.S. Food and Drug Administration (FDA).

Keller and Heckman will continue to monitor this investigation and relay any developments.

Supreme Court Decisions Cited for Regulatory Repeal Effort in Latest White House Memo

On April 9, 2025, the White House issued a memorandum titled “Directing the Repeal of Unlawful Regulations,” directing agency heads to repeal rules without notice and comment, where doing so is consistent with the “good cause” exception in the Administrative Procedure Act (5 U.S.C. 553(b)(3)(B)). The “good cause” exception allows agencies to dispense with notice-and-comment rulemaking when that process would be “impracticable, unnecessary, or contrary to the public interest.”

This review-and-repeal effort directs agencies to evaluate existing regulations’ lawfulness under several recent Supreme Court decisions, such as Loper Bright Enterprises v. Raimondo and West Virginia v. EPA, which limited the power of federal agencies to promulgate regulations absent explicit congressional authorization.

The memorandum is intended to reinforce Executive Order 14219, issued on February 19, 2025, which directed the heads of all executive departments and agencies to identify categories of unlawful and potentially unlawful regulations within 60 days and begin plans to repeal them.

Following the 60-day review period ordered in Executive Order 14219, agencies are instructed to immediately take steps to effectuate the repeal of any regulation, or the portion of any regulation, that “clearly exceeds the agency’s statutory authority or is otherwise unlawful.”

Keller and Heckman will continue to monitor developments related to the repeal of regulations and provide updates on how these changes impact regulated industries.

Termination of Humanitarian Parole for Citizens of Cuba, Haiti, Nicaragua, Venezuela Blocked by Federal Court

U.S. District Court Judge Indira Talwani issued an order on April 14, 2025, blocking DHS’s March 25, 2025, decision to terminate Humanitarian Parole for individuals from Cuba, Haiti, Nicaragua, and Venezuela paroled into the United States under the CHNV program. The judge also certified the case as a class action.

The CHNV program allows approximately 450,000 people to live and work legally in the United States. On March 25, 2025, DHS announced that it was terminating, as of April 24, 2025, the CHNV program and revoking work authorization issued under the program.

Pursuant to Judge Talwani’s order, DHS has been enjoined from terminating CHNV parole for all beneficiaries by Federal Register notice. While the order is in effect, CHNV beneficiaries’ humanitarian parole and related work authorization documents will expire on the dates listed on their humanitarian parole approval notices/I-94s and related work authorization documents. Judge Talwani’s ruling specifies that DHS cannot revoke a CHNV beneficiary’s humanitarian parole and related work authorization prior to the stated expiration date without a review of the beneficiary’s individual case.

Finally, the ruling states that all CHNV revocation notices sent to CHNV beneficiaries are stayed pending further court order.

Judge Talwani stated that DHS’s decision to terminate the CHNV program will force CHNV beneficiaries to “choose between two injurious options: continue following the law and leave the country on their own, or await removal proceedings.… The first option will expose Plaintiffs to dangers in their native countries.… The second option will put Plaintiffs at risk of arrest and detention and, because Plaintiffs will be in the United States without legal status, undermine Plaintiff’s chances of receiving other forms of immigration relief in the future – potentially permanently.”

Australia and New Zealand Approve First Cell-Cultured Food Product

Food Standards Australia New Zealand (FSANZ) has approved a cell-cultured quail product produced by Vow, an Australian company. Under the approval, either the term “cell-cultured” or “cell-cultivated” must be displayed on the labeling.

The approval follows two rounds of public consultations on Vow’s novel food application, originally submitted in January 2023.

The approval will now be sent to the Food Ministers (of the Commonwealth, States and Territories, and New Zealand) who have 60 days to accept, amend, or seek a review of the proposed change to the Food Standards Code. If accepted, the product can then be commercialized.

Caffeine Warning Bill Introduced in House of Representatives

A new bill introduced in the U.S. House of Representatives would require a “high caffeine” warning on beverages that contain more than 150 milligrams of caffeine, as well as require manufacturers to declare the amount of caffeine in their products.

Representative Robert Menendez introduced H.R. 2511, the Sarah Katz Caffeine Safety Act, stating that “the bill is about transparency and safety.” The bill aims to prevent tragedies such as the death of Sarah Katz, a college student who died after drinking a highly caffeinated beverage. As we previously blogged, Katz’s parents filed a lawsuit alleging that Panera Bread Company’s “Charged Lemonade” caused their daughter’s death and that the beverage contained anywhere from 260 to 390 mg of caffeine, depending on the size of the beverage.

The bill would require menu items in chain restaurants containing at least 150 mg of caffeine to bear a statement such as “high caffeine” on the menu. In addition, the bill would amend Section 403 of the Federal Food, Drug, and Cosmetic Act to deem foods and dietary supplements containing more than 10 mg of caffeine misbranded. This would apply unless the label includes three items: the amount of caffeine in the product, a statement of whether the caffeine is naturally occurring or added, and an advisory statement regarding FDA’s daily recommended limit of caffeine for healthy adults.

The bill also directs FDA to define “added caffeine” and to review whether caffeine and other stimulants are generally recognized as safe (GRAS). Specifically, FDA would be directed to consider:

Whether caffeine should be considered GRAS;

The safety of caffeine or other stimulants, either alone or in a blend;

The safety of guarana, taurine, and similar substances in food and dietary supplements with added caffeine;

Thresholds for the amount of caffeine or blends of caffeine and other stimulants; and

Whether any regulations relating to caffeine in food and dietary supplements should be issued or updated.

Finally, the National Institutes of Health would be required to conduct or support a review of the effect of caffeine consumption in vulnerable populations, and FDA and CDC would be required to conduct a public education campaign on caffeine safety.

FDA’s webpage on caffeine indicates that 400 mg a day is “not generally associated with dangerous, negative effects,” but that the level of sensitivity can vary widely.

Keller and Heckman will continue to monitor this bill and other developments regarding caffeinated beverages.

Trump Issues Executive Order on “Restoring America’s Maritime Dominance”

Last week, President Trump issued an Executive Order (EO) titled “Restoring America’s Maritime Dominance,” emphasizing the urgent need to revitalize the domestic maritime industry.

The EO acknowledges the decline of the U.S. flag fleet and the nation’s minimal role in global commercial shipbuilding. To address this, the EO calls for a Maritime Action Plan (MAP), developed by the National Security Advisor and other officials, with the goal of strengthening shipbuilding capabilities, enhancing maritime workforce training, and ensuring adequate commercial vessel capacity for national security. The EO also outlines legislative proposals, funding mechanisms, and deregulatory initiatives to support these objectives, including tariffs on Chinese-built ships, financial incentives for U.S. shipyards, and expanded mariner training programs.

My colleagues recently co-authored an article outlining the more than 20 actions included in the EO. Please refer to the article (or reach out directly) for more detailed guidance on implementation and compliance requirements.

As the EO puts it: “It is the policy of the United States to revitalize and rebuild domestic maritime industries and workforce to promote national security and economic prosperity.”

www.supplychaindive.com/…

Chinese Invention Patent Grants Down Almost 21% in First Quarter of 2025; Trademark Registrations Down Almost 15%

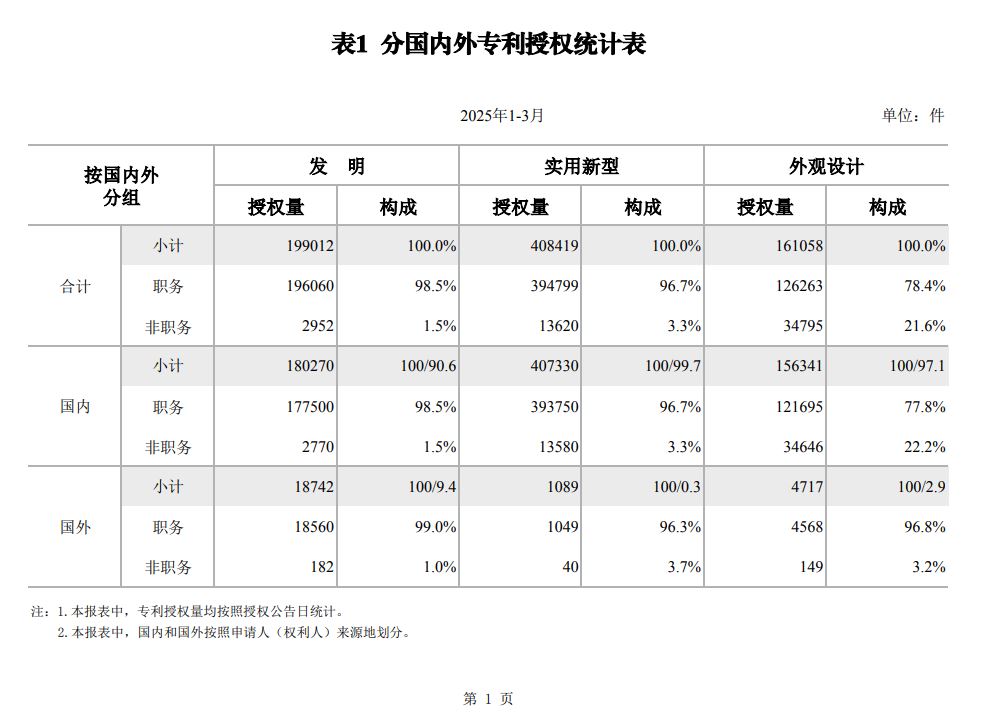

Per statistics released by China’s Intellectual Property Administration (CNIPA) on April 15, 2025, Chinese invention patent grants are down 20.99% in the first quarter of 2025 versus the first quarter of 2024. Specifically, the number of invention patents granted decreased by 52,870 to 199,012 grants. Utility model grants also dropped slightly by 2.33% year-on-year to 408,419 grants (down by 11,032 grants). However, design patent grants increased by 10.11% year-on-year to 161,058 grants (up by 14,788 grants). The number of trademark registrations from January to March 2025 decreased by 193,996 compared with that in 2024 (a year-on-year decrease of 14.97%).
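The short Python sketch below back-calculates each Q1 2024 baseline from the reported Q1 2025 counts and changes, then recomputes the year-on-year percentage. It uses only the figures quoted above; the dictionary layout and function name are our own.

```python
# Q1 2025 grant counts and year-on-year changes, as reported in the CNIPA figures above.
reported = {
    # category: (Q1 2025 grants, change vs. Q1 2024)
    "invention": (199_012, -52_870),
    "utility_model": (408_419, -11_032),
    "design": (161_058, +14_788),
}


def yoy_percent(current: int, change: int) -> float:
    """Percent change relative to the implied prior-year count (current - change)."""
    prior = current - change
    return 100.0 * change / prior


for category, (current, change) in reported.items():
    prior = current - change
    print(f"{category}: Q1 2024 = {prior:,}, YoY = {yoy_percent(current, change):+.2f}%")
```

Results by category:

- **Invention patents:** the implied Q1 2024 baseline is 251,882, and the recomputed change of about -20.99% matches the reported figure.
- **Design patents:** the implied baseline is 146,270, and the recomputed change of about +10.11% also matches.
- **Utility models:** the implied baseline is 419,451, which works out to roughly -2.63% rather than the stated 2.33%. One of the reported utility-model figures may therefore contain a typo.

The trademark figure cannot be checked the same way, because only the change and the percentage are given.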

CNIPA did not provide any reasons for the drop in invention patent grants, but the drop may be related to China’s push for quality over quantity, including:

the end of subsidies for patent grants in 2025;

the continuing crackdown on “abnormal” patent applications; and

setting a goal for high-value patents per 10,000 people (instead of only patents per 10,000 people).

In addition, the decrease in trademark and patent grants may be related to a slowdown in China’s economy, although grants would be a lagging indicator.

Note, though, that according to CNIPA’s 2025 budget, CNIPA expects over 5 million patent application filings this year and plans to examine over 2 million invention patent applications.

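The full data set is available here: 2025年3月国家知识产权局审查注册登记统计月报(外部版) (“CNIPA Monthly Statistical Report on Examination, Registration and Recordation, March 2025 (External Edition),” Chinese only).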

Pricing Under Pressure: Prepare for Enhanced Antitrust Scrutiny amid Tariff Uncertainty

In an era of increased tariff pressures, U.S. antitrust enforcers have signaled that they remain vigilant for attempts by businesses to exploit the situation through anticompetitive conduct, especially in sectors already strained by supply shocks, volatile input costs, and shifting demand patterns.

Roger Alford, Principal Deputy Assistant Attorney General at the U.S. Department of Justice’s Antitrust Division, recently emphasized the need for competition authorities to remain vigilant for signs of collusion and manipulation of dynamic pricing models, particularly as companies adjust to heightened tariff levels. He warned that the imposition of trade barriers — such as tariffs that could reduce competition from abroad — can lead to market concentration, reducing the number of active suppliers and thereby increasing the risk of coordinated pricing or supply restrictions.

A case in point is the DOJ’s recent investigation into record-high egg prices. Despite arguments that egg price inflation resulted from market factors such as avian influenza outbreaks disrupting supply, after the DOJ issued subpoenas to major egg producers to examine potential collusion, prices dropped sharply from $8 to $3 per dozen. Alford cited this as an example of the heightened risk that companies may engage in anticompetitive conduct under the cover of external pressures.

Companies in the consumer packaged goods (CPG) sector are particularly exposed to these risks. Especially when facing thin margins, concentrated supply chains, commoditized inputs, or inelastic demand, CPG firms may be perceived as being especially vulnerable to engaging in coordinated responses to external shocks, whether explicit or tacit. Jurisdictions outside the U.S. have already begun scrutinizing the pricing behavior of such companies for potentially using inflation as a pretext to coordinate higher prices. For example, the Korea Fair Trade Commission recently launched investigations into several CPG companies following claims of collusion to raise prices on confectionaries and beverages. In Australia, Prime Minister Anthony Albanese recently pledged to crack down on alleged price gouging by the country’s supermarkets if reelected.

This is not the first time regulators have taken a more aggressive posture during periods of systemic disruption. For example, during the COVID-19 pandemic, the DOJ and FTC issued joint guidance stating they were on the alert for individuals and businesses using the pandemic as “an opportunity to subvert competition or prey on vulnerable Americans.” The guidance further stated that the agencies would stand ready to “pursue civil violations of the antitrust laws, which include agreements between individuals and business to restrain competition through increased prices, lower wages, decreased output, or reduced quality as well as efforts by monopolists to use their market power to engage in exclusionary conduct,” as well as “prosecute any criminal violations of the antitrust laws, which typically involve agreements or conspiracies between individuals or businesses to fix prices or wages, rig bids, or allocate markets.”

In light of this renewed scrutiny, companies in the CPG and similar sectors should consider the following measures:

Document the bases for pricing decisions, to create a record reflecting the independence of and any business justifications for those decisions.

Train commercial teams to recognize and steer clear of “soft signals” of collusion, particularly in discussions of tariffs or supply shortages.

Review pricing and supply chain practices for potential antitrust sensitivities, and engage antitrust counsel when appropriate.

SPECIFIC VS. GENERAL PERMISSION: Jibrael Hindi Defends His Onslaught of TCPA Class Actions Before the FCC and His Argument is Kind of Interesting

So R.E.A.C.H. recently submitted a hard-hitting comment in support of an effort to shut down frivolous lawsuits arising from SMS messages sent outside the TCPA’s time limitations.

These messages generally arise when a consumer travels from one location to another and the caller is not aware of the changed location and sends messages based upon area codes that end up being inaccurate because, you know, people move around.
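The mechanism can be made concrete with a minimal Python sketch. It checks a send time against the 8 a.m. to 9 p.m. window, using a recipient time zone guessed from the area code.

Several elements are hypothetical simplifications rather than anyone's actual compliance logic:

- the function names;
- the two-entry area-code table;
- the use of fixed UTC offsets, which ignore daylight-saving changes.

Nothing here resolves the legal question of whether the window applies to messages sent with consent.

```python
from datetime import datetime, time, timedelta, timezone

# Quiet-hours window: no solicitations before 8 a.m. or after 9 p.m.
# at the called party's location.
WINDOW_START = time(8, 0)
WINDOW_END = time(21, 0)

# Hypothetical area-code table a sender might use to guess a recipient's location.
# Fixed offsets are a simplification (they ignore daylight-saving transitions).
AREA_CODE_OFFSETS = {
    "310": -7,  # Los Angeles area, assuming PDT
    "212": -4,  # New York City, assuming EDT
}


def guessed_offset(phone_number: str) -> int:
    """Guess the UTC offset from the first three digits (the weak point in practice)."""
    return AREA_CODE_OFFSETS[phone_number[:3]]


def within_permitted_hours(send_utc: datetime, utc_offset_hours: int) -> bool:
    """Convert a UTC send time to the recipient's local time and test the 8 a.m.-9 p.m. window."""
    local = send_utc.astimezone(timezone(timedelta(hours=utc_offset_hours)))
    return WINDOW_START <= local.time() <= WINDOW_END
```

Consider a text sent at 03:30 UTC to a 310 number. Based on the area code, the sender treats it as 8:30 p.m. in Los Angeles, inside the window. If the recipient has moved to New York, the same message lands at 11:30 p.m. local time, which is exactly the "people move around" scenario described above.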

R.E.A.C.H.’s comment is laser-focused on the language of the CFR that limits claims related to out-of-time messages to “solicitations,” and the definition of “solicitations” looks only at messages sent without prior express invitation or permission. It follows that a message sent with invitation or permission may be sent outside of the TCPA’s timing limitations.

Simple.

But not so fast.

Hindi has some context worth knowing. He is the guy behind hundreds of recent TCPA class actions against small businesses, and he also just bragged on social media about buying a 15-seat private jet. He counters that the CFR is unclear as to whether the permission must be general or specific in nature.

In his view of the world, a consumer who gives permission to receive text messages from a business impliedly gives only limited consent, i.e., consent to receive texts WITHIN the timing limitations of the TCPA. While a consumer may ask for texts outside of the timing window, such consent must be SPECIFIC as to the timing component.

Here is how he frames the issue:

The undersigned does recognize that text messages sent with “prior express invitation or permission” are not “telephone solicitations” under the TCPA and, thus, do not fall within the ambit of the Quiet Hours Provision. See 47 U.S.C. § 227(a)(4); see also 47 C.F.R. § 64.1200(c)(1). However, it bears repeating that the instant issue lies not in general invitation or permission, but rather the scope of such invitation or permission. Senders of text messages—who are in the best position to clarify the scope of invitation or permission—often leave the detail of message timing unaddressed and ambiguous by and through their own opt-in language. Indeed, in the undersigned’s experience, almost no sender of text message solicitations cares to obtain a consumer’s prior express invitation or permission to send texts “before 8 a.m. or after 9 p.m.” or at “any time.” This is a major issue for consumers, who reasonably believe they consent to messages at objectively normal hours but are instead bombarded with texts during objectively invasive hours.

Almost all consumers complain about quiet hours messages, even when they have given general express invitation or permission to receive texts. These consumers, including those without a legal background of any kind, often point out that they did not specifically consent to receiving messages “before 8 a.m. or after 9 p.m.” or “any time.” The average person is confused or, in some cases, outright enraged when they merely provide a company with their residential phone number and start receiving text messages in the middle of the night. Even Petitioners acknowledge this reality, noting that after-hours text messages can cause nuisance or annoyance for consumers.

Interesting, no?

Importantly, general vs. specific consent may have BIG consequences in other TCPA arenas as well. For instance, if the courts or “delete delete delete” proceedings dismantle the express consent rules in the CFR, we will be back to determining what “clearly and unmistakably stated” consent means for all purposes. That might mean consumers must specifically request to hear from a caller “using an autodialer” or “using prerecorded calls” or “using AI.”

While that is not much of a shift from today’s practice for telemarketers, it is a MASSIVE shift for informational calling, where such specific consent is not required. So there may be bigger issues afoot here.

Regardless I thought the response here was interesting enough to merit a quick blog.

Full response here: Jibrael Hindi