How to Report Cyber, AI, and Emerging Technologies Fraud and Qualify for an SEC Whistleblower Award

SEC Forms Cyber and Emerging Technologies Unit

On February 20, 2025, the SEC announced the creation of the Cyber and Emerging Technologies Unit (CETU) to focus on combatting cyber-related misconduct and to protect retail investors from bad actors in the emerging technologies space. In announcing the formation of the CETU, Acting Chairman Mark T. Uyeda said:

The unit will not only protect investors but will also facilitate capital formation and market efficiency by clearing the way for innovation to grow. It will root out those seeking to misuse innovation to harm investors and diminish confidence in new technologies.

As detailed below, the SEC’s press release identifies CETU’s seven priority areas to combat fraud and misconduct. Whistleblowers can provide original information to the SEC about these types of violations and qualify for an award if their tip leads to a successful SEC enforcement action. The largest SEC whistleblower awards to date are:

$279 million SEC whistleblower award (May 5, 2023)

$114 million SEC whistleblower award (October 22, 2020)

$110 million SEC whistleblower award (September 15, 2021)

$104 million SEC whistleblower award (August 4, 2023)

$98 million SEC whistleblower award (August 23, 2024)

SEC Whistleblower Program

Under the SEC Whistleblower Program, the SEC will issue awards to whistleblowers who provide original information that leads to successful enforcement actions with total monetary sanctions in excess of $1 million. A whistleblower may receive an award of between 10% and 30% of the total monetary sanctions collected. The SEC Whistleblower Program allows whistleblowers to submit tips anonymously if represented by an attorney in connection with their disclosure.
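The arithmetic behind that range can be sketched in a few lines of Python. This is only an illustration of the figures stated above (the $1 million threshold and the 10% to 30% band applied to sanctions collected); the function name `award_range` and its parameters are hypothetical, and the actual percentage is set by the SEC under its own rules.

```python
from decimal import Decimal

# Figures taken from the program description above.
THRESHOLD = Decimal("1000000")  # sanctions must exceed $1 million
MIN_RATE = Decimal("0.10")      # 10% floor
MAX_RATE = Decimal("0.30")      # 30% ceiling


def award_range(sanctions_ordered, sanctions_collected):
    """Return (low, high) potential award in dollars, or None if the
    total monetary sanctions do not exceed the $1 million threshold.

    Hypothetical helper: the threshold is tested against sanctions
    ordered, and the percentages are applied to sanctions collected.
    """
    ordered = Decimal(str(sanctions_ordered))
    collected = Decimal(str(sanctions_collected))
    if ordered <= THRESHOLD:
        return None
    return (collected * MIN_RATE, collected * MAX_RATE)


if __name__ == "__main__":
    low, high = award_range(5_000_000, 4_000_000)
    print(f"Potential award: ${low:,.0f} to ${high:,.0f}")
```

Under these assumptions, an action that orders $5 million in sanctions and collects $4 million would support an award somewhere between $400,000 and $1.2 million.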

In its short history, the SEC Whistleblower Program has had a tremendous impact on securities enforcement and has been replicated by other domestic and foreign regulators. Since 2011, the SEC has received an increasing number of whistleblower tips in nearly every fiscal year. In fiscal year 2024, the SEC received nearly 25,000 whistleblower tips and awarded over $225 million to whistleblowers.

The uptick in received tips, paired with the sizable awards given to whistleblowers, reflects the growth and continued success of the whistleblower program. See some of the SEC whistleblower cases that have resulted in large awards.

CETU Priority Areas for SEC Enforcement

The CETU will target seven areas of fraud and misconduct for SEC enforcement:

Fraud committed using emerging technologies, such as artificial intelligence (AI) and machine learning. For example, the SEC charged QZ Asset Management for allegedly falsely claiming that it would use its proprietary AI-based technology to help generate extraordinary weekly returns while promising “100%” protection for client funds. In a separate action, the SEC settled charges against investment advisers Delphia and Global Predictions for making false and misleading statements about their purported use of AI in their investment process.

Use of social media, the dark web, or false websites to perpetrate fraud. For example, the SEC charged Abraham Shafi, the founder and former CEO of Get Together Inc., a privately held social media startup known as “IRL,” for raising approximately $170 million from investors by allegedly fraudulently portraying IRL as a viral social media platform that organically attracted the vast majority of its purported 12 million users. In reality, IRL spent millions of dollars on advertisements that offered incentives to download the IRL app. Shafi hid those expenditures with offering documents that significantly understated the company’s marketing expenses and by routing advertising platform payments through third parties.

Hacking to obtain material nonpublic information. For example, the SEC brought charges against a U.K. citizen for allegedly hacking into the computer systems of public companies to obtain material nonpublic information and using that information to make millions of dollars in illicit profits by trading in advance of the companies’ public earnings announcements.

Takeovers of retail brokerage accounts. For example, the SEC charged two affiliates of JPMorgan Chase & Co. for failures including misleading disclosures to investors, breach of fiduciary duty, prohibited joint transactions and principal trades, and failures to make recommendations in the best interest of customers. According to the SEC’s order, a JPMorgan affiliate made misleading disclosures to brokerage customers who invested in its “Conduit” private funds products, which pooled customer money and invested it in private equity or hedge funds that would later distribute to the Conduit private funds shares of companies that went public. The order finds that, contrary to the disclosures, a JPMorgan affiliate exercised complete discretion over when to sell and the number of shares to be sold. As a result, investors were subject to market risk, and the value of certain shares declined significantly as JPMorgan took months to sell the shares.

Fraud involving blockchain technology and crypto assets. For example, the SEC brought charges against Terraform Labs and its founder Do Kwon for orchestrating a multibillion-dollar crypto asset securities fraud involving an algorithmic stablecoin and other crypto asset securities. In a separate action, the SEC brought charges against FTX CEO Samuel Bankman-Fried for a years-long fraud to conceal from FTX’s investors (1) the undisclosed diversion of FTX customers’ funds to Alameda Research LLC, his privately held crypto hedge fund; (2) the undisclosed special treatment afforded to Alameda on the FTX platform, including providing Alameda with a virtually unlimited “line of credit” funded by the platform’s customers and exempting Alameda from certain key FTX risk mitigation measures; and (3) undisclosed risk stemming from FTX’s exposure to Alameda’s significant holdings of overvalued, illiquid assets such as FTX-affiliated tokens.

Regulated entities’ compliance with cybersecurity rules and regulations. For example, the SEC settled charges against transfer agent Equiniti Trust Company LLC, formerly known as American Stock Transfer & Trust Company LLC, for failures to ensure that client securities and funds were protected against theft or misuse, which led to losses of millions of dollars in client funds.

Public issuer fraudulent disclosure relating to cybersecurity. For example, the SEC settled charges against software company Blackbaud Inc. for making misleading disclosures about a 2020 ransomware attack that impacted more than 13,000 customers. Blackbaud agreed to pay a $3 million civil penalty to settle the charges. In a separate action, the SEC settled charges against The Intercontinental Exchange, Inc. and nine wholly owned subsidiaries, including the New York Stock Exchange, for failing to timely inform the SEC of a cyber intrusion as required by Regulation Systems Compliance and Integrity.

How to Report Fraud to the SEC and Qualify for an SEC Whistleblower Award

To report a fraud (or any other violations of the federal securities laws) and qualify for an award under the SEC Whistleblower Program, the SEC requires that whistleblowers or their attorneys report the tip online through the SEC’s Tip, Complaint or Referral Portal or mail/fax a Form TCR to the SEC Office of the Whistleblower. Prior to submitting a tip, whistleblowers should consult with an experienced whistleblower attorney and review the SEC whistleblower rules to, among other things, understand eligibility rules and consider the factors that can significantly increase or decrease the size of a future whistleblower award.

Recent Executive Orders: What Employers Need to Know to Assess the Shifting Sands

In January 2025, President Trump issued a flurry of executive orders. Several may significantly impact employers; the key aspects of these orders are described below, although this is not an exhaustive summary of every provision.

1. Diversity, Equity, and Inclusion (DEI) Programs and Affirmative Action Compliance Obligations

The “Ending Illegal Discrimination and Restoring Merit-Based Opportunity” Executive Order contains many provisions that may significantly impact federal contractors and private employers. First, this order revoked Executive Order 11246 (E.O. 11246), which, among other things, required federal contractors to engage in affirmative action efforts, including developing affirmative action plans concerning women and minorities. In addition to revoking E.O. 11246, President Trump’s order requires that the Office of Federal Contract Compliance Programs (OFCCP) immediately cease promoting diversity, investigating federal contractors for compliance with their affirmative action efforts, and allowing or encouraging federal contractors to engage in workforce balancing based on race, color, sex, sexual preference, religion, or national origin. Further, the order states that federal contract recipients will be required to certify that they do not “operate any programs promoting diversity, equity, and inclusion (DEI) that violate any applicable Federal anti-discrimination laws.” This order does not impact affirmative action obligations concerning individuals with disabilities and protected veterans.

Second, private sector DEI efforts are also addressed in the order, which effectively states that the President believes such practices are illegal and violate civil rights and anti-discrimination laws. This order further provides that the Attorney General, in coordination with relevant agencies, must submit a report that identifies the most “egregious and discriminatory” DEI practices within the agency’s jurisdiction, including a plan to deter DEI programs or principles (whether the programs are denominated as DEI or not); identify up to nine potential civil compliance investigations of publicly traded corporations, large non-profits, large foundations, select associations and/or education institutions with endowments over one billion dollars; identify “other strategies to encourage the private sector to end illegal DEI discrimination;” and identify potential litigation and regulatory action or sub-regulatory guidance that would be appropriate.

In recent weeks, several corporations have rolled back or limited their DEI programs, presumably in anticipation of, or in reaction to, this order. Notably, the order does not prohibit all DEI policies and initiatives; rather, it impacts only those determined to be discriminatory and illegal, e.g., quotas or explicit preferences for women and/or minorities. Policies focusing on workplace inclusion, broadly defining diversity, and adhering to merit-based hiring may reduce the risk of violating this order.

2. Sex and Gender as Protected Characteristics

The “Defending Women From Gender Ideology Extremism and Restoring Biological Truth to the Federal Government” Executive Order redefines federal policy about sex and gender, stating that the federal government will only recognize sex (meaning biological sex – male or female) and not gender. This order directs federal agencies to end initiatives that support “gender ideology”; use the term “sex” not “gender” in federal policies and documents; enforce sex-based rights and protections using the order’s definition of “sex”; and rescind all agency guidance that is inconsistent with the order, including the Equal Employment Opportunity Commission’s “Enforcement Guidance on Harassment in the Workplace” (April 29, 2024), among others. This order also mandates that all government-issued identification documents, including visas, reflect the biological sex assigned at birth and seeks to limit the scope of the U.S. Supreme Court decision in 2020 that held that “sex discrimination” includes gender identity and sexual orientation. This order also directs the EEOC and U.S. Department of Labor (DOL) to prioritize enforcement of rights as defined by the order.

3. Artificial Intelligence

In 2023, former President Biden issued an executive order regarding the potential risks associated with artificial intelligence (AI), which resulted in the DOL releasing guidance on May 16, 2024, entitled “Department of Labor’s Artificial Intelligence and Worker Well-being: Principles for Developers and Employers.” On January 23, 2025, President Trump issued an executive order regarding AI entitled “Removing Barriers to American Leadership in Artificial Intelligence,” which rescinded President Biden’s order. President Trump’s order instructs federal advisors to review all federal agency responses to President Biden’s order and rescind those that are inconsistent with President Trump’s order. Accordingly, the DOL guidance and any other related federal agency guidance, including the 2024 AI guidance issued by the OFCCP, will be rescinded. Employers incorporating such guidance into their policies and practices should respond appropriately. Despite this change in the federal landscape, employers should keep in mind that several states have recently passed laws governing AI use in the workplace, which highlight that AI use may lead to violations of federal and state anti-discrimination laws.

Below are links to the relevant Executive Orders.

Executive Order 14173 – “Ending Illegal Discrimination and Restoring Merit-Based Opportunity”

Executive Order 14168 – “Defending Women From Gender Ideology Extremism and Restoring Biological Truth to the Federal Government”

Executive Order 14151 – “Ending Radical And Wasteful Government DEI Programs And Preferencing”

Executive Order 14179 – “Removing Barriers to American Leadership in Artificial Intelligence”

New Jersey Updates Discrimination Law: New Rules for AI Fairness

The New Jersey AG and the Division on Civil Rights’ new guidance on algorithmic discrimination explains how AI tools might be used in ways that violate the New Jersey Law Against Discrimination (NJLAD). The law applies to employers in New Jersey, and some of its requirements overlap with new state “comprehensive” privacy laws, in particular those laws’ requirements on automated decision-making. Those laws, however, typically do not apply in an employment context (with the exception of California). This New Jersey guidance (which mirrors what we are seeing in other states) is a reminder that privacy practitioners should keep in mind AI discrimination beyond the consumer context.

The division released the guidance last month (as reported in our sister blog) to assist businesses as they vet automated decision-making tools, in particular to avoid unfair bias based on protected characteristics like sex, race, religion, and military service. The guidance clarifies that the law prohibits “algorithmic discrimination,” which occurs when artificial intelligence (or an “automated decision-making tool”) creates biased outcomes based on protected characteristics. Key takeaways about the division’s position, as articulated in the guidance, are listed below, and can be added to practitioners’ growing rubric of requirements under the patchwork of privacy laws:

The design, training, or deployment of AI tools can lead to discriminatory outcomes. For example, the design of an AI tool may skew its decisions, or its decisions may be based on biased inputs. Similarly, data used to train tools may incorporate the developers’ biases, and the tools may reflect those biases in their outcomes. When a business deploys a new tool incorrectly, whether intentionally or unintentionally, the outcomes can create an iterative bias.

The mechanism or type of discrimination does not matter when it comes to liability. Whether discrimination occurs through a human being or through automated tools is immaterial when it comes to liability, according to the guidance. The division’s position is that if a covered entity discriminates, it has violated the NJLAD. Additionally, the type of discrimination, whether disparate impact or intentional discrimination, does not matter. Importantly, if an employer uses an AI tool that disproportionately impacts a protected group, then the employer could be liable.

AI tools might not consider reasonable accommodations and thus could result in a discriminatory outcome. The guidance points to specific incidents that could impact employers and employees. An AI tool that measures productivity may flag for discipline an individual who has timing accommodations due to a disability or a person who needs time to express breast milk. Without taking these factors into account, the result could be discriminatory.

Businesses are liable for algorithmic discrimination even if the business did not develop the tool or does not understand how it works. Given this position, employers, and other covered entities, need to understand the AI tools and automated decision-making processes and regularly assess the outcomes after deployment.

Steps businesses and employers can take to mitigate risk. The guidance recommends that there be quality control measures in place for the design, training, and deployment of any AI tools. Businesses should also conduct impact assessments and regular bias audits (both pre- and post-deployment). Employers and covered entities should provide notice about the use of automated decision-making tools.
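As a rough illustration of what one step in a post-deployment bias audit might look like, the Python sketch below compares selection rates across groups and flags any group whose rate falls below a chosen fraction of the highest rate. The guidance summarized above does not prescribe this or any other metric. The 0.8 default is an assumption borrowed from the familiar "four-fifths" screening heuristic, and the function names and sample data are hypothetical. A flag is a prompt for closer review, not a legal conclusion.

```python
def selection_rates(outcomes):
    """Map each group to its selection rate.

    `outcomes` maps a group label to a (selected, total) pair.
    Groups with no members evaluated are skipped.
    """
    return {
        group: selected / total
        for group, (selected, total) in outcomes.items()
        if total > 0
    }


def impact_ratios(outcomes):
    """Ratio of each group's selection rate to the highest group's rate."""
    rates = selection_rates(outcomes)
    top = max(rates.values(), default=0.0)
    if top == 0:
        return {}  # nobody was selected, so no ratio can be computed
    return {group: rate / top for group, rate in rates.items()}


def flag_groups(outcomes, threshold=0.8):
    """Return groups whose impact ratio is below `threshold` (assumed 0.8)."""
    ratios = impact_ratios(outcomes)
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical counts from an AI screening tool: (advanced, screened)
    sample = {"group_a": (50, 100), "group_b": (30, 100)}
    print(impact_ratios(sample))
    print(flag_groups(sample))
```

Running the same check before deployment (on test data) and periodically afterward (on real outcomes) is one way to operationalize the "pre- and post-deployment" audits the guidance recommends.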

Putting it into Practice: This new guidance may foreshadow a focus by the New Jersey division on employer use of AI tools. New Jersey is not the only state to contemplate AI use in the employment context. Illinois amended its employment law last year to address algorithmic bias in employment decisions. Privacy practitioners should not forget about these employment laws when developing their privacy requirements rubrics.

Joint Cybersecurity Advisory Released on Ghost (Cring) Ransomware

The Cybersecurity & Infrastructure Security Agency, the Federal Bureau of Investigation, and the Multi-State Information Sharing and Analysis Center released an advisory on February 19, 2025, providing information on Ghost ransomware activity.

According to the advisory, “Ghost actors conduct these widespread attacks targeting and compromising organizations with outdated versions of software and firmware on their internet facing services.” They use publicly available code to exploit Common Vulnerabilities and Exposures (CVEs) that have not been patched. The CVEs used by Ghost include CVE-2018-13379, CVE-2010-2861, CVE-2009-3960, CVE-2021-34473, CVE-2021-34523, and CVE-2021-31207.

The advisory urges organizations to:

Maintain regular system backups stored separately from the source systems, which cannot be altered or encrypted by potentially compromised network devices [CPG 2.R].

Patch known vulnerabilities by applying timely security updates to operating systems, software, and firmware within a risk-informed timeframe [CPG 2.F].

Segment networks to restrict lateral movement from initial infected devices and other devices in the same organization [CPG 2.F].

Require Phishing-Resistant MFA for access to all privileged accounts and email services accounts.

The advisory details how Ghost (Cring) is gaining initial access, executing applications, escalating privileges, obtaining credentials, evading defenses, moving laterally, and exfiltrating data. It also provides indicators of compromise and email addresses used by the threat actors.

Patching continues to be a crucial block-and-tackle technique, and timely patching is critical for mitigating exploitation. Blocking known malicious emails is a proven tactic to mitigate access. Review the advisory to ensure the applicable patches have been applied and the malicious emails associated with Ghost have been blocked.
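One way to start that review is to compare open vulnerability findings against the CVEs named in the advisory. The Python sketch below is a minimal illustration under stated assumptions: the CVE list is copied from the advisory summary above, while the function name `ghost_exposure`, the host names, and the input format (a mapping of host to CVE IDs reported as unpatched, for example from a vulnerability scanner export) are hypothetical.

```python
# CVE IDs named in the advisory summary above.
GHOST_CVES = frozenset({
    "CVE-2018-13379",
    "CVE-2010-2861",
    "CVE-2009-3960",
    "CVE-2021-34473",
    "CVE-2021-34523",
    "CVE-2021-31207",
})


def ghost_exposure(open_findings):
    """Return {host: [Ghost-related CVEs still open]} for affected hosts.

    `open_findings` maps a host name to an iterable of CVE IDs that a
    scanner reports as unpatched (hypothetical input format).
    """
    exposure = {}
    for host, cves in open_findings.items():
        # Normalize case and whitespace so IDs match reliably.
        normalized = {cve.strip().upper() for cve in cves}
        hits = sorted(GHOST_CVES & normalized)
        if hits:
            exposure[host] = hits
    return exposure


if __name__ == "__main__":
    findings = {
        "mail01": ["CVE-2021-34473", "CVE-2022-0001"],
        "web02": ["cve-2010-2861 "],
        "db03": [],
    }
    for host, cves in ghost_exposure(findings).items():
        print(f"{host}: patch {', '.join(cves)}")
```

A check like this only covers the CVEs listed here; the advisory itself remains the authoritative source for indicators of compromise and mitigations.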

Clarifying the Copyrightability of AI-Assisted Works

The U.S. Copyright Office’s long-awaited second report assessing the issues raised by artificial intelligence (AI) makes clear that purely AI-generated works cannot be copyrighted, and the copyrightability of AI-assisted works depends on the level of human creative authorship integrated into the work.

With the rise of mainstream generative AI platforms, clarity has been sought by creators, artists, producers, and technology companies concerning whether works created with AI may be entitled to copyright protection. In its most recent report, the Copyright Office concludes that existing copyright legislation and principles are well-suited for the issue of AI outputs’ copyrightability and suggests that AI may be used in the creation of copyrighted works as long as there is the requisite level of human creative expression. The Copyright Office’s report also makes clear that copyright protection will not extend to purely computer-generated works. Instead, copyrightability must be assessed on a case-by-case basis analyzing whether a work has the necessary human creative expression and originality to be copyrightable. Such intensive analysis equips existing U.S. copyright law to adapt to works made with emerging technologies.

In the process of crafting the report, the Copyright Office considered input from over 10,000 stakeholders seeking clarity on the protection of works for licensing and infringement purposes. This report does not address issues relating to fair use in training AI systems or copyright liability associated with the use of AI systems; these topics are expected to be covered in separate publications.

Report on Copyrightability of AI Outputs

The Copyright Office’s report examines the threshold question of copyrightability, or whether a work can be protected and endowed with rights that are enforceable against subsequent copiers, which raises important policy questions on the incentives of copyright law and the history of emerging technologies. Overall, the Copyright Office makes clear that tangential use of AI technology will not disqualify any subsequent work of authorship from protection, but rather the level of protection hinges on the nature and extent of the human expression added to the work.

I. Scope of the Report

The Copyright Office sought to clarify several overarching questions on the copyrightability of AI outputs, including:

Whether the Copyright Clause of the Constitution protects AI-generated works.

Whether AI can be the author of a copyrighted work.

Whether additional protection for AI-generated outputs is recommended and, if so, what form it should take.

Whether revisions to the human authorship standard are necessary.

II. The Copyrightability Standard and Current AI Technology

Human Authorship – There is a low level of human creativity or “authorship” needed to create copyright protection in a work, and the Copyright Office believes existing legal frameworks are relevant to the assessment of AI-generated outputs. Specifically, the Copyright Office believes that determining whether the authorship standard for copyrightability has been met depends on the level of human expressive intervention in the work.

For example, a photographer’s arrangement, lighting, timing, and post-production editing are all indications of the human expression required for copyright protection, even though, technologically, the camera “assists” in capturing the photo.[1] On the other hand, photos taken by animals do not create authorship in the animal because of their non-human status.[2] Similarly, “divine messages” from alleged spirits do not contain the requisite human creativity to amount to authorship.[3] In the context of AI, like a photographer using a camera, the use of new technology does not by default mean a lack of authorship, but like a monkey taking a picture, non-human machines cannot be authors and therefore the expressions created solely by AI platforms cannot be copyrighted.

Assistive AI – The report further comments on the incorporation of AI into creative tasks, like aging actors on film, adding or removing objects to a scene, or finding errors in software code, and concludes that protection of works using such technology would depend on how the system is being used by a human author and whether a human’s expression is captured by the resulting work.

Protection of Prompts – The Copyright Office concludes that prompts alone do not form a basis for claiming copyright protection in AI-generated outputs (no matter how complex they may seem), unless the prompt itself involves a copyrightable work. At its core, copyright law does not protect ideas because copyright seeks to promote the free flow of ideas and thought. Rather, copyright law protects unique human expressions of the underlying ideas which are fixed in some tangible medium. The Copyright Office explains that prompts do not provide sufficient human control to make AI users authors. Instead, prompts function as instructions that reflect a user’s conception of the idea but do not control the expression of that idea. Primarily, gaps between prompts and resulting outputs demonstrate the lack of control a user has over the expression of those ideas.

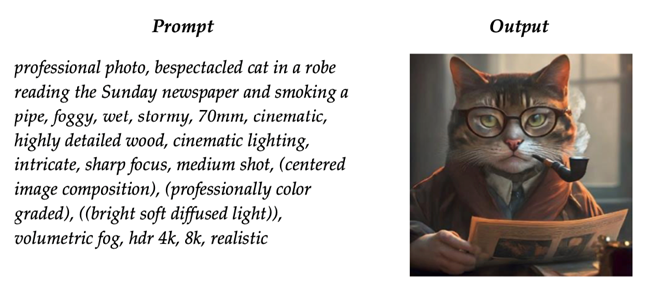

Expressive Inputs – The Copyright Office uses two examples in its report to illustrate this point. The first prompt, detailing the subject matter and composition of a cat smoking a pipe, was considered uncopyrightable because the AI system fills in the gaps of a user’s prompt. Here, the prompt does not specify the breed or coloring of the cat, its size, the pose, or what clothes it should be wearing underneath the robe. Without these particular instructions in the user’s prompts, the AI system still generated an image based on its own internal algorithm to fill in the gaps, thus stripping away expressive control from the user.

In contrast, the second prompt, asking the AI system to generate a photorealistic graphic of a human-drawn sketch, was considered copyrightable because the original elements of the sketch were retained in the AI-generated output. In assessing copyrightability, the Copyright Office pointed to the copyright in the original elements of the sketch as evidence of authorship, and any output depicting identifiable elements of the sketch (directed by the human author) was viewed by the Office as a derivative work of the sketch’s copyright. The artist’s protection in the AI output would overlap with the protectable elements in the original sketch, and like other derivative rights, the AI output would require a license to the original sketch.

In sum, where a human inputs their own copyrightable work into an AI system, they will be the author of that portion of the work still perceptible in the output; the individual elements must be identifiable and traceable to the initial human expression.

The Copyright Office views the current use of prompts as largely containing unprotectable (or public domain) ideas but notes that extensive human expression could potentially make prompts protectable, just not with currently available technology. Additionally, the Copyright Office notes that current technology is unpredictable and inconsistent, often producing vastly different outputs from the same prompts, which in its view shows that prompts lack the requisite clear direction of expression to rise to the level of human authorship.

Arrangement and Modification of AI Works – The Copyright Office also concludes that human authorship can be shown by the additions to, or arrangement of, AI outputs, including the use of AI adaptive tools. For example, a comic book “illustrated” with AI but with added original text by a human author was granted protection in the arrangement and expression of the images in addition to any copyrightable text because the work is the product of creative human choices. The same reasoning applies to AI-based editing tools that allow users to select and regenerate regions of an image with a modified prompt. Unlike prompts, the use of these tools enables users to control the expression of specific creative elements, but the Copyright Office clarifies that assessing the copyrightability of these modifications depends on a case-by-case determination.

III. International AI Copyright Decisions

In its review of international responses to AI copyright questions, the U.S. Copyright Office notes the general consensus of applying existing human authorship requirements to determine copyrightability of AI works.

Instructions from Japan’s Cultural Council underline the case-by-case basis necessary for assessing copyrightability and note examples of human AI input that may rise to a copyrightable level. These include the number and type of prompts given, the number of attempts to generate the ideal work, selection by the user, and any later changes to the work.

A court in China found that over 150 prompts, along with retouches and modifications to the AI’s output, resulted in sufficient human expression to gain copyright protection.

In the European Union, most member states agree that current copyright policy is equipped to cover the use of AI, and similar to the U.S., most member states require significant human input into the creative process to qualify for copyright protection.

Canada and Australia have both expressed a lack of clarity on the issue of AI, but neither has taken steps to change legislation.

Unlike other countries, some Commonwealth jurisdictions like the United Kingdom, India, New Zealand, and Hong Kong enacted laws before modern generative AI allowing for copyright protection for works created entirely by computers. With recent developments in technology, the United Kingdom has considered changing this law, but other countries have yet to clarify whether their existing laws would apply to AI-generated works.

IV. Policy Implications for Additional Protection

Incentives – One of the key components of copyright policy, as written in the U.S. Constitution, is to “promote the Progress of Science and useful Arts.” Comments to the Copyright Office varied on whether providing protection for AI-generated work would incentivize authorship; proponents of increasing copyright protection argued that it would promote emerging technologies, while opponents noted that the quick expansion of these technologies shows incentivization is not necessary. The Copyright Office finds the current legal framework sufficiently balanced, stating that additional laws are not needed to incentivize AI creation because the existing threshold requirement of human creativity already protects and incentivizes the works of human authorship that copyright law seeks to promote.

Staying Internationally Competitive – Commentators noted that without underlying copyright protection, U.S. creators would be impacted by weaker protection for AI-generated works. The Copyright Office counters that similar protections are available worldwide and align with the U.S.’s standards of human authorship.

Clarity on AI-Generated Protection – Commentators petitioned Copyright Office officials for some legal certainty that works created with AI could be licensed to other parties and be registered with the Copyright Office. The Copyright Office’s report provides assurance that works made with assistance from AI platforms may be registered under existing copyright laws and notes the difficulty of providing any further clarity due to the case-by-case nature of copyright analysis.

Conclusion and Considerations[4]

The foundations of U.S. copyright law have been applied consistently to emerging technologies, and the Copyright Office believes those doctrines will apply equally well to AI technologies. With the Copyright Office’s affirmation that purely AI-generated works cannot be copyrighted, and that AI-assisted works must involve meaningful human authorship, businesses leveraging AI systems must consider several key legal and strategic factors:

Maintain detailed records of human prompts and modifications, such as arranging, adapting, or refining AI outputs.

Focus on enhancing human-made, copyrightable works with AI systems rather than generating works solely through uncopyrightable prompts.

For companies commissioning AI-assisted work, specify in contracts that employees or contractors provide sufficient human control, arrangement, or modification of AI works to ensure copyrightability.

For companies offering AI-assisted work as part of their services, consider mitigating risks by excluding AI-generated works from standard IP representations/warranties, and further disclaiming any liability in relation to the use of such works.

Consider variations in international AI copyright laws to assess the impact on global IP strategies.

Given the unique analysis copyright cases require, and the existing precedent requiring human input for protection, copyright law is well prepared to face the challenges posed by AI platforms. Due to the unique facts of each case, creators are encouraged to check with an experienced copyright attorney who can help evaluate whether an individual AI-assisted work includes enough human intervention to be protectable.

[1] Burrow-Giles Litho. Co. v. Sarony, 111 U.S. 53, 55–57 (1884).

[2] Naruto v. Slater, No. 15-cv-04324, 2016 U.S. Dist. LEXIS 11041, at *10 (N.D. Cal. Jan. 28, 2016) (finding animals are not “authors” within the meaning of the Copyright Act).

[3] Urantia Found. v. Kristen Maaherra, 114 F.3d 955, 957–59 (9th Cir. 1997) (holding that copyright law does not intend to protect divine beings, and protects the arrangement of otherworldly messages, but not the messages’ content).

[4] As noted above, the Copyright Office’s report does not address issues relating to fair use in training AI systems or copyright liability associated with the use of AI systems; these topics are expected to be covered in a separate publication.

The ReAIlity of What an AI System Is – Unpacking the Commission’s New Guidelines

The European Commission has recently released its Guidelines on the Definition of an Artificial Intelligence System under the AI Act (Regulation (EU) 2024/1689). The guidelines were adopted in parallel with the Commission’s guidelines on prohibited AI practices (which also entered into application on February 2), with the goal of providing businesses, developers and regulators with further clarification on the AI Act’s provisions.

Key Takeaways for Businesses and AI Developers

Not all AI systems are subject to strict regulatory scrutiny. Companies developing or using AI-driven solutions should assess their systems against the AI Act’s definition. With these guidelines (and those on prohibited practices), the European Commission is delivering on the need to clarify the core element of the act: what is an AI system?

The AI Act defines an AI system as a machine-based system designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment. The system, for explicit or implicit objectives, infers from input data how to generate outputs – such as predictions, content, recommendations or decisions – that can influence physical or virtual environments.

One of the most significant clarifications in the guidelines is the distinction between AI systems and “traditional software.”

AI systems go beyond rule-based automation and require inferencing capabilities.

Traditional statistical models and basic data processing software, such as spreadsheets, database systems and manually programmed scripts, do not qualify as AI systems.

Simple prediction models that use basic statistical techniques (e.g., forecasting based on historical averages) are also excluded from the definition.

This distinction ensures that compliance obligations under the AI Act apply only to AI-driven technologies, leaving other software solutions outside of its scope.

Below is a breakdown of what the guidelines bring for each of the seven components:

Machine-based systems – AI systems rely on computational processes involving hardware and software components. The term “machine-based” emphasizes that AI systems are developed with and operate on machines, encompassing physical elements such as processing units, memory, storage devices and networking units. These hardware components provide the necessary infrastructure for computation, while software components include computer code, operating systems and applications that direct how the hardware processes data and performs tasks. This combination enables functionalities like model training, data processing, predictive modeling, and large-scale automated decision-making. Even advanced quantum computing systems and biological or organic systems qualify as machine-based if they provide computational capacity.

Varying levels of autonomy – AI systems can function with some degree of independence from human intervention. This autonomy is linked to the system’s capacity to generate outputs such as predictions, content, recommendations or decisions that can influence physical or virtual environments. The AI Act clarifies that autonomy involves some independence of action, excluding systems that require full manual human involvement. Autonomy also spans a spectrum – from systems needing occasional human input to those operating fully autonomously. This flexibility allows AI systems to interact dynamically with their environment without human intervention at every step. The degree of autonomy is a key consideration for determining if a system qualifies as an AI system, impacting requirements for human oversight and risk-mitigation measures.

Potential adaptiveness – Some AI systems change their behavior after deployment through self-learning mechanisms, though this is not a mandatory criterion. This self-learning capability enables systems to automatically learn, discover new patterns or identify relationships in the data beyond what they were initially trained on.

Explicit or implicit objectives – The system operates with specific goals, whether predefined or emerging from its interactions. Explicit objectives are those directly encoded by developers, such as optimizing a cost function or maximizing cumulative rewards. Implicit objectives, however, emerge from the system’s behavior or underlying assumptions. The AI Act distinguishes between the internal objectives of the AI system (what the system aims to achieve technically) and the intended purpose (the external context and use-case scenario defined by the provider). This differentiation is crucial for regulatory compliance, as the intended purpose influences how the system should be deployed and managed.

Inferencing capability – AI systems must infer how to generate outputs rather than simply executing manually defined rules. Unlike traditional software systems that follow predefined rules, AI systems reason from inputs to produce outputs such as predictions, recommendations or decisions. This inferencing involves deriving models or algorithms from data, either during the building phase or in real-time usage. Techniques that enable inference include machine learning approaches (supervised, unsupervised, self-supervised and reinforcement learning) as well as logic- and knowledge-based approaches.

Types of outputs – AI systems generate predictions, content, recommendations or decisions that shape both their physical and virtual environments. Predictions estimate unknown values based on input data; content generation creates new materials like text or images; recommendations suggest actions or products; and decisions automate processes traditionally managed by human judgement. These outputs differ in the level of human involvement required, ranging from fully autonomous decisions to human-evaluated recommendations. By handling complex relationships and patterns in data, AI systems produce more nuanced and sophisticated outputs compared to traditional software, enhancing their impact across diverse domains.

Environmental influence – Outputs must have a tangible impact on the system’s physical or virtual surroundings, exposing the active role of AI systems in influencing the environment they operate within. This includes interactions with digital ecosystems, data flows and physical objects, such as autonomous robots or virtual assistants.
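The seven elements above can be turned into a rough first-pass screening aid. The sketch below is only an illustration: the class, field and function names are hypothetical, and the guidelines stress that the definition should not be applied mechanically, so a result here is at most a prompt for closer legal review and not a determination under the AI Act.

```python
from dataclasses import dataclass, fields


@dataclass
class SystemProfile:
    """Hypothetical self-assessment answers for one system.

    Field names are illustrative labels for the seven elements discussed
    above; they are not terms defined by the AI Act or the guidelines.
    """
    machine_based: bool
    some_autonomy: bool
    adaptive_after_deployment: bool  # optional element per the guidelines
    has_objectives: bool
    infers_outputs: bool
    produces_outputs: bool
    influences_environment: bool


# Adaptiveness is described above as "not a mandatory criterion",
# so it is recorded but never counted as a missing element.
OPTIONAL = {"adaptive_after_deployment"}


def missing_elements(profile: SystemProfile) -> list[str]:
    """Return the non-optional elements the profile answers 'no' to."""
    return [
        f.name
        for f in fields(profile)
        if f.name not in OPTIONAL and not getattr(profile, f.name)
    ]


def needs_legal_review(profile: SystemProfile) -> bool:
    """Flag a system for closer review when no required element is missing."""
    return not missing_elements(profile)


# Assumed example: a manually programmed script with fixed rules.
rule_based_script = SystemProfile(
    machine_based=True, some_autonomy=False, adaptive_after_deployment=False,
    has_objectives=True, infers_outputs=False, produces_outputs=True,
    influences_environment=True,
)

# Assumed example: a trained recommender that does not keep learning in production.
recommender = SystemProfile(
    machine_based=True, some_autonomy=True, adaptive_after_deployment=False,
    has_objectives=True, infers_outputs=True, produces_outputs=True,
    influences_environment=True,
)

print(missing_elements(rule_based_script))
print(needs_legal_review(recommender))
```

In this sketch the rule-based script is screened out because it lacks autonomy and inference, while the recommender is flagged for review even though it is not adaptive, mirroring the point that adaptiveness is optional.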

Why These Guidelines Matter

The AI Act introduces a harmonized regulatory framework for AI developed or used across the EU. Core to its scope of application is the definition of “AI system” (which then spills over onto the scope of regulatory obligations, including restrictions on prohibited AI practices and requirements for high-risk AI systems).

The new guidelines serve as an interpretation tool, helping providers and stakeholders identify whether their systems qualify as AI under the act. Among the key takeaways is the fact that the definition is not to be applied mechanically, but should consider the specific characteristics of each system.

AI systems are a reA(I)lity; if you have not started assessing the nature of the one you develop or the one you procure, now is the time to do so. While the EU AI Act might be considered by many as having missed its objective (a human-centric approach to AI that fosters innovation and sets a level playing field), it is here to stay (and its phased application is on track).

Google Removes Gemini AI Assistant from Main iOS App: What It Means for Users and the Future of AI.

Alphabet Inc.’s Google has made a significant move by removing its AI assistant, Gemini, from the main app on iOS devices. Users were informed via email that Gemini is no longer available in the Google app, […]

Prosecutorial Reset: NLRB Acting General Counsel Rescinds Biden Guidance Memoranda En Masse

Rather than wait for the appointment of a new General Counsel following President Trump’s discharge of both the previous General Counsel and the then-Acting General Counsel, the National Labor Relations Board’s current Acting General Counsel, William B. Cowen, on February 14, 2025, rescinded nearly all of the Biden administration General Counsel’s substantive prosecutorial guidance memos, suggesting that his motivation related to the Agency’s workload.

While these memoranda do not have the weight of law or regulation, they do set out the agency’s priorities and key interpretations of the National Labor Relations Act. As a result, the rescission marks a (not unexpected) complete reversal of the prosecutorial focus of the Office of the General Counsel from General Counsel Abruzzo’s tenure.

There were generally two types of rescissions. Some of the memos were rescinded in full, while others were rescinded “pending further guidance” – suggesting those areas where the new administration will be placing its focus.

The Acting GC’s memorandum did not address the impact of the NLRB’s current lack of a quorum on the Acting GC’s prosecutorial agenda. President Trump’s unprecedented firing of former NLRB Chair Gwynne Wilcox, which deprived the NLRB of a quorum, is currently being litigated.

The key rescinded memoranda and their policy impact are summarized below.

Abruzzo GC Memoranda Rescinded in Full

Rescinded General Counsel Memoranda | Topic and Relevant Policy
Memorandum GC 21-01 | Offered guidance on mail-ballot elections; rescinded because “COVID-19 is no longer a Federal Public Health Emergency.”
Memorandum GC 21-02 | Rescinded prior memos, including those that provided guidance on employment handbook rules, decertification petitions, and duty of fair representation cases, among other things.
Memorandum GC 21-03 | Advocated greater enforcement of Section 7 rights regarding workplace health and safety in light of COVID-19.
Memorandum GC 21-08 | Endorsed prosecuting universities that did not classify student-athletes as employees under the NLRA.
Memorandum GC 22-06 | Offered an update on NLRB regional offices seeking broader remedies when prosecuting unfair labor practices (e.g., consequential damages, employer letters of apology).
Memorandum GC 23-02 | Advocated prosecuting employers who used AI and algorithms in a way that could chill employee Section 7 activity.
Memorandum GC 23-05 | Endorsed prosecuting employers that imposed on employees broadly worded severance agreements with expansive non-disparagement and confidentiality clauses.
Memorandum GC 23-08 | Advocated prosecuting employers that imposed on employees noncompetition agreements outside limited cases.
Memorandum GC 24-04 | Supported seeking full remedies (e.g., increased healthcare costs, lost pension contributions) for employees in unfair labor practice charge settlements with employers.
Memorandum GC 24-05 | Proposed continuing to seek Section 10(j) injunctive relief against employers despite the higher procedural bar set by the Supreme Court in Starbucks Corp. v. McKinney.
Memorandum GC 25-01 | Advocated prosecuting employers who imposed on employees stay-or-pay provisions (e.g., training repayment agreement provisions, quit fees, sign-on bonuses).

Abruzzo Memoranda Rescinded – Pending Further Guidance

Rescinded General Counsel Memoranda | Topic and Relevant Policy
Memorandum GC 21-05 | Advocated Board prosecutors seek Section 10(j) injunctive relief to protect Section 7 rights from “remedial failure due to the passage of time.”
Memorandum GC 21-06 | Endorsed NLRB regional offices seeking a “full panoply” of make-whole remedies, including “consequential damages to make employees whole for economic losses (apart from the loss of pay or benefits)”, such as credit card late fees or higher healthcare costs, in unfair labor practice cases.
Memorandum GC 21-07 | Proposed Board prosecutors seek expanded remedies in formal and informal settlements, including consequential damages, front pay, work authorization sponsorship for immigrant workers, and employer letters of apology, among others.
Memorandum GC 22-01 | Supported ensuring that immigrant workers’ Section 7 rights were protected, including by NLRB regional offices pursuing deferred action, parole, and a stay of removal, among other things, when immigrants allege they suffered unfair labor practices.
Memorandum GC 22-02 | Endorsed NLRB regional offices seeking Section 10(j) injunctive relief in response to employers allegedly committing unfair labor practices during union organizing campaigns.
Memorandum GC 24-01 | Offered guidance to Board prosecutors seeking a Cemex bargaining order against employers that allegedly fail to recognize and bargain with unions.
Memorandum GC 25-04 | Provided insight on the interaction between federal anti-discrimination and labor law, including in cases where an employee engages in Section 7 activity that may be discriminatory.

As always, we will continue to monitor developments related to the Board and provide updates as they develop.

Fair Warning: AI’s First Copyright Fair Use Ruling, Thomson Reuters v. ROSS

Early last week, the first substantive US ruling on fair use in AI-related copyright litigation, Thomson Reuters v. ROSS Intelligence, No. 1:20-cv-00613 (D. Del.), was issued by Judge Stephanos Bibas. This landmark opinion marks a significant development in AI litigation, particularly concerning the use of copyrighted materials in training AI models.

However, while this decision focuses on the training of an AI model, it does not involve generative AI technology. Hence, it will be important for AI developers and deployers to continue to monitor future decisions that address whether the subsequent steps of generating and distributing AI-generated content are considered fair use of the original works.

Key Takeaways

The court held that ROSS’s copying of Thomson Reuters’ content to build a competing AI-based legal platform is not fair use under the US Copyright Act.

The court found actual copying and substantial similarity of 2,243 Westlaw headnotes.

The court rejected ROSS’s defenses of innocent infringement, copyright misuse, merger, and scènes à faire.

ROSS’s commercial use weighed heavily against its fair use defense.

The court vacated its previous denial of summary judgment on the issue of fair use.

Background

Thomson Reuters owns Westlaw, one of the largest legal research platforms in the US. Through a subscription, Westlaw users are able to access a wide range of resources, including case law, state and federal statutes, state and federal regulations, practical guides, news, law review articles, legislative histories, and trial transcripts. A key feature of Westlaw is its headnotes, which summarize the key points of legal opinions. Additionally, Westlaw includes the “Key Number System,” which organizes legal opinions.

ROSS Intelligence, a competitor, sought to license Westlaw’s content to develop its own legal AI-based tool. After Thomson Reuters refused, ROSS obtained “Bulk Memos,” created using Westlaw’s headnotes, through a third-party legal services vendor. Thomson Reuters discovered this and sued ROSS for copyright infringement based on its use of Westlaw content to train its AI model.

Overview of the Case

The court granted partial summary judgment to Thomson Reuters on direct copyright infringement, fair use, and other defenses, and denied ROSS’s summary judgment motions. Its fair use analysis applied the four factors set out in the US Copyright Act (17 U.S.C. § 107):

(1) the purpose and character of use, including whether the use is of a commercial nature or for nonprofit educational purposes;

(2) the nature of the copyrighted work;

(3) the amount and substantiality of the portion used in relation to the copyrighted work as a whole; and

(4) the likely effect of the use on the potential market for the copyrighted work.

Thomson Reuters prevailed on the first factor. In examining the purpose and character of ROSS’s use, the court focused on whether the use was commercial and transformative. ROSS acknowledged that its use was commercial but argued that it was transformative, as the headnotes in question were allegedly “transformed” into numerical data representing the relationship among legal words in its AI system. The court disagreed, noting that ROSS’s use did not have a further purpose or different character from Thomson Reuters’ use. ROSS also argued that its use was permissible under the doctrine of “intermediate copying,” but again the court disagreed, noting that the cited cases were inapt because they involved copying of computer code rather than written words and the code copying was necessary for competitors to innovate. By contrast, use of the headnotes was not necessary to achieve ROSS’s desired purpose.

The court resolved factor two in ROSS’s favor, finding that although Westlaw’s material has the minimal required originality, it is not highly creative. Further, while the headnotes involve some editorial creativity, the Key Number System is a factual compilation with limited creativity.

The court also ruled in favor of ROSS on factor three, despite the number of headnotes used, because the material available to the public did not include the Westlaw headnotes. According to the court, what matters is not the amount and substantiality of the portion used in making a copy, but rather the amount and substantiality of what is thereby made accessible to the public for which it may serve as a competing substitute. It determined there was no factual dispute, as ROSS’s output did not include Westlaw headnotes.

Finally, and most important in this case, the court emphasized that because ROSS could have developed its own product without infringing Thomson Reuters’ copyrights, the fourth factor weighs in favor of Thomson Reuters. The court examined the likely effect of ROSS’s copying on the market for Westlaw’s product and, while initially considering whether ROSS’s use served a different purpose by creating a new research platform, ultimately concluded that ROSS intended to compete with Westlaw and failed to prove otherwise. Courts have differed over the years on whether the first or the fourth fair use factor is the most important; here, analysis of the fourth is crucial (but both favored Thomson Reuters).

Looking Ahead

The implications of this decision for AI copyright litigation and fair use arguments are significant.

First, many practitioners have been waiting for a decision on whether creating an AI model is considered transformative and fair use, particularly since AI models store their intelligence as numerical weights that are updated during the training process. But even with such advanced technology, the court in this case declined to hold that the use was transformative, based largely on its ultimate purpose of competing with the owner of the original works.

Also noteworthy is that the court declined to find fair use for an AI technology that is not generative AI. Even though the output from ROSS’s AI system was uncopyrighted verbatim quotes from court opinions (and not the original copyrighted headnotes), there was no fair use.

This could have broader consequences for large language models (LLMs) and generative AI technologies. When judges in other pending generative AI cases consider both the training step (as in ROSS) and the output generation step for a generative AI technology (e.g., an AI-generated image), it could be even less likely that fair use will apply. Here, the court emphasized that “factor four is undoubtedly the single most important element of fair use.” So, if AI-generated content, including that produced by LLMs, is substantially similar to an original work and has a detrimental effect on the market for the original work (e.g., puts an artist out of business), a finding of fair use may be less likely.

Our “fair warning” is this: AI developers and deployers should continue to monitor ongoing AI litigation, while considering the market implications of the use of copyrighted materials for training AI models or distributing AI-generated output. Because fair use is heavily dependent upon the facts, we anticipate different rulings from different courts, particularly where the commercial use of the original content is not as clear-cut as here.

The State of the Funding Market for AI Companies: A 2024 – 2025 Outlook

Artificial intelligence (AI) has emerged as an influential technology, driving notable investments across various industries in recent years. In 2024, venture capital (VC) funding for AI companies reached record levels, signaling ongoing interest and optimism in the sector’s potential. Looking ahead, 2025 is anticipated to bring continued innovation, with promising funding opportunities and a growing IPO market for AI-driven businesses.

VC Funding in 2024: A Year of Growth

Global VC investment in AI companies saw remarkable growth in 2024, as funding to AI-related companies exceeded $100 billion, an increase of over 80% from $55.6 billion in 2023. Nearly 33% of all global venture funding was directed to AI companies, making artificial intelligence the leading sector for investments. This marked the highest funding year for the AI sector in the past decade, surpassing even peak global funding levels in 2021. This growth also reflects the increasing adoption of AI technologies across diverse sectors, from healthcare to transportation and more, and the growing confidence of investors in AI’s transformative potential.

Industries Attracting Funding

The surge in global venture capital funding for AI companies in 2024 was driven by diverse industries adopting AI to innovate and solve complex problems. This section explores some of the industries that captured significant investments and highlights their transformative potential.

Generative AI: Generative AI, which includes technologies capable of creating text, code, images, and synthetic data, has experienced a remarkable surge in investment. In 2024, global venture capital funding for generative AI reached approximately $45 billion, nearly doubling from $24 billion in 2023. Late-stage VC deal sizes for GenAI companies have also skyrocketed from $48 million in 2023 to $327 million in 2024. The growing popularity of consumer-facing generative AI programs like Google’s Bard and OpenAI’s ChatGPT has further fueled market expansion, with Bloomberg Intelligence projecting the industry to grow from $40 billion in 2022 to $1.3 trillion over the next decade. As a result, venture capitalists are increasingly focusing on GenAI application companies, that is, businesses that build specialized software using third-party foundation models for consumer or enterprise use. This new wave of AI identifies patterns in input data and generates realistic content that mimics the features of its training data. Models like ChatGPT generate coherent and contextually relevant text, while image-generation tools such as DALL-E create unique visuals from textual descriptions.

Healthcare and Biotechnology: The healthcare and biotechnology industries have seen a significant surge in AI integration, with startups harnessing the power of artificial intelligence for diagnostics, drug discovery, and personalized medicine. In 2024, these AI-driven companies captured a substantial share of venture capital funding. Overall, venture capital investment in healthcare rose to $23 billion, up from $20 billion in 2023, with nearly 30% of the 2024 funding directed toward AI-focused startups. Specifically, biotechnology AI attracted $5.6 billion in investment, underscoring the growing confidence in AI’s ability to revolutionize healthcare solutions. As AI continues to evolve, its impact on diagnostics and personalized treatments is expected to shape the future of patient care, driving innovation across the sector.

Financial Technology: Fintech, short for financial technology, refers to the use of innovative technologies to enhance and automate financial services. It includes areas like digital banking, payments, lending, and investment management, offering more efficient, accessible, and cost-effective solutions for consumers and businesses. In recent years, AI has become an important tool in fintech, helping to improve customer service through chatbots, enhance fraud detection with machine learning algorithms, automate trading, and personalize financial advice. While overall fintech investment in 2024 has dropped to around $118.2 billion, down from $229 billion in 2021, AI in fintech remains a high-growth area, valued at $17 billion in 2024 and projected to reach $70.1 billion by 2033. This reflects a strong and sustained interest in leveraging AI to revolutionize financial services despite broader investment slowdowns in the sector.
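The growth figures quoted in this section can be sanity-checked with two small formulas: simple percentage change and compound annual growth rate (CAGR). The sketch below uses only the dollar amounts stated above; the helper names are illustrative, and the time spans (nine years for AI in fintech from 2024 to 2033, ten years for the Bloomberg Intelligence projection) are assumptions read from the text rather than figures given by the cited sources.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new (negative means a decline)."""
    return (new - old) / old * 100


def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate, in percent, over the given number of years."""
    return ((end / start) ** (1 / years) - 1) * 100


# Year-over-year changes, amounts in billions of dollars unless noted.
changes = {
    "AI VC funding, 2023 to 2024": pct_change(55.6, 100),
    "Generative AI VC funding, 2023 to 2024": pct_change(24, 45),
    "Late-stage GenAI deal size ($M), 2023 to 2024": pct_change(48, 327),
    "Healthcare VC funding, 2023 to 2024": pct_change(20, 23),
    "Fintech investment, 2021 to 2024": pct_change(229, 118.2),
}

# Implied annual growth rates; the year counts are assumptions (see lead-in).
implied = {
    "AI in fintech, 2024 to 2033": cagr(17, 70.1, 9),
    "Generative AI market, 2022 over ten years": cagr(40, 1300, 10),
}

for label, value in {**changes, **implied}.items():
    print(f"{label}: {value:.1f}%")
```

Because the text says AI funding "exceeded" $100 billion, the first result is a lower bound, which is consistent with the stated increase of over 80%.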

Trends for 2025

In 2025, VC investments in AI companies are continuing the momentum from previous years. Global venture funding totaled $26 billion in January 2025, of which AI-related companies garnered $5.7 billion, accounting for 22% of overall funding. However, despite the continued interest in AI investment, the investment strategies in 2025 may evolve from the approaches seen in 2024, as market dynamics shift and investors adapt to new challenges and opportunities.

In 2024, the investment strategy was heavily characterized by aggressive funding and rapid scaling. Investor focus appeared to be on capitalizing on the hype around AI technology, leading to substantial valuations and rapid deal cycles. The strategy was primarily characterized by pure innovation, with VCs eager to back groundbreaking technologies regardless of immediate profitability. This led to significant investments in cutting-edge research and experimental applications. However, this approach often led to inflated valuations.

On the other hand, the investment landscape in 2025 is expected to shift with VCs adopting more disciplined and strategic investment approaches. The focus now appears to be on sustainable growth and profitability. Investors are predicted to become more selective, favoring companies with solid fundamentals and proven business models to navigate economic uncertainties.

Regulatory concerns are also playing an increasingly significant role in shaping VC investment strategies in AI. Governments worldwide are ramping up efforts to regulate AI technologies to address issues such as data privacy, algorithmic bias, and security risks. In the United States, for instance, regulatory scrutiny is intensifying, with lawmakers proposing new frameworks to ensure transparency and accountability in AI algorithms. This includes discussions about mandating audits of high-risk AI systems and potentially introducing liability rules for AI-generated content. These evolving regulatory landscapes are contributing to market unpredictability, as startups may face heightened compliance burdens and legal uncertainties. As a result, while the enthusiasm for AI investments remains high, the 2025 strategy is marked by increased due diligence and a more calculated approach, reflecting a growing emphasis on navigating complex regulatory landscapes.

Resurgence of Initial Public Offerings (IPOs):

In 2025, the IPO market for AI companies is expected to be a significant area of focus, driven by a combination of strong growth in the sector and favorable market conditions. A major window for the IPO market could be opening. Analysts attribute this rebound to factors such as markets reaching new highs, stabilized interest rates, a strong economy, and a clearer understanding of the new administration’s plans following the recent election. The favorable market environment for these companies is supported by a solid U.S. economy, which is expected to grow by 2.3% in 2025.

Several major AI players are preparing to enter the public markets. One of the most anticipated IPOs is that of Databricks, an AI-driven data analytics platform that has raised nearly $14 billion in funding, most recently at a $62 billion valuation. The company has expressed intentions to go public in 2025, indicating a favorable outlook for the sector. Additionally, companies like CoreWeave, an AI cloud platform based in New Jersey, are expected to follow with their own IPOs later in the year, further fueling the optimism around AI investments. Crunchbase News highlights that there are at least 13 other AI startups with strong IPO potential in 2025. This IPO pipeline is a reflection of the broader momentum within the AI sector.

Despite these positive indicators, economic challenges such as trade tensions, inflationary pressures, and concerns over policy decisions add a layer of complexity to the market. For example, trade tensions could contribute to rising manufacturing costs, which could put pressure on companies that rely on global supply chains. Tariffs could contribute to inflationary pressures, which could dampen consumer spending and overall economic growth. These challenges highlight the need for companies to navigate an evolving landscape where trade policies and inflationary concerns could impact their growth trajectories.

Despite these hurdles, the IPO market remains buoyed by investor confidence, particularly in AI. As AI companies continue to develop new applications across industries, the appetite for public offerings remains strong. The favorable market environment for these companies suggests that AI will be a key focus for investors seeking sustainable growth opportunities in 2025.

Conclusion

The AI funding landscape in 2024 demonstrated the technology’s transformative potential across industries. As we move into 2025, investors and companies alike will need to navigate evolving market dynamics and regulatory landscapes. The IPO market, too, holds promise, provided companies are well-prepared to meet investor expectations surrounding sustainable growth and profitability.

AI and Blockchain – 1+1=3

Individually, AI and blockchain are among the hottest, most transformative technologies. Collectively, they are incredibly synergistic – hence the 1+1=3 concept in the title. We are seeing more examples of how the two will interact. Over time, the level of interaction will be extensive. Many projects are being developed that bring the power of AI to blockchain applications and vice versa. One of these projects that has garnered significant attention is the Virtuals Protocol. The project launched in October 2024 via integration with Base, an Ethereum layer-2 network. Just recently, the project announced that it is expanding to Solana.

The Virtuals Protocol is a decentralized platform for buying, trading, and creating AI agents. It transforms AI agents into tokenized, revenue-generating assets. By leveraging blockchain technology, Virtuals Protocol enables users to create, co-own, and interact with AI agents, expanding the agents' potential across various applications.

AI agents are software programs that can interact with their environment, collect data, and use the data to perform self-determined tasks to meet predetermined goals. Humans set goals, but an AI agent independently chooses the best actions it needs to perform to achieve those goals. See “What are AI Agents?” for more information.

How Virtuals Protocol Works

The Virtuals Protocol integrates AI agents, blockchain infrastructure, and tokenization to create a scalable, decentralized ecosystem. Here’s a breakdown of how it operates:

Agent Tokenization: AI agents are minted as ERC-20 tokens with fixed supplies, paired with $VIRTUAL in locked liquidity pools. These tokens are deflationary through buyback-and-burn mechanisms.

G.A.M.E Framework: Agents utilize multimodal AI capabilities, such as text generation, speech synthesis, gesture animation, and blockchain interactions. This framework allows agents to adapt in real-time.

Revenue Routing: Agents earn revenue through inference fees, app integrations, or user interactions. The proceeds flow into their on-chain wallets for buybacks or treasury growth.

Memory Synchronization: Agents retain cross-platform memory through a Long-Term Memory Processor, ensuring user-specific, contextual continuity.

Decentralized Validation: Contributions and model updates are governed by a Delegated Proof of Stake (DPoS) system, ensuring agent performance aligns with community standards.

On-Chain Wallets: Each agent operates an ERC-6551 wallet, enabling autonomous transactions, asset management, and financial independence.
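The tokenization and revenue-routing steps above can be illustrated with a toy model. This is a minimal sketch under stated assumptions, not the protocol's actual contract logic: the `AgentToken` class, its method names, and the idea of pricing the buyback at a single quoted `price` are all hypothetical and chosen only to show why a fixed supply combined with buyback-and-burn is deflationary.

```python
from dataclasses import dataclass


@dataclass
class AgentToken:
    """Hypothetical toy model of a tokenized agent (illustration only)."""

    supply: float                 # tokens outstanding; fixed at mint, reduced only by burns
    wallet_balance: float = 0.0   # revenue held in the agent's wallet, in $VIRTUAL

    def route_revenue(self, amount: float) -> None:
        """Credit revenue (e.g. inference fees) to the agent's wallet."""
        if amount < 0:
            raise ValueError("revenue cannot be negative")
        self.wallet_balance += amount

    def buyback_and_burn(self, spend: float, price: float) -> float:
        """Spend wallet funds to buy tokens at `price` and burn them.

        `price` is an assumed quote in $VIRTUAL per agent token; a real
        liquidity pool would move the price as the purchase executes.
        Returns the number of tokens burned.
        """
        if price <= 0:
            raise ValueError("price must be positive")
        if spend < 0 or spend > self.wallet_balance:
            raise ValueError("spend must be between 0 and the wallet balance")
        burned = spend / price
        if burned > self.supply:
            raise ValueError("cannot burn more tokens than exist")
        self.wallet_balance -= spend
        self.supply -= burned
        return burned


agent = AgentToken(supply=1_000_000.0)
agent.route_revenue(500.0)                       # fees flow into the wallet
burned = agent.buyback_and_burn(200.0, price=0.5)
print(burned, agent.supply, agent.wallet_balance)
```

Because no method ever increases `supply`, every buyback shrinks the number of tokens outstanding, which is the sense in which the tokens are described as deflationary.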

What Virtuals Do

The Virtuals Protocol redefines digital engagement across gaming, entertainment, and decentralized economies. By simplifying AI adoption, rewarding contributors, and lowering barriers for non-experts, it creates a scalable ecosystem that delivers value for stakeholders. The platform’s agents collectively hold a valuation of over $850 million at the time of publishing, led by Mentigent and aidog_agent. Ownership of these two tokenized AI agents is fractionalized; each is held by more than 200 owners who receive a share of the revenue generated.

Sample Legal Issues Associated with Virtuals

As with any emerging technology, Virtuals Protocol faces several legal challenges:

Intellectual Property Rights: The creation and use of AI agents raise questions about the ownership and protection of intellectual property. Ensuring that creators and users have clear rights and protections is crucial.

Data Privacy: AI agents collect and process vast amounts of user data, raising concerns about data privacy and security. Robust safeguards are necessary to protect user information.

Liability and Safety Standards: Ensuring the safety and reliability of AI agents is essential. Legal frameworks must address potential liabilities and establish safety standards to protect users.

Regulatory Compliance: As AI and blockchain technologies evolve, regulatory compliance becomes increasingly complex. Virtuals Protocol must navigate various legal requirements to ensure its operations remain lawful and ethical.

Securities Laws: The tokenization of AI agents as ERC-20 tokens and the fractionalized ownership of high-value AI agents may attract scrutiny under securities laws. If the SEC deems these tokens to be investment contracts under the Howey Test, the project could face enforcement actions and registration requirements. See here for our discussion on the SEC’s game plan for crypto under Trump.

Consumer Finance Laws: The collection and processing of user data by AI agents could subject the project to data privacy and consumer protection regulations. Furthermore, if promotional efforts are perceived as deceptive or unfair to users or investors, this could lead to enforcement actions under federal or state consumer protection laws. To the extent revenue-sharing models are subject to consumer protection laws, this could trigger requirements for fair and clear disclosures to fractionalized owners.

AI-Specific Regulations: The Federal Trade Commission (FTC) has issued guidance emphasizing the importance of transparency and honesty in the use of AI, and cautioning against deceptive practices such as making misleading claims about AI capabilities or results. Overstating the capabilities or revenue-generating potential of AI agents to attract users or investors could lead to increased regulatory scrutiny and enforcement. Proposed federal legislation, such as the Algorithmic Accountability Act, would require projects like Virtuals to assess automated decision-making systems, including AI, for bias and discriminatory impacts. AI agents may require audits for bias, transparency, and accountability, particularly given their use in user interactions and decision-making.

Despite the novel legal issues Virtuals Protocol presents, the project represents an exciting and significant advancement in the integration of AI and blockchain technologies. By transforming AI agents into tokenized assets, it creates new opportunities for digital engagement and revenue generation. However, addressing the associated legal issues is essential to ensure user trust and the platform’s sustainable growth.

New York Proposal to Protect Workers Displaced by Artificial Intelligence

On 14 January 2025, during her State of the State Address (the Address), New York Governor Kathy Hochul announced a new proposal aimed at supporting workers displaced by artificial intelligence (AI).1 This proposal would require employers to disclose whether AI tools played a role in mass layoffs or closings subject to New York’s Worker Adjustment and Retraining Notification Act (NY WARN Act). Governor Hochul announced that she is directing the New York State Department of Labor (DOL) to enact and enforce this requirement. The DOL does not have a timeline for implementing the new requirement, and Labor Commissioner Roberta Reardon acknowledged that “defining what counts as an AI-related layoff would be a challenge [to implementation].”2

In the Address, Governor Hochul acknowledged the benefits of AI, stating, “[innovations in AI] have the ability to change the way businesses operate, leading to greater efficiency, fewer business disruptions, and increased responsiveness to customer needs.” However, the implementation of AI tools in the workplace leads to increased automation, which may result in increased job loss, wage stagnation or loss, reduced hiring, lack of job satisfaction, and skill obsolescence—all of which are major concerns for US workers.3

The primary goals of imposing these employer disclosures are to: (i) aid transparency and gather data on the impact of AI technologies on employment and employees; and (ii) ensure the integration of AI tools into the workforce creates an environment where workers can thrive.

Implications for Employers

Disclosure Requirement

Employers in New York will need to disclose in their NY WARN Act notices whether layoffs are due to the implementation of AI tools replacing employees.

Scope

While specific details about the scope of the new disclosure requirement are not yet available, employers should prepare for this additional obligation as part of the existing complex notice requirements under the NY WARN Act.4

Compliance

Employers contemplating a NY WARN Act-triggering event should consult with legal counsel to ensure compliance with these disclosure requirements and expanded NY WARN Act obligations.

NY WARN Act

The Worker Adjustment and Retraining Notification Act (WARN Act) is a federal law that requires covered employers to provide employees with 60-day advance notice before closing a plant or conducting a mass layoff.5 The purpose of the WARN Act is to give workers and their families time to adjust to potential layoffs and to seek or train for new jobs.6 New York is one of 18 states with its own “mini-WARN Act.” The NY WARN Act imposes stricter requirements than the federal WARN Act. For example, the NY WARN Act applies to employers with 50 or more employees while the federal WARN Act applies to employers with 100 or more employees. The NY WARN Act also requires a 90-day advance notice, compared to the 60-day notice required under federal law. The early warning notices of closures and layoffs are provided to affected employees, their representatives, the Department of Labor, and local officials. If Governor Hochul’s proposal is implemented, NY WARN Act notices will also need to include the required AI disclosure.
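The threshold comparison above can be expressed as a short calculation. This is a simplified sketch that uses only the two figures stated in this article (employer size and notice period); the function names are hypothetical, and the sketch deliberately ignores the other statutory triggers, exceptions, and counting rules that determine whether a particular layoff is actually covered.

```python
from datetime import date, timedelta
from typing import Optional

# Figures taken from the comparison in the text above.
FEDERAL_WARN = {"min_employees": 100, "notice_days": 60}
NY_WARN = {"min_employees": 50, "notice_days": 90}


def required_notice_days(employee_count: int, in_new_york: bool) -> int:
    """Return the longest notice period that applies, or 0 if neither law applies.

    Simplification: coverage is decided by employer headcount alone.
    """
    days = 0
    if employee_count >= FEDERAL_WARN["min_employees"]:
        days = max(days, FEDERAL_WARN["notice_days"])
    if in_new_york and employee_count >= NY_WARN["min_employees"]:
        days = max(days, NY_WARN["notice_days"])
    return days


def latest_notice_date(layoff_date: date, employee_count: int,
                       in_new_york: bool) -> Optional[date]:
    """Return the last day on which notice can be given, or None if no notice is required."""
    days = required_notice_days(employee_count, in_new_york)
    if days == 0:
        return None
    return layoff_date - timedelta(days=days)


# A 75-employee New York employer falls under the NY threshold only.
print(required_notice_days(75, in_new_york=True))
print(latest_notice_date(date(2025, 6, 30), 75, in_new_york=True))
```

Under these assumptions, a New York employer with 50 to 99 employees is below the federal threshold but still owes the longer state notice, which is why the state rule usually drives layoff planning there.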

Takeaways for Employers

Employers should be well versed in how AI tools are being used and the impact they are having on workers, especially if such impacts may lead to mass layoffs. Specifically, legal and human resources leaders should understand how the business is automating certain processes through AI tools and the implications the tools have for headcount requirements, employee job satisfaction, and morale.

Our Labor, Employment, and Workplace Safety lawyers regularly counsel clients on a wide variety of emerging issues in labor, employment, and workplace safety law, and are well-positioned to provide guidance and assistance to clients on AI developments.

Footnotes

1 https://www.governor.ny.gov/news/governor-hochul-announces-new-proposals-support-small-businesses-and-boost-economic-growth

2 https://news.bloomberglaw.com/product/blaw/bloomberglawnews/exp/eyJpZCI6IjAwMDAwMTk0LTcxNTYtZDIzYy1hYmZjLTc1ZmU5NDhiMDAwMSIsImN0eHQiOiJETE5XIiwidXVpZCI6ImhqMGRvcTNKdGdrSkpKckZyL01QaUE9PU9seW0rTExPbVdiODlZZ1N6aWtDZHc9PSIsInRpbWUiOiIxNzM3Mzc0NjI2MDg5Iiwic2lnIjoiYTZXMnkwZnczcGZ3SnVpdlFrclV0S3FERFlnPSIsInYiOiIxIn0=?source=newsletter&item=body-link&region=text-section&channel=daily-labor-report

3 https://www.imf.org/en/Blogs/Articles/2024/01/14/ai-will-transform-the-global-economy-lets-make-sure-it-benefits-humanity#:~:text=Roughly%20half%20the%20exposed%20jobs,of%20these%20jobs%20may%20disappear; https://cepr.org/voxeu/columns/workers-responses-threat-automation.

4 12 NYCRR Part 92

5 https://www.dol.gov/general/topic/termination/plantclosings

6 Id.