Context for the Five Pillars of EPA’s ‘Powering the Great American Comeback Initiative’

On February 4, new US Environmental Protection Agency (EPA) Administrator Lee Zeldin announced EPA’s “Powering the Great American Comeback Initiative,” which is intended to achieve EPA’s mission “while emerging the greatness of the American Economy.” The initiative has five “pillars” intended to “guide the EPA’s work over the first 100 days and beyond.” These are:

Pillar 1: Clean Air, Land, and Water for Every American.

Pillar 2: Restore American Energy Dominance.

Pillar 3: Permitting Reform, Cooperative Federalism, and Cross-Agency Partnership.

Pillar 4: Make the United States the Artificial Intelligence Capital of the World.

Pillar 5: Protecting and Bringing Back American Auto Jobs.

Below, we break down each of the five pillars and present context on what these pillars may mean for the regulated community.

Pillar 1: “Clean Air, Land, and Water for Every American”

The first pillar is intended to emphasize the Trump Administration’s continued commitment to EPA’s traditional mission of protecting human health and the environment, including emergency response efforts. To underscore this focus, Zeldin’s first trip as EPA Administrator, on which he was accompanied by Vice President JD Vance, was to East Palestine, Ohio, on the two-year anniversary of a train derailment there. During the visit, Administrator Zeldin noted that the “administration will fight hard to make sure every American has access to clean air, land, and water. It was an honor to meet with local residents, and I leave this trip more motivated to this cause than ever before. I will make sure EPA continues to clean up East Palestine as quickly as possible.” After touring the derailment site to survey the cleanup, Zeldin and Vance “participated in a meeting with local residents and community leaders to learn more” about how to expedite the cleanup.

Taken alone or in conjunction with Administrator Zeldin’s trip to an environmentally impacted site in Ohio, Pillar 1 appears consistent with past EPA practice.

Read in the context of the Trump Administration’s first-day executive orders (for more, see here) and related actions, such as memoranda from Attorney General Pam Bondi on “Eliminating Internal Discriminatory Practices” and “Rescinding ‘Environmental Justice’ Memoranda,” however, Pillar 1 should be construed as meaning that EPA no longer intends to proactively work to redress issues in “environmentally overburdened” communities. Consequently, Biden Administration programs focused on environmental justice (EJ) and related equity issues have been ended. (For more, see here.)

Pillar 2: Restoring American Energy Dominance

Pillar 2 focuses on “Restoring American Energy Dominance.” What this means in practice is little surprise given President Trump’s promises during his inauguration to “drill, baby, drill.” Two first-day Executive Orders provide further context to this pillar:

The Executive Order “Declaring a National Energy Emergency” declares a national energy emergency due to inadequate energy infrastructure and supply, exacerbated by previous policies. It emphasizes the need for a reliable, diversified, and affordable energy supply to support national security and economic prosperity. The order calls for immediate action to expand and secure the nation’s energy infrastructure to protect national and economic security.

The Executive Order on “Unleashing American Energy” seeks to encourage the domestic production of energy and rare earth minerals while reversing various Biden Administration actions that limited the export of liquefied natural gas (LNG), promoted electric vehicles and energy-efficient appliances and fixtures, and required accounting for the social cost of carbon. (For context on the social cost of carbon, see here and here.)

Pillar 3: Permitting Reform, Cooperative Federalism, and Cross-Agency Partnership

Pillar 3 focuses on government efficiency including permitting reform, cooperative federalism, and cross-agency partnerships. As with Pillar 1, two of these goals (cooperative federalism and cross-agency partnership) are generally consistent with typical agency practice across all administrations even if administrations approach them in different ways.

“Permitting reform” generally means streamlining permitting processes to shorten the time from permit application to final decision.

Current events, most notably three court cases involving the National Environmental Policy Act (NEPA), require a deeper exploration of “permitting reform.” NEPA is a procedural environmental statute that requires federal agencies to evaluate the potential environmental impacts of major decisions before acting and provides the public with information about the environmental impacts of potential agency actions. The Council on Environmental Quality (CEQ), an agency within the Executive Office of the President, was created in 1969 to advise the President and develop policies on environmental issues, including ensuring that agencies comply with NEPA by conducting sufficiently rigorous environmental reviews.

Energy-related infrastructure, ranging from transmission lines to the ports needed to ship LNG, often requires NEPA reviews. During his first term, President Trump sought to streamline NEPA reviews. As we previously discussed, in 2020, CEQ regulations were overhauled to exclude requirements to discuss cumulative effects of permitting and, among other things, to set time and page limits on NEPA environmental impact statements. During the Biden Administration, in one phase of revisions, CEQ reversed course to undo the Trump Administration’s changes, and, in a second phase, the Biden Administration required evaluation of EJ concerns, climate-related issues, and increased community engagement. (For more, see here.) Predictably, litigation followed these changes. Additionally, we are waiting on the US Supreme Court’s decision in Seven County Infrastructure Coalition v. Eagle County, Colorado, which addresses whether NEPA requires federal agencies to identify and disclose environmental effects of activities that are outside their regulatory purview.

Two recent decisions add to the ongoing debate about whether CEQ ever had the authority to issue the NEPA regulations that agencies have relied upon for decades: the DC Circuit’s decision in Marin Audubon Society v. FAA (for more, see here) and a decision by a federal trial court in North Dakota in Iowa v. Council on Environmental Quality.

Pillar 4: Make the United States the Artificial Intelligence Capital of the World

EPA’s Pillar 4 seeks to promote artificial intelligence (AI) so that America is the AI “Capital of the World.”

AI issues fall within EPA’s purview because the development of AI technologies depends heavily on electric generation, transmission, and distribution, and EPA plays a key role in overseeing permitting and compliance activities for those facilities. As we have discussed, AI requires significant energy to power the data centers it needs to function, and one study found that training a single AI natural language processing model produced emissions similar to those of 125 round-trip flights between New York and Beijing. Because data center development tends to be clustered in specific regions, data centers account for more than 10% of electricity consumption in at least five states. (Report available here.)

Pillar 5: Protecting and Bringing Back American Auto Jobs

Pillar 5 focuses on supporting the American automobile industry, a goal that overlaps with the reversal of Biden Administration electric vehicle policies discussed under Pillar 2. For this sector, EPA intends to “streamline and develop smart regulations that will allow for American workers to lead the great comeback of the auto industry.” Additionally, on January 21, the US Office of Management and Budget released a memo clarifying that provisions of the “Unleashing American Energy” Executive Order were intended to pause disbursement of Inflation Reduction Act funds, including those for electric vehicle charging stations.

While the particulars of this pillar are less clear than those of some others, we expect that EPA’s efforts in this area will involve some combination of permitting reform and rollbacks of prior EPA decisions related to vehicle emissions.

Elon Musk’s Exit from OpenAI: Why He Sold His Stake and Why He Wants Back In

Elon Musk’s relationship with OpenAI, the AI research organization he co-founded in 2015, has been complicated, marked by early enthusiasm, a departure, and a more recent attempt to regain influence. The journey from co-founder to adversary and, perhaps, back […]

Hangzhou Internet Court: Generative AI Output Infringes Copyright

On February 10, 2025, the Hangzhou Internet Court announced a decision holding that an unnamed defendant, which operates a generative artificial intelligence (AI) platform, committed contributory infringement of the plaintiff’s right of information network dissemination through images generated on that platform. The court ordered the defendant to immediately stop the infringement and to pay 30,000 RMB in compensation for economic losses and reasonable expenses.

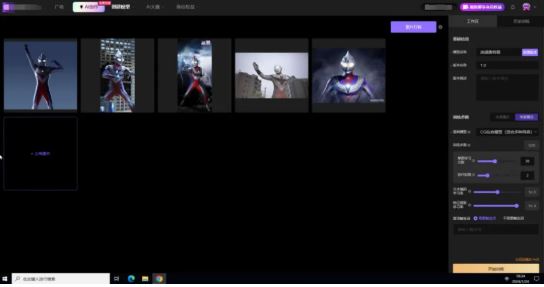

LoRA model training with Ultraman

Infringing image generated with the model.

The defendant operates an AI platform that provides Low-Rank Adaptation (LoRA) models and supports functions such as image generation and online model training. On the platform’s homepage, under the “Recommendations” and “IP Works” sections, there were AI-generated pictures and LoRA models related to Ultraman that could be applied, downloaded, published or shared. The Ultraman LoRA model was created by users who uploaded Ultraman pictures, selected one of the platform’s base models, and adjusted parameters for training. Other users could then input prompts, select a base model, and overlay the Ultraman LoRA model to generate images that closely resembled the Ultraman character.
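The LoRA mechanism described above can be illustrated with a short Python sketch. It follows the standard LoRA formulation, in which a frozen base weight matrix W0 is overlaid with a small low-rank update scaled by alpha/r. This is a simplified illustration of a single linear layer under toy assumptions, not the defendant’s platform code; the function names, the dimensions and the `strength` multiplier are all hypothetical. The point relevant to the case is that the adapter is tiny compared with the base model, yet it is a fixed update learned from one narrow set of images and applied to every generation, which is one way to understand the court’s observation that the Ultraman LoRA model allowed the character’s features to be output stably.

```python
import numpy as np


def lora_delta(A: np.ndarray, B: np.ndarray, alpha: float) -> np.ndarray:
    """Low-rank weight update (alpha / r) * B @ A, where r = A.shape[0]."""
    r = A.shape[0]
    return (alpha / r) * (B @ A)


def apply_lora(W0: np.ndarray, A: np.ndarray, B: np.ndarray,
               alpha: float, strength: float = 1.0) -> np.ndarray:
    """Overlay a LoRA adapter on a frozen base-model weight matrix.

    W0: (d_out, d_in) base-model weight; training the adapter never changes it.
    A:  (r, d_in) and B: (d_out, r) are the small factors learned from the
        user-uploaded training images, with r much smaller than d_out, d_in.
    strength: hypothetical user-facing multiplier; 0.0 disables the adapter.
    """
    return W0 + strength * lora_delta(A, B, alpha)


# Toy dimensions chosen for illustration only.
rng = np.random.default_rng(0)
d_out, d_in, r = 64, 32, 4
W0 = rng.standard_normal((d_out, d_in))       # stands in for a base-model layer
A = 0.01 * rng.standard_normal((r, d_in))     # stands in for trained LoRA factors
B = 0.01 * rng.standard_normal((d_out, r))

W = apply_lora(W0, A, B, alpha=8.0)
print(np.linalg.matrix_rank(lora_delta(A, B, alpha=8.0)))  # 4: update rank is r
print(A.size + B.size, W0.size)                            # 384 2048
```

Setting `strength` to 0.0 returns the base weights unchanged, which mirrors how a LoRA model is overlaid on, rather than built into, whichever base model a user selects, and why a shared LoRA file can be reused by many other users.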

The unnamed plaintiff (presumably Tsuburaya Productions) alleged that the defendant infringed its right of information network dissemination by placing infringing images and models on the information network after training with input images. The plaintiff further alleged that the defendant’s use of generative AI technology to train the Ultraman LoRA model and generate infringing images constituted unfair competition. The plaintiff demanded that the defendant cease the infringement and compensate it for economic damages of 300,000 RMB.

The defendant countered that its platform calls third-party open-source model code and integrates and deploys those models according to the platform’s needs to offer users a generative AI service; that the platform itself does not provide training data but only allows users to upload images to train models; and that its conduct therefore falls within the “safe harbor” rule for platforms and does not constitute infringement.

The Court reasoned:

On the one hand, if a generative AI platform directly performs acts within the scope of copyright protection, it may be liable for direct infringement. In this case, however, there was no evidence that the defendant and users jointly provided infringing works, and the defendant did not itself perform acts covered by the right of information network dissemination.

On the other hand, where users input infringing images and other training materials and decide whether to generate and publish the results, the defendant does not necessarily have a duty to review in advance the training images users upload or the dissemination of the generated content. It is liable for aiding and abetting infringement only if it is at fault with respect to the specific infringing conduct.

Specifically, the court weighed the following factors together:

First, the nature and profit model of the generative AI service. The open-source ecosystem is an important part of the AI industry, and open-source models provide general-purpose foundational algorithms. As a service provider at the application layer that directly faces end users, the defendant made targeted modifications and improvements to the open-source model for specific application scenarios and provided solutions and results that directly meet users’ needs. Compared with the provider of the open-source model, the defendant participates directly in commercial activity and benefits from the content produced through this targeted generation. In light of its service type, business logic and cost of prevention, it should maintain a sufficient understanding of the content in its specific application scenarios and bear a corresponding duty of care. In addition, the defendant earns income from users’ membership fees and offers incentives to encourage users to publish trained models. It can therefore be considered to obtain economic benefits directly from the creative services the platform provides.

Second, the popularity of the copyrighted work and the obviousness of the alleged infringement. Ultraman works are well known. When browsing the platform’s homepage and specific categories, users encounter multiple infringing pictures, and the LoRA model covers and sample pictures directly display infringing images, making the infringement relatively obvious.

Third, the infringing consequences that generative AI may cause. Generally speaking, the results users obtain from generative AI are not readily identifiable or subject to the platform’s intervention, and generated images are random. In this case, however, because the Ultraman LoRA model was used, the features of the character could be output consistently, which enhanced the platform’s ability to identify and intervene in the results of user behavior. Moreover, because the technology makes reuse easy, pictures and LoRA models generated and published by users can be used repeatedly by other users. The tendency for the infringing consequences to spread was already quite obvious, and the defendant should have foreseen the possibility of infringement.

Finally, whether reasonable measures were taken to prevent infringement. The defendant stated in its platform user service agreement that it would not review content uploaded and published by users. After receiving notice of the lawsuit, it took measures such as blocking the relevant content and conducting intellectual property reviews in the background, which shows that it had the ability to take necessary measures to prevent infringement, consistent with the technology available at the time of the infringement, but failed to do so.

In summary, the defendant should have known that network users were using its services to infringe the right of information network dissemination, but it did not take necessary preventive measures. It failed to fulfill its duty of reasonable care and was subjectively at fault, and therefore committed aiding and abetting infringement.

Because copyright infringement was found, the court did not need to consider the claims under the Anti-Unfair Competition Law.

The full text of the announcement is available here (Chinese only).

Key Insights on President Trump’s New AI Executive Order and Policy & Regulatory Implications

On January 23, 2025, President Trump issued a new Executive Order (EO) titled “Removing Barriers to American Leadership in Artificial Intelligence” (Trump EO). This EO replaces President Biden’s Executive Order 14110 of October 30, 2023, titled “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence” (Biden EO), which was rescinded on January 20, 2025, by Executive Order 14148.

The Trump EO signals a significant shift away from the Biden administration’s emphasis on oversight, risk mitigation and equity toward a framework centered on deregulation and the promotion of AI innovation as a means of maintaining US global dominance.

Key Differences Between the Trump EO and Biden EO

The Trump EO explicitly frames AI development as a matter of national competitiveness and economic strength, prioritizing policies that remove perceived regulatory obstacles to innovation. It criticizes the influence of “engineered social agendas” in AI systems and seeks to ensure that AI technologies remain free from ideological bias. By contrast, the Biden EO focused on responsible AI development, placing significant emphasis on addressing risks such as bias, disinformation and national security vulnerabilities. The Biden EO sought to balance AI’s benefits with its potential harms by establishing safeguards, testing standards and ethical considerations in AI development and deployment.

Another significant shift in policy is the approach to regulation. The Trump EO mandates an immediate review and potential rescission of all policies, directives and regulations established under the Biden EO that could be seen as impediments to AI innovation. The Biden EO, however, introduced a structured oversight framework, including mandatory red-teaming for high-risk AI models, enhanced cybersecurity protocols and monitoring requirements for AI used in critical infrastructure. The Biden administration also directed federal agencies to collaborate in developing best practices for AI safety and reliability, efforts that the Trump EO effectively halts.

The two EOs also diverge in their treatment of workforce development and education. The Biden EO dedicated resources to attracting and training AI talent, expanding visa pathways for skilled workers and promoting public-private partnerships for AI research and development. The Trump EO, however, does not include specific workforce-related provisions. Instead, the Trump EO seems to assume that reducing federal oversight will naturally allow for innovation and talent growth in the private sector.

Priorities for national security are also shifting. The Biden EO mandated extensive interagency cooperation to assess the risks AI poses to critical national security systems, cyberinfrastructure and biosecurity. It required agencies such as the Department of Energy and the Department of Defense to conduct detailed evaluations of potential AI threats, including the misuse of AI for chemical and biological weapon development. The Trump EO aims to streamline AI governance and reduce federal oversight, prioritizing a more flexible regulatory environment and maintaining US AI leadership for national security purposes.

The most pronounced ideological difference between the two executive orders is in their treatment of equity and civil rights. The Biden EO explicitly sought to address discrimination and bias in AI applications, recognizing the potential for AI systems to perpetuate existing inequalities. It incorporated principles of equity and civil rights protection throughout its framework, requiring rigorous oversight of AI’s impact in areas such as hiring, healthcare and law enforcement. Not surprisingly, the Trump EO did not focus on these concerns, reflecting a broader philosophical departure from government intervention in AI ethics and fairness – perhaps considering existing laws that prohibit unlawful discrimination, such as Title VI and Title VII of the Civil Rights Act and the Americans with Disabilities Act, as sufficient.

The two orders also take fundamentally different approaches to global AI leadership. The Biden EO emphasized the importance of international cooperation, encouraging US engagement with allies and global organizations to establish common AI safety standards and ethical frameworks. The Trump EO, in contrast, appears to adopt a more unilateral stance, asserting US leadership in AI without outlining specific commitments to international collaboration.

Implications for the EU’s AI Act, Global AI and State Legal Frameworks

The Trump administration’s deregulatory approach comes at a time when other jurisdictions, particularly the EU, are moving toward stricter regulatory frameworks for AI. The EU’s Artificial Intelligence Act (EU AI Act), which was adopted by the European Parliament in March 2024, imposes comprehensive rules on the development and use of AI technologies, with a strong emphasis on safety, transparency, accountability and ethics. By categorizing AI systems based on risk levels, the EU AI Act imposes stringent requirements for high-risk AI systems, including mandatory conformity assessments, transparency standards and oversight mechanisms.

The Trump EO’s emphasis on reducing regulatory burdens stands in stark contrast to the EU’s approach, which reflects a precautionary principle that prioritizes societal safeguards over rapid innovation. This divergence could create friction between the US and EU regulatory environments, especially for multinational companies that must navigate both systems. Although the EU AI Act has been criticized as impeding innovation, the lack of explicit ethical safeguards and risk mitigation measures in the Trump EO also could weaken the ability of US companies to compete in European markets, where compliance with the EU AI Act’s rigorous standards is a legal prerequisite for market access.

Globally, jurisdictions such as Canada, Japan, the UK and Australia are advancing their own AI policies, many of which align more closely with the EU’s focus on accountability and ethical considerations than with the US’s pro-innovation stance under the Trump administration. For example, Canada’s proposed Artificial Intelligence and Data Act emphasizes transparency and responsible development, while Japan’s AI guidelines promote trustworthy AI principles through multistakeholder engagement. Although the UK has taken a lighter-touch regulatory approach than the EU, it places a strong emphasis on safety through its AI Safety Institute.

The Trump administration’s decision to rescind the Biden EO and prioritize a “clean slate” for AI policy also may complicate efforts to establish global standards for AI governance. While the EU, the G7 and other multilateral organizations are working to align on key principles such as transparency, fairness and safety, the US’s unilateral focus on deregulation could limit its influence in shaping these global norms. Additionally, the Trump administration’s pivot toward deregulation risks creating a perception that the US prioritizes short-term innovation gains over long-term ethical considerations, potentially alienating allies and partners.

A final consideration is the potential for the Trump EO to widen the gap between federal and state AI regulatory regimes, inasmuch as it presages deregulation of AI at the federal level. Indeed, while the EO signals a federal shift toward prioritizing innovation by reducing regulatory constraints, the precise contours of the new administration’s approach to regulatory enforcement – including on issues like data privacy, competition and consumer protection – will become clearer as newly appointed federal agency leaders begin implementing their agendas. At the same time, states such as Colorado, California and Texas have already enacted AI laws with varying scope and degrees of oversight. As with state consumer privacy laws, increased state-level activity in AI also would likely lead to increased regulatory fragmentation, with states implementing their own rules to address concerns related to high-risk AI applications, transparency and sector-specific oversight.

Thus, in the absence of clear federal guidelines, businesses will face a growing patchwork of state AI regulations that will complicate compliance across multiple jurisdictions. Moreover, if Congress enacts an AI law that prioritizes innovation over risk mitigation, stricter state regulations could face federal preemption. Until then, organizations must closely monitor both federal and state developments to navigate this evolving and increasingly fragmented AI regulatory landscape.

Ultimately, a key test for the Trump administration’s approach to AI is whether it preserves and enhances US leadership in AI or allows China to build a more powerful AI platform. The US approach will undoubtedly drive investment and innovation by US AI companies. But China may be able to engage collaboratively with international AI governance initiatives, which would position it strongly as an international leader in AI. And what of DeepSeek, the Chinese AI developer: is it a flash in the pan, a stimulus for US competition or a portent for the future?

Conclusion

Overall, the Trump EO reflects a fundamental shift in US AI policy, prioritizing deregulation and free-market innovation while reducing oversight and ethical safeguards. However, this approach could create challenges for US companies operating in jurisdictions with stricter AI regulations, such as the EU, the UK, Canada and Japan, as well as in those US states that have already enacted their own AI regulatory regimes. The divergence between the US federal government’s pro-innovation strategy and the precautionary regulatory model pursued by the EU and these US states underscores the need for companies operating across these jurisdictions to adopt flexible compliance strategies that account for varying regulatory standards.

Pablo Carrillo also contributed to this article.

A Look at U.S. Government’s Changed Approach to Artificial Intelligence Development and Investments

Highlights

In January 2025, the new administration took several steps related to AI technologies and infrastructure

Many previous executive orders were rescinded, but a prior executive order regarding using federal lands for data centers remains in place

The U.S. has also announced major private investments into state-of-the-art AI data centers

Since the new administration took office, the U.S. has taken several steps to implement new strategies and priorities related to the development of, and investment in, artificial intelligence (AI) technologies.

On Jan. 20, 2025, Executive Order 14110, titled Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, was rescinded. Among other things, it required developers of the most powerful AI models to share safety test results and other information about those models with the federal government. All other previous executive orders pertaining to AI also were rescinded, except for an order related to using public lands for data centers.

On Jan. 21, 2025, several private companies announced at the White House a new private venture called the Stargate Project, which intends to invest $500 billion over the next four years building new AI infrastructure, including AI-focused data centers, in the U.S.

On Jan. 23, 2025, Executive Order 14179, Removing Barriers to American Leadership in Artificial Intelligence, was issued. This new order states that to maintain U.S. leadership in AI innovation, “we must develop AI systems that are free from ideological bias or engineered social agendas.” It also “revokes certain existing AI policies and directives that act as barriers to American AI innovation, clearing a path for the United States to act decisively to retain global leadership in artificial intelligence.”

The order further states that it is the “policy of the United States to sustain and enhance America’s global AI dominance in order to promote human flourishing, economic competitiveness, and national security.”

To accomplish those objectives, the order requires:

1) Within 180 days, the Assistant to the President for Science and Technology (APST), the Special Advisor for AI and Crypto, and the Assistant to the President for National Security Affairs (APNSA), in coordination with the Assistant to the President for Economic Policy, the Assistant to the President for Domestic Policy, the Director of the Office of Management and Budget (OMB Director), and the heads of such executive departments and agencies (agencies) as the APST and APNSA deem relevant, shall develop and submit to the President an action plan to achieve the policy set forth in section 2 of this order.

2) The APST, the Special Advisor for AI and Crypto, and the APNSA, in coordination with the heads of relevant agencies, shall (1) identify policies, directives, regulations and orders taken pursuant to EO 14110 and (2) suspend, revise, or rescind such actions if they are inconsistent with the order’s objectives.

3) Within 60 days, the OMB Director shall revise OMB’s published guidance on AI to align with the order.

Takeaways

The U.S. is taking steps to maintain and extend its edge in AI innovation amid competition from other countries, and the new executive order is one of them. Because the AI regulatory landscape continues to evolve rapidly, it is important to monitor the steps the U.S. and others take in connection with AI.

Insurtech in 2025: Opportunity and Risk

The explosion in artificial intelligence (AI) capability and applications has increased the potential for industry disruptions. One industry experiencing recent material disruption is about as traditional as it gets: insurance. While some level of disruption in the insurance industry is nothing new, AI has been accelerating more significant changes to industry fundamentals. This is the first advisory in a series exploring the legal risks and strategies surrounding disruptive insurance technologies, particularly those leveraging AI, known as Insurtech.

What is Insurtech?

Insurtech is a broad term that encompasses every stage of the insurance lifecycle. Cutting-edge technology can be instrumental in advertising, lead generation, sales, underwriting, claims processing and fraud detection, among others. Generative AI can assist in client management and retention. Insurtech can augment traditional forms of insurance such as car and health insurance, and facilitate less traditional forms of insurance, such as parametric insurance or microinsurance at scale.

Legal and Regulatory Risks of Insurtech

As Insurtech continues to evolve, designers, providers and deployers must be aware of the legal and regulatory risks inherent in the use of Insurtech at all stages. These risks are particularly heightened in the insurance world, where vendors and carriers process an enormous amount of personal information in the course of decision-making that impacts individuals’ rights, from advertising to product pricing to coverage decisions.

Regulatory scrutiny of this traditionally heavily regulated industry is further heightened in the Insurtech context, because multiple regulators have overlapping interests in new technology applications. These additional layers of oversight, which in traditional applications may not be as much of a primary concern, include the Federal Trade Commission, state attorneys general and, in some jurisdictions, state-level privacy regulators.

Building Compliance for Insurtech Solutions

Designing, providing and deploying Insurtech solutions requires a multifaceted, customized approach to position agents, vendors, carriers and indeed any entity in the insurance stack for compliance. Taking early action to build appropriate governance for your Insurtech product or application is critical to building a defensive regulatory position. For entities that have an eye on raising capital, engaging in mergers or acquisitions, or other collaborative marketplace activity, such governance will minimize friction that can impede success.

Additionally, consumers are increasingly attentive to data privacy and AI governance standards. Incorporating proper data privacy and AI governance regimes from day one is not only a forward-thinking business decision to mitigate risk and facilitate success; it is also a market imperative.

Looking Ahead: Risks and Opportunities in 2025

Over the next few months, we will take a closer look into more discrete risks and opportunities that Insurtech providers and deployers need to keep in mind throughout 2025. Follow along as we explore this exciting area that in recent years has demonstrated enormous potential for continued growth.

The New Legal Synergy: Collaborative Intelligence with Lawyers and Agentic AI

It’s easy to dismiss new technology as impractical for an industry as established as law. But we’re well past the speculation phase. AI isn’t a theoretical disruptor; it’s already here, reshaping legal work in real time.

The legal industry has witnessed a staggering increase in AI adoption, from a mere 19% in 2023 to an impressive 79% in 2024. In the UK alone, 41% of legal professionals now use AI for work, up from just 11% in July 2023. This dramatic surge is not just the latest “hype cycle”; it marks the beginning of a fundamental shift in how legal work is done.

The traditional image of a lawyer poring over dusty tomes and case files is fading. AI-powered tools are becoming integral to legal practice. But what we’ve seen so far with generative AI is just the beginning. The fundamental transformation will come with agentic AI.

Agentic AI and reasoning: the next frontier of AI

Agentic AI, the next frontier beyond generative AI, is poised to revolutionize legal work. Unlike its predecessor, agentic AI uses advanced AI systems capable of independently performing complex research or document drafting tasks. These AI systems can accomplish tasks with minimal human oversight and even check their own work before human review.

Large law firms are already experimenting with agentic AI, with experts predicting that AI systems could soon be members of legal teams. This gradual integration is expected to continue, with an emphasis on training and preparation.

Advanced legal reasoning, powered by AI

One of the most promising applications of agentic AI in the legal field is advanced legal reasoning (ALR), which goes beyond simple document analysis or basic research tasks.

ALR allows lawyers to upload tens of thousands of documents and conduct deep analyses to uncover insights into the strengths, weaknesses, and potential strategies buried in the complexities of the facts and issues — all within minutes. Leveraging the most advanced AI systems, ALR streamlines complex workflows, enabling lawyers to make informed decisions faster than ever.

It can interpret complex legal scenarios, apply relevant case law and statutes, and even suggest strategic approaches to legal problems. Lawyers can ask ALR systems questions like, “What is the weakest part of our claim concerning liability?” By analyzing key documents and referencing leading legal authorities, the ALR platform would provide a detailed, actionable response.

For example, when asked about a spouse’s income for child support calculations, ALR first employs an agent to search for the legal standard, then uses another agent to apply that understanding to case documents and extract the necessary information.

The impact of advanced legal reasoning tools is already evident. A staggering 71% of lawyers cite faster delivery as a key benefit of AI, while 54% report improved client service. Unsurprisingly, 78% of large law firms and 74% of corporate in-house teams have implemented AI changes.

Considerations for law firms adopting agentic AI

As agentic AI becomes more integrated into legal practice, firms must navigate ethical considerations and data privacy concerns. Seventy percent of firms prioritize data privacy policies when vetting technology vendors and litigation support providers. This focus on data protection is crucial, as 76% of legal professionals express concern about inaccurate or fabricated information from public AI platforms. To address these privacy and security concerns, a growing pool of legaltech companies is helping law firms adopt self-hosted AI solutions built to run within a firm’s private cloud ecosystem.

The future of agentic AI in law

Looking ahead, the future of law is undeniably intertwined with AI – from established firms to schools teaching the next generation of lawyers.

Three-quarters (75%) of organization leaders expect to change talent strategies within two years due to AI advancement. Law schools are already integrating generative AI training into their curricula, preparing the next generation of lawyers for an AI-powered workforce.

But let’s be clear: AI is not here to replace lawyers. It’s here to make them better. Those who embrace it — who approach it with curiosity and a willingness to adapt — will gain the most. The legal industry isn’t losing its expertise. It’s gaining new tools to apply that expertise more effectively.

If you take this shift seriously, AI won’t just change how you practice law — it will give you an edge.

EPA Administrator Zeldin Announces Five Pillar Initiative to Guide EPA; What Does It Mean for OCSPP?

U.S. Environmental Protection Agency (EPA) Administrator Lee Zeldin on February 4, 2025, announced the “Powering the Great American Comeback Initiative” (PGAC Initiative). It consists of five pillars and is intended to serve as a roadmap to guide EPA’s actions under Administrator Zeldin.

The five pillars are:

Clean Air, Land, and Water for Every American;

Restore American Energy Dominance;

Permitting Reform, Cooperative Federalism, and Cross-Agency Partnership;

Make the United States the Artificial Intelligence Capital of the World; and

Protecting and Bringing Back American Auto Jobs.

Administrator Zeldin explained Pillar 3 by stating, “Any business that wants to invest in America should be able to do so without having to face years-long, uncertain, and costly permitting processes that deter them from doing business in our country in the first place.” [Emphasis added.] We agree and would urge Administrator Zeldin to consider the years-long new chemical approval process under the Toxic Substances Control Act (TSCA).

There has been much discussion about the Trump Administration’s desire to reduce the size of the government by shrinking the federal workforce and to restore common sense to the decision-making process. What is getting lost in the discussion and actions taken to “right-size” the government is that chemical manufacturers and formulators rely on EPA action to bring new products to market. The public seldom hears about how agencies like EPA play a vital role in promoting innovation and supporting job creation. Instead, political rhetoric has focused on reducing agency headcounts and budgets and not enough on how to improve agency performance and efficiency.

This is not new. Dr. Richard Engler and I wrote in November 2024 about the newly unveiled Department of Government Efficiency (DOGE), “If DOGE can identify ways to improve the operation and efficiency of [EPA’s Office of Chemical Safety and Pollution Prevention (OCSPP)] (e.g., by ensuring appropriate resources and updated technology), this could lead to economic gains, greater investment, innovation, and sustainability, and yes, more jobs in the United States.” I would expand what we wrote in November to include the PGAC Initiative.

American businesses need OCSPP, a critically important EPA office that conducts safety reviews of existing products and serves as the gatekeeper for new chemical products, to be properly resourced (with funds, people, and technology), to operate efficiently and effectively, and to be held accountable for performance. If the PGAC Initiative and DOGE efforts lead to OCSPP’s proper resourcing, it would go a long way toward reversing the trend of fewer new chemicals being submitted to EPA for approval and toward keeping the commercialization of innovative new chemistries in the United States rather than overseas.

Trump Administration Unveils New AI Policy, Reverses Biden’s Regulatory Framework

Early signals from the Trump administration suggest it may move away from the Biden administration’s regulatory focus on the impact of artificial intelligence (AI) and automated decision-making technology on consumers and workers. This federal policy shift could result in an uptick in state-based AI regulation.

Quick Hits

On January 23, 2025, President Trump signed an executive order to develop an action plan to enhance AI technology’s growth while reviewing and potentially rescinding prior policies to regulate its use.

The Trump administration is reversing Biden-era guidance on AI and emphasizing the need for minimal barriers to foster innovation and U.S. leadership in artificial intelligence.

The administration is working closely with tech leaders and has tapped a tech investor and former executive as the newly created White House AI & Crypto Czar to guide policy development in the AI sector.

State legislators may step in to fill the regulatory gap.

As part of a flurry of executive actions during the first week of President Donald Trump’s second term, the president rescinded a Biden-era executive order (EO) issued on October 30, 2023, titled “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence,” which sought to create safeguards for the “responsible development and use of AI.”

Further, on January 23, 2025, President Trump took action to shape the development of AI technology, signing EO 14179, “Removing Barriers to American Leadership in Artificial Intelligence.” The order states, “It is the policy of the United States to sustain and enhance America’s global AI dominance in order to promote human flourishing, economic competitiveness, and national security.”

AI Executive Order

President Trump’s EO 14179 directs that, within 180 days, an “action plan to achieve” the EO’s AI policy be developed and submitted to the President. That plan is to be developed by the “Assistant to the President for Science and Technology (APST), the Special Advisor for AI and Crypto, and the Assistant to the President for National Security Affairs (APNSA), in coordination with the Assistant to the President for Economic Policy, the Assistant to the President for Domestic Policy, the Director of the Office of Management and Budget (OMB Director), and the heads of such executive departments and agencies (agencies) as the APST and APNSA deem relevant.”

The order also mandates that these heads of agencies immediately review all policies, directives, regulations, and other actions taken under President Biden’s now-revoked EO 14110 to identify any actions inconsistent with the EO’s policy objectives. The EO states that inconsistent actions will be suspended, revised, or rescinded as appropriate to ensure that federal guidelines and regulations do not impede the nation’s role as an AI leader.

The EO directs the OMB Director, in coordination with the APST, to revise two specific OMB memoranda “as necessary to make them consistent” with the president’s new AI policy:

OMB Memorandum M-24-10, “Advancing Governance, Innovation, and Risk Management for Agency Use of Artificial Intelligence,” issued in March 2024, which directed the Board of Governors of the Federal Reserve System to submit a biennial AI compliance plan to OMB.

OMB Memorandum M-24-18, “Advancing the Responsible Acquisition of Artificial Intelligence in Government,” issued in October 2024.

Shifting AI Policy

The Biden administration sought to create safeguards for the development of AI technology and its impact on labor markets, potential displacement of workers, and the use of AI and automated decision-making tools to make employment decisions and evaluate worker performance.

In November 2024, the U.S. Department of Labor (DOL) issued guidance on AI, detailing principles and best practices for employers in using AI in the workplace. That guidance built on prior guidance published by the DOL’s Wage and Hour Division and Office of Federal Contract Compliance Programs. Also, in 2022 and 2023, the U.S. Equal Employment Opportunity Commission (EEOC) issued guidance on employers’ use of AI tools and the potential for discrimination. As of the date of publication of this article, the EEOC’s former AI guidance has been removed from its website.

However, in a fact sheet published on January 23, 2025, the Trump administration stated that the “Biden AI Executive Order established unnecessarily burdensome requirements for companies developing and deploying AI that would stifle private sector innovation and threaten American technological leadership.” According to the fact sheet, the “development of AI systems must be free from ideological bias or engineered social agendas.”

President Trump is also reportedly working closely with many tech company leaders and AI developers. The president tapped investor and former tech executive David Sacks as the newly created “White House AI & Crypto Czar,” who will help shape policy around emerging technologies.

Next Steps

The Trump administration’s shift in AI policy marks a substantial departure from the previous administration’s focus. By rescinding Biden-era executive orders and implementing new directives to foster innovation, the Trump administration seeks to remove perceived barriers to the development of artificial intelligence technology.

Although the new administration has expressed its intent to deregulate this area, many states and jurisdictions have taken a different position, including California, Colorado, Illinois, and New York City. Other states may also consider filling the gap created by the absence of federal agency action on AI in employment.

In light of this, employers may want to continue to implement policies and procedures that protect the workplace from unintended consequences of AI use, including maintaining an AI governance team, establishing policies and practices for the safe use of AI in the workplace, enhancing cybersecurity practices, auditing results to identify and correct unintended consequences (including bias), and maintaining an appropriate level of human oversight.

The Colorado AI Act: Implications for Health Care Providers

Artificial intelligence (AI) is increasingly being integrated into health care operations, from administrative functions such as scheduling and billing to clinical decision-making, including diagnosis and treatment recommendations. Although AI offers significant benefits, concerns regarding bias, transparency, and accountability have prompted regulatory responses. Colorado’s Artificial Intelligence Act (the Act), set to take effect on February 1, 2026, imposes governance and disclosure requirements on entities deploying high-risk AI systems, particularly those involved in consequential decisions affecting health care services and other critical areas.

Given the Act’s broad applicability, including its potential extraterritorial reach for entities conducting business in Colorado, health care providers must proactively assess their AI utilization and prepare for compliance with forthcoming regulations. Below, we discuss the intent of the Act, what types of AI it applies to, future regulation, potential impact on providers, statutory compliance requirements, and enforcement mechanisms.

1. What Is the Act Trying to Protect Against?

The Act primarily seeks to mitigate algorithmic discrimination, defined as AI-driven decision-making that results in unlawful differential treatment or disparate impact on individuals based on certain characteristics, such as race, disability, age, or language proficiency. The Act seeks to prevent AI from reinforcing existing biases or making decisions that unfairly disadvantage particular groups.

Examples of Algorithmic Discrimination in Health Care

Access to Care Issues: AI-powered phone scheduling systems may fail to recognize certain accents or accurately process non-English speakers, making it more difficult for non-native English speakers to schedule medical appointments.

Biased Diagnostic Tools and Treatment Recommendations: Some AI diagnostic tools may recommend different treatments for patients of different ethnicities, not because of medical evidence but due to biases in the training data. For instance, an AI model trained primarily on data from white patients might miss early signs of disease that present differently in Black or Hispanic patients, resulting in inaccurate or less effective treatment recommendations for historically marginalized populations.

By targeting these and other AI-driven inequities, the Act aims to ensure automated systems do not reinforce or exacerbate existing disparities in health care access and outcomes.

2. What Types of AI Are Addressed by the Act?

The Act applies broadly to businesses using AI to interact with or make decisions about Colorado residents. Although high-risk AI systems, meaning those that are a substantial factor in making consequential decisions, are subject to more stringent requirements, the Act imposes obligations on most AI systems used in health care.

Key Definitions in the Act

“Artificial Intelligence System” means any machine-based system that generates outputs — such as decisions, predictions, or recommendations — that can influence real-world environments.

“Consequential Decision” means a decision that materially affects a consumer’s access to or cost of health care, insurance, or other essential services.

“High-Risk AI System” means any AI tool that makes or substantially influences a consequential decision.

“Substantial Factor” means a factor that assists in making a consequential decision or is capable of altering the outcome of a consequential decision and is generated by an AI system.

“Developers” means creators of AI systems.

“Deployers” means users of high-risk AI systems.

3. How Can Health Care Providers Ensure Compliance?

Although the Act sets out broad obligations, specific regulations are still forthcoming. The Colorado Attorney General has been tasked with developing rules to clarify compliance requirements. These regulations may address:

Risk management and compliance frameworks for AI systems.

Disclosure requirements for AI usage in consumer-facing applications.

Guidance on evaluating and mitigating algorithmic discrimination.

Health care providers should monitor developments as the regulatory framework evolves to ensure their AI-related practices align with state law.

4. How Could the Act Impact Health Care Operations?

The Act will require health care providers to specifically evaluate how they use AI across various operational areas, as the Act applies broadly to any AI system that influences decision-making. Given AI’s growing role in patient care, administrative functions, and financial operations, health care organizations should anticipate compliance obligations in multiple domains.

Billing and Collections

AI-driven billing and claims processing systems should be reviewed for potential biases that could disproportionately target specific patient demographics for debt collection efforts.

Deployers should ensure that their AI systems do not inadvertently create financial barriers for specific patient groups.

Scheduling and Patient Access

AI-powered scheduling assistants must be designed to accommodate patients with disabilities and limited English proficiency to prevent inadvertent discrimination and delayed access to care.

Providers must evaluate whether their AI tools prioritize certain patients over others in a way that could be deemed discriminatory.

Clinical Decision-Making and Diagnosis

AI diagnostic tools must be validated to ensure they do not produce biased outcomes for different demographic groups.

Health care organizations using AI-assisted triage tools should establish protocols for reviewing AI-generated recommendations to ensure fairness and accuracy.

5. If You Use AI, With What Do You Need to Comply?

The Act establishes different obligations for Developers and Deployers. Health care providers will in most cases be “Deployers” of AI systems as opposed to Developers. Health care providers will want to scrutinize contractual relationships with Developers for appropriate risk allocation and information sharing as providers implement AI tools into their operations.

Obligations of Developers (AI Vendors)

Disclosures to Deployers: Developers must provide transparency about the AI system’s training data, known biases, and intended use cases.

Risk Mitigation: Developers must document efforts to minimize algorithmic discrimination.

Impact Assessments: Developers must evaluate whether the AI system poses risks of discrimination before deploying it.

Obligations of Deployers (e.g., Health Care Providers)

Duty to Avoid Algorithmic Discrimination

Deployers of high-risk AI systems must use reasonable care to protect consumers from known or foreseeable risks of algorithmic discrimination.

Risk Management Policy & Program

Deployers must implement a risk management policy and program that identifies, documents, and mitigates risks of algorithmic discrimination.

The program must be iterative, regularly updated, and aligned with recognized AI risk management frameworks.

Requirements vary based on the deployer’s size, complexity, AI system scope, and data sensitivity.

Impact Assessments (Regular & Event-Triggered Reviews)

Timing Requirements: Deployers must conduct impact assessments:

Before deploying any high-risk AI system.

At least annually for each deployed high-risk AI system.

Within 90 days after any intentional and substantial modification to the AI system.

Required Content: Each impact assessment must include the AI system’s purpose, intended use, and benefits, an analysis of risks of algorithmic discrimination and mitigation measures, a description of data processed (inputs, outputs, and any customization data), performance metrics and system limitations, transparency measures (including consumer disclosures), and details on post-deployment monitoring and safeguards.

Special Requirements for Modifications: If an impact assessment is conducted due to a substantial modification, it must also include an explanation of how the AI system’s actual use aligned with or deviated from its originally intended purpose.
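The recurring timing rules above come down to simple date arithmetic. The Python sketch below computes the next impact-assessment deadline for a high-risk system that is already deployed. It is illustrative only, not a statement of how the Act must be applied. The function names are hypothetical, and the sketch makes three assumptions. First, the annual period runs from the most recent assessment. Second, the 90-day window runs from the date of an intentional and substantial modification made after that assessment. Third, the pre-deployment assessment is handled separately. Forthcoming Attorney General rules may count these periods differently.

```python
from datetime import date, timedelta
from typing import Optional

# Assumption: the 90-day window for modification-triggered assessments,
# as summarized in the list above, counted in calendar days.
MODIFICATION_WINDOW = timedelta(days=90)


def add_one_year(d: date) -> date:
    """Return the same calendar date one year later (Feb 29 maps to Feb 28)."""
    try:
        return d.replace(year=d.year + 1)
    except ValueError:
        # Only Feb 29 can fail here, because the following year is not a leap year.
        return d.replace(year=d.year + 1, day=28)


def next_assessment_due(last_assessment: date,
                        last_modification: Optional[date] = None) -> date:
    """Hypothetical helper: earliest upcoming impact-assessment deadline.

    Assumes the annual clock runs from the most recent assessment and that a
    substantial modification made after that assessment starts a 90-day clock.
    Whichever deadline comes first is returned.
    """
    annual_due = add_one_year(last_assessment)
    if last_modification is not None and last_modification > last_assessment:
        return min(annual_due, last_modification + MODIFICATION_WINDOW)
    return annual_due
```

Under these assumptions, take a system last assessed on February 1, 2026 and substantially modified on June 1, 2026. It would be due for a new assessment by August 30, 2026, well before its February 1, 2027 annual date. A modification on November 15, 2026 would not move the deadline, because the annual date comes first.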

Notifications & Transparency

Public Notice: Deployers must publish a statement on their website describing the high-risk AI systems they use and how they manage discrimination risks.

Notices to Patients/Employees: Before an AI system makes a consequential decision, individuals must be notified of its use.

Post-Decision Explanation: If AI contributes to an adverse decision, deployers must explain its role and allow the individual to appeal or correct inaccurate data.

Attorney General Notifications: If AI is found to have caused algorithmic discrimination, deployers must notify the Attorney General within 90 days.

Small deployers (those with fewer than 50 employees) who do not train AI models with their own data are exempt from many of these compliance obligations.

6. How is the Act Enforced?

Only the Colorado Attorney General has enforcement authority.

A rebuttable presumption of compliance exists if Deployers follow recognized AI risk management frameworks.

There is no private right of action, meaning consumers cannot sue directly under the Act.

Health care providers should take early action to assess their AI usage and implement compliance measures.

Final Thoughts: What Health Care Providers Should Do Now

The Act represents a significant shift in AI regulation, particularly for health care providers who increasingly rely on AI-driven tools for patient care, administrative functions, and financial operations.

Although the Act aims to enhance transparency and mitigate algorithmic discrimination, it also imposes substantial compliance obligations. Health care organizations will have to assess their AI usage, implement risk management protocols, and maintain detailed documentation.

Given the evolving regulatory landscape, health care providers should take a proactive approach by auditing existing AI systems, training staff on compliance requirements, and establishing governance frameworks that align with best practices. As rulemaking by the Colorado Attorney General progresses, staying informed about additional regulatory requirements will be critical to ensuring compliance and avoiding enforcement risks.

Ultimately, the Act reflects a broader trend toward AI regulation that is likely to extend beyond state borders. Health care organizations that invest in AI governance now will not only mitigate legal risks but also maintain patient trust in an increasingly AI-driven industry.

If health care providers plan to integrate AI systems into their operations, conducting a thorough legal analysis is essential to determine whether the Act applies to their specific use cases. This should also include careful review and negotiation of service agreements with AI Developers to ensure that the provider has sufficient information and cooperation from the Developer to comply with the Act and to properly allocate risk between the parties.

Compliance is not a one-size-fits-all process. It requires careful evaluation of AI tools, their functions, and their potential to influence consequential decisions. Organizations should work closely with legal counsel to navigate the Act’s complexities, implement risk management frameworks, and establish protocols for ongoing compliance. As AI regulations evolve, proactive legal assessment will be crucial to ensuring that health care providers not only meet regulatory requirements but also uphold ethical and equitable AI practices that align with broader industry standards.

Bipartisan Push to Strengthen American Supply Chains

Members of the Senate Commerce Committee have demonstrated an early bipartisan interest in continuing to promote U.S. supply chain resilience, highlighting an avenue for bipartisanship in the Trump Administration’s foreign policy agenda.

Sen. Marsha Blackburn (R-Tennessee) has partnered with Democratic colleagues as an original cosponsor on the reintroduction of two pieces of legislation aimed at coordinating the U.S. government’s focus on supply chain resilience: the Strengthening Support for American Manufacturing Act (S. 99) and the Promoting Resilient Supply Chains Act (S. 257).

The Strengthening Support for American Manufacturing Act would require the Secretary of Commerce and the National Academy of Public Administration to produce a report on the effectiveness and management of the Department of Commerce’s various manufacturing support programs. Notably, the report is tasked with identifying relevant offices and bureaus within the Department of Commerce with responsibilities related to critical supply chain resilience and to manufacturing and industrial innovation, and with making recommendations to improve their efficiency by identifying gaps and duplicative duties between offices.

Sen. Gary Peters (D-Michigan), who introduced the Strengthening Support for American Manufacturing Act, explains that the legislation is intended to streamline the various manufacturing programs offered by the federal government. Specifically, in a press release associated with the bill, Sen. Peters highlights a 2017 Government Accountability Office report that identified 58 manufacturing-related programs across 11 federal agencies that serve U.S. manufacturing, several of which are managed by the Department of Commerce.

The Promoting Resilient Supply Chains Act (the “PRSCA”) would establish a Supply Chain Resilience Working Group (the “Working Group”) composed of federal agencies, including the Departments of Commerce, State, Defense, Agriculture, and Health and Human Services, among others. Moreover, under the PRSCA, the Assistant Secretary of Commerce for Industry and Analysis would be required to designate “critical industries,” “critical supply chains,” and “critical goods,” and the Working Group would be charged with mapping, monitoring, and modeling U.S. capacity to mitigate vulnerabilities in these areas.

Notably, during the Commerce Committee’s January 29, 2025, hearing to consider the nomination of Howard Lutnick to become Secretary of Commerce, Sen. Lisa Blunt Rochester (D-Delaware), the author of the PRSCA, asked Mr. Lutnick whether the Department of Commerce would maintain the agency’s supply chain mapping initiatives under his direction. Mr. Lutnick replied in the affirmative.

In discussing the merits of the PRSCA, Sen. Blackburn stated: “To achieve a strong, resilient, supply chain, we must have a coordinated, national strategy that decreases dependence on our adversaries, like Communist China, and leverages American ingenuity.” This claim is particularly relevant to the PRSCA’s promise to design and implement an “early warning supply chain disruption system” that would employ artificial intelligence and quantum computing to identify and mitigate potential supply chain shocks. As a crisis response measure, the platform would locate alternative sourcing options for supply chains under imminent threat and press the private sector to shift supply chains toward “countries that are allies or key international partners” of the United States. Secretary of State Marco Rubio has emphasized that the Trump Administration’s foreign policy program will prioritize “relocating [U.S.] critical supply chains closer to the Western Hemisphere,” namely in Latin American countries, as a means to enhance “neighbors’ economic growth and safeguard Americans’ own economic security.”

Sen. Blackburn’s willingness to support these Democratic-led bills reflects a growing bipartisan sense that recent geopolitical conflicts, natural disasters, and the COVID-19 pandemic have exposed the fragility of U.S. supply chains. Additionally, the PRSCA has been endorsed by the private sector, including the Information Technology Industry Council, the National Association of Electrical Distributors, the National Association of Wholesaler-Distributors, and the Supply Chain Resiliency Consumer Brands Association.

It remains uncertain whether either the PRSCA or the Strengthening Support for American Manufacturing Act can advance this Congress as a standalone bill, as the Trump Administration’s tariff and foreign assistance actions deepen partisan divides. Still, the bills’ emphasis on government efficiency, prioritizing American manufacturing, and near-shoring may allow them to leverage the Trump Administration’s “America First” and “Department of Government Efficiency” themes to ride momentum into FY 2026 annual appropriations legislation under a national security title. Accordingly, importers interested in the U.S. market would likely benefit from reviewing their supply chains with a long view that seeks opportunities to reinvest in American manufacturing and looks to near-shore material supply chains, particularly in the Western Hemisphere, where the Trump Administration has underscored its interest in boxing out Chinese investment.

Colorado’s AI Task Force Proposes Updates to State’s AI Law

Stemming from Colorado’s Concerning Consumer Protections in Interactions with Artificial Intelligence Systems Act (the Act), which will impose obligations on developers and deployers of artificial intelligence (AI), the Colorado Artificial Intelligence Impact Task Force recently issued a report outlining potential areas where the Act can be “clarified, refined[,] and otherwise improved.”

The Task Force’s mission is to review issues related to AI and automated decision systems (ADS) affecting consumers and employees. The Task Force met on several occasions and prepared a report summarizing its findings, which identify the following areas for potential change:

Revise the Act’s definition of the types of decisions that qualify as “consequential decisions,” as well as the definition of “algorithmic discrimination,” “substantial factor,” and “intentional and substantial modification;”

Revamp the list of exemptions to what qualifies as a “covered decision system;”

Change the scope of the information and documentation that developers must provide to deployers;

Update the triggering events and timing for impact assessments, and change the requirements for deployer risk management programs;

Consider replacing the duty of care standard for developers and deployers (i.e., whether the standard should be more or less stringent);

Consider whether to narrow or expand the small business exemption (the current exemption under the Act is for businesses with fewer than 50 employees);

Consider whether businesses should be provided a cure period for certain types of non-compliance before Attorney General enforcement under the Act; and,

Revise the trade secret exemptions and provisions related to a consumer’s right to appeal.

As of today, the requirements for AI developers and deployers under the Act go into effect on February 1, 2026. However, the Task Force recommends reconsidering the law’s implementation timing. We will continue to track this first-of-its-kind AI law.