Clickbait: Actual Scope (Not Intended Scope) Determines Broadening Reissue Analysis

The US Court of Appeals for the Federal Circuit affirmed the Patent Trial & Appeal Board’s rejection of a proposed reissue claim for being broader than the original claim, rejecting the inventors’ argument that the analysis should focus on the intended scope of the original claim rather than the actual scope. In re Kostić, Case No. 23-1437 (Fed. Cir. May 6, 2025) (Stoll, Clevenger, Cunningham, JJ.)

Miodrag Kostić and Guy Vandevelde are the owners and listed inventors of a patent directed to “method[s] implemented on an online network connecting websites to computers of respective users for buying and selling of click-through traffic.” Click-through links are typically seen on an internet search engine or other website inviting the user to visit another page, often to direct sales. Typical prior art transactions would require an advertiser to pay the search engine (or other seller) an upfront fee in addition to a fee per click, not knowing in advance what volume or responsiveness the link will generate. The patent at issue discloses a method where the advertiser and seller first conduct a trial of click-through traffic to get more information before the bidding and sale process. The specification also discloses a “direct sale process” permitting a seller to bypass the trial and instead post its website parameters and price/click requirement so advertisers can start the sale process immediately.

The independent claim recites a “method of implementing on an online network connecting websites to computers of respective users for buying and selling of click-through traffic from a first exchange partner’s web site.” The claim requires “conducting a pre-bidding trial of click-through traffic” and “conducting a bidding process after the trial period is concluded.” A dependent claim further requires “wherein the intermediary web site enables interested exchange partners to conduct a direct exchange of click-through traffic without a trial process.”

The patent was issued in 2013, and the inventors filed for reissue in 2019. The reissue application cited an error, stating that the “[d]ependent claim [] fails to include limitations of [the independent] claim,” where the dependent claim “expressly excludes the trial bidding process referred to in the method of [the independent] claim,” which would render it invalid under 35 U.S.C. § 112. To fix the error, the inventors attempted to rewrite the dependent claim as an independent claim that omitted a trial process.

The examiner issued a nonfinal Reissue Office Action rejecting the reissue application as a broadening reissue outside of the statutory two-year period. The examiner interpreted the original dependent claim to require all steps of the independent claim, including the trial period, and further to require a direct sale without its own trial, beyond the trial claimed in the independent claim. The inventors then attempted to rewrite the dependent claim as the method of the independent claim with “and/or” language regarding the trial process versus the direct sale process. The amendment was rejected for the same reasons. The Board affirmed on appeal.

Whether amendments made during reissue enlarge the scope of the claim in violation of 35 U.S.C. § 251 is a matter of claim construction. The inventors argued that the proper inquiry was not whether the scope of the proposed reissue version of the dependent claim was broader than the scope of the original dependent claim but whether the scope of the proposed reissue claim was broader than the “intended scope” of the original dependent claim.

The Federal Circuit rejected the inventors’ argument, finding that it contradicted the plain text of § 251(d), which prohibits reissue patents enlarging the scope of the claims, not reissue patents enlarging the intended scope of the claims. The Court further reasoned that “[l]ooking at the intended scope rather than the actual scope of the original claim would prejudice competitors who had reason to rely on the implied disclaimer involved in the terms of the original patent.” Finding that the text, history, and purpose of § 251 all counsel against reviewing the “intended scope” of claims on reissue, the Court affirmed the Board’s denial.

EDPB and EDPS Support GDPR Record-Keeping Simplification Proposal

On May 8, 2025, the European Data Protection Board (“EDPB”) and the European Data Protection Supervisor (“EDPS”) adopted a joint letter addressed to the European Commission regarding the upcoming proposal to simplify record-keeping obligations under the EU General Data Protection Regulation (“GDPR”). This proposal aims to amend Article 30(5) of the GDPR, simplifying the record-keeping requirements and reducing administrative burdens while maintaining robust data protection standards.

The European Commission proposed the following changes to Article 30(5) of the GDPR:

Exemptions for Small Mid-Cap Companies: Extending the derogation which currently applies to enterprises or organizations with fewer than 250 employees (including small and medium-sized enterprises or SMEs), to also cover “small mid-cap companies,” i.e., companies with fewer than 500 employees and with a defined annual turnover, as well as organizations such as non-profits with fewer than 500 employees.

Expansion of Application: Modifying the derogation so it would not apply if the processing is “likely to result in a high risk to the rights and freedoms of natural persons,” as opposed to the current provision, which only mentions processing likely to result in a “risk,” therefore broadening the ability to use the derogation.

Limiting Record-Keeping Exceptions: Removing certain exceptions to the record-keeping derogation, including references to occasional processing and possibly special categories of data.

Employment, Social Security or Social Protection Law Exception: Introducing a recital clarifying that the obligation to maintain records of processing activities would not apply to the processing of special categories of data to comply with legal obligations in the field of employment, social security or social protection law in accordance with Article 9(2)(b) of the GDPR.
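The effect of the proposed changes can be sketched as a simple decision rule. The following is a minimal illustration based only on the summary above, not on the legislative text: the `ProcessingProfile` type, its field names, and the three-level risk scale are hypothetical, and the turnover condition for small mid-cap companies is omitted because the summary does not state the threshold.

```python
from dataclasses import dataclass


@dataclass
class ProcessingProfile:
    """Hypothetical summary of an organization's processing (illustrative only)."""
    employees: int
    risk_level: str           # assumed scale: "none", "risk", or "high"
    occasional: bool          # True if the processing is only occasional
    special_categories: bool  # True if special categories of data are processed


def exempt_under_current_rule(p: ProcessingProfile) -> bool:
    # Current derogation as summarized above: fewer than 250 employees,
    # unavailable where processing is likely to result in a risk, is not
    # occasional, or involves special categories of data.
    return (
        p.employees < 250
        and p.risk_level == "none"
        and p.occasional
        and not p.special_categories
    )


def exempt_under_proposed_rule(p: ProcessingProfile) -> bool:
    # Proposed derogation as summarized above: fewer than 500 employees,
    # unavailable only where processing is likely to result in a HIGH risk.
    # Assumption: the turnover condition is not modeled (threshold not given).
    return p.employees < 500 and p.risk_level != "high"


mid_cap = ProcessingProfile(employees=300, risk_level="risk",
                            occasional=False, special_categories=False)
small_high_risk = ProcessingProfile(employees=10, risk_level="high",
                                    occasional=True, special_categories=False)

print(exempt_under_current_rule(mid_cap), exempt_under_proposed_rule(mid_cap))
print(exempt_under_proposed_rule(small_high_risk))
```

The second profile reflects the EDPB and EDPS observation that even very small companies can engage in high-risk processing: headcount alone would not bring such a company within the derogation.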

In their joint letter, the EDPB and EDPS express “preliminary support to this targeted simplification initiative,” noting that they support the retention of a risk-based approach in respect of processing, and observing that “even very small companies can still engage in high-risk processing.” Both parties welcome the opportunity for a formal consultation to take place after the publication of the draft legislative change.

BIS Issues Four Key Updates on Advanced Computing and AI Export Controls

On May 13, 2025, the U.S. Department of Commerce’s Bureau of Industry and Security (“BIS”) announced four significant policy developments under the Export Administration Regulations (“EAR”), affecting exports, reexports, and in-country transfers of certain advanced integrated circuits (“ICs”) and related computing items with artificial intelligence (“AI”) applications. These actions reflect the Trump administration’s first moves to address national security risks associated with exports of emerging technologies, and to prevent use of such items in a manner contrary to U.S. policy. Below is a summary of each development and its practical implications.

1. Initiation of Rescission of the “AI Diffusion Rule”

As explained in a press release, BIS has begun the process to rescind the so-called “AI Diffusion Rule,” issued in the closing days of the Biden administration and slated to go into effect on May 15. That rule would have imposed sweeping worldwide controls on specified ICs and set up a three-tiered system for access to such items by countries around the world. The rescission is intended to streamline U.S. export controls and avoid “burdensome new regulatory requirements” and strain on U.S. diplomatic relations.

It will be important to monitor developments for BIS’s anticipated issuance of the formal rescission and for the control regime that BIS will likely implement in its place. In the meantime, all IC-related controls preceding the AI Diffusion Rule remain in effect.

2. New End-Use Controls for Advanced Computing Items

BIS has issued a policy statement informing the public of new end-use controls targeting the training of large AI models. Specifically, the statement provides that the EAR may impose restrictions on the export, reexport, and in-country transfer of certain advanced ICs and computing items when there is knowledge or reason to know that the items will be used for training AI models for or on behalf of weapons of mass destruction or military-intelligence end-uses in or end-users headquartered in China and other countries in BIS Country Group D:5. Furthermore, U.S. persons are prohibited from knowingly supporting such activity.

This development underscores the importance of robust due diligence and end-use screening for companies involved in exports, re-exports, and transfers of such items, especially to Infrastructure as a Service providers.

3. Guidance to Prevent Diversion: Newly Specified Red Flags

To assist industry in preventing unauthorized diversion of controlled items to prohibited end-users or end-uses, BIS has published updated guidance identifying new “red flags” that may indicate a risk of such diversion. The guidance provides practical examples and scenarios, such as unusual purchasing patterns, requests for atypical technical specifications, or inconsistencies in end-user information. Companies are encouraged to review and update their compliance programs to incorporate these new red flags and to ensure that employees are trained to recognize and respond to potential diversion risks.

4. Prohibition of Transactions Involving Certain Huawei “Ascend” Chips Under “General Prohibition Ten”

BIS has released guidance regarding the use of and transactions in certain Huawei “Ascend” chips meeting the parameters for control under Export Control Classification Number (“ECCN”) 3A090, clarifying the application to such activities of “General Prohibition Ten” under the EAR. This prohibition restricts all persons worldwide from engaging in a broad range of dealings in, and use of, specified Ascend chips that BIS alleges were produced in violation of the EAR.

Regarding due diligence in this context, BIS has provided the following guidance:

If a party intends to take any action with respect to a PRC 3A090 IC for which it has not received authorization from BIS, that party should confirm with its supplier, prior to performing any of the activities identified in GP10 to ensure compliance with the EAR, that authorization exists for the export, reexport, transfer (in-country), or export from abroad of (1) the production technology for that PRC 3A090 IC from its designer to its fabricator, and (2) the PRC 3A090 IC itself from the fabricator to its designer or other supplier.

Key Takeaways for Industry

It is important to keep in mind that the BIS actions focus on dealings in ICs and advanced computing items meeting the control parameters of ECCN 3A090 and related ECCNs. With that in mind, the following steps are recommended:

Review and update compliance programs: Impacted companies should promptly assess their export control policies and procedures in light of these developments, with particular attention to end-use and end-user screening.

Monitor regulatory changes: The rescission of the AI Diffusion Rule and the introduction of new end-use and General Prohibition Ten controls may require adjustments to licensing strategies.

Enhance employee training: Incorporate the newly specified red flags and guidance into training materials for relevant personnel.

BIS’s latest actions reflect a dynamic regulatory environment for national security regulation of advanced computing and AI technologies. Companies operating in these sectors should remain vigilant and proactive in managing compliance risks, as there are likely to be more developments in this area in the months ahead.

ANOTHER SLICE OF THE PIE: Serial TCPA Plaintiff Goes After Pizza Hut

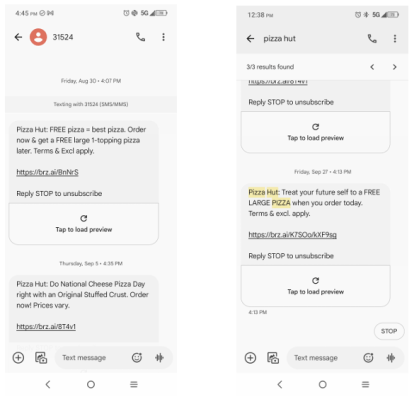

It seems Joseph Brennan is no stranger to the drive-thru – or the courtroom. See FROM CORN DOGS TO COURTROOMS: Sonic’s Texts Might Cost More Than a Combo Meal – TCPAWorld. This time, he’s served a steaming hot complaint against Pizza Hut.

Joseph Brennan v. Pizza Hut, Inc. was originally filed in the Western District of Louisiana. Following an unopposed motion to transfer by Pizza Hut, the case was moved to the Northern District of Texas on May 13, 2025.

In his Complaint, Brennan states that he never gave Pizza Hut his phone number, never opted in to any of their rewards programs, and never had a business relationship with Pizza Hut. Yet, Brennan alleges that he received three unsolicited text messages from Pizza Hut on August 30, 2024, September 5, 2024, and September 27, 2024, despite placing his number on the National Do Not Call Registry.

Brennan also purports to represent the following class:

All persons throughout the United States (1) to whom Pizza Hut delivered, or directed to be delivered, more than one text message within a 12 month period for purposes of solicitating the sale of a Pizza Hut product, (2) where the person’s telephone number had been registered with the National Do Not Call Registry for at least thirty (30) days before Pizza Hut delivered or directed to be delivered at least two of the text messages within the 12 month period, (3) from four-years prior to the filing of the initial complaint in this action through the date notice is disseminated to a certified class, and (4) for whom Defendant claims it obtained prior express invitation or permission in the same manner as Defendant claims it obtained prior express invitation or permission from Plaintiff.

As the case heats up in Texas, we’ll be keeping a close eye on whether Brennan’s claims rise to the occasion.

Massachusetts Court Grants Motion to Dismiss “Spy Pixel” Privacy Class Action for Lack of Standing

On January 31, 2025, in Campos v. TJX Companies, Inc., No. 24-cv-11067, the District of Massachusetts granted a motion to dismiss a class action due to the plaintiff’s lack of standing. The court concluded that the named plaintiff’s claims regarding the intrusion of her privacy by “spy pixels” could not be successful because there was no injury in fact.

TJX Pixel Software and Campos’ Privacy Claims

Arlette Campos filed a putative class action against defendant TJX Companies (“TJX”) alleging that it intruded upon her privacy through promotional emails it sent to her.

Campos claimed that TJX had embedded pixel software in its promotional emails, which collect information about the email recipient, including when the email is opened and read, the recipient’s location, how long the recipient spends reading the email, and the email server the recipient uses.

Although Campos had provided TJX with her email and subscribed to their email list, she claimed that TJX collected her private information without her consent.

TJX Challenges Whether Campos Met Article III Standing Requirements

TJX filed a motion to dismiss under Rule 12(b)(1) for lack of subject matter jurisdiction, claiming that Campos lacked standing.

Article III of the Constitution requires that litigants have standing to sue. Whether a litigant has standing to sue is an inquiry of three elements: injury in fact, traceability, and redressability.

TJX challenged Campos’ standing on the basis that she did not suffer an injury in fact.

To sufficiently plead an injury in fact, a plaintiff must allege a concrete harm. Quoting from TransUnion LLC v. Ramirez, the court highlighted that “traditional tangible harms, such as physical and monetary harms, are obvious[ly] concrete.” However, based on the holding in TransUnion, the court made clear that “[i]ntangible harms can also be concrete . . . such as reputational harms, disclosure of private information, and intrusion upon seclusion.”

Thus, even though Campos did not have a traditional, tangible harm, this did not necessarily preclude a finding of concrete harm.

Court Rejects Campos’ Intrusion Upon Seclusion Claim for Her Injury

Campos pointed to the tort of intrusion upon seclusion to argue that she was injured. The Restatement Second of Torts defines intrusion upon seclusion as the intentional intrusion “upon the solitude or seclusion of another or his private affairs or concerns.”

For this claim to be actionable, the intrusion must be “highly offensive to a reasonable person,” and the matter intruded upon must be deeply private, personal, and confidential.

Based on this, the court rejected the argument that the emails would fall within the ambit of deeply personal and private information contemplated by the tort because Campos provided her email address to TJX (which the court described as “certainly not private”), she had consented to receive promotional emails, there was “nothing particularly private about the email’s subject or other content,” and TJX authored the contents of the emails, meaning they would have been known “with or without the pixels.”

Additionally, although the court noted that opening private mail is an example of an intrusion mentioned in the Restatement, because TJX did not peer into Campos’ inbox beyond the emails it authored, there was no intrusion here.

Even for other sensitive information that the pixels collected, such as whether, when, where, and for how long Campos read the emails, the court rejected Campos’ argument that this was private and personal information meant to be protected by the tort. The court found no precedent that “reading habits” for content authored by the defendant are “the type of private, personal information that the tort was aimed at protecting under the common law.”

The court was troubled by allegations that the pixel software tracked whether the email was forwarded, which it deemed “closest to tracking ‘unrelated personal messages,’” but faulted the absence of any allegation that “pixels could track to whom the email was forwarded or the content of that forwarded message.”

Therefore, the court held that Campos failed to adequately plead this claim, and thus, failed to establish that she was injured.

Court Rejects Campos’ Analogy to Other Privacy Harms for Her Injury

Campos also argued that use of pixel technology is similar to cases arising under the Telephone Consumer Protection Act (“TCPA”), which prohibits unsolicited marketing calls and faxes, and the Video Privacy Protection Act (“VPPA”), which prohibits the sharing of video rental records. The court, however, rejected these analogies.

In TCPA cases, standing has been found where recipients did not consent to being contacted. In this case, Campos willingly subscribed to receive emails from TJX, opened and read them, and took no steps to unsubscribe. Based on this, the court held that the TCPA was inapplicable, and Campos could not meet the standing requirement by relying upon it.

Similarly, the VPPA solely contemplates the disclosure of video rental and sale records, and because Campos did not allege any such disclosure, the court held that no harm occurred that could justify applying the VPPA and it thereby could not confer standing, either.

Based on Campos’ inability to establish standing, the court granted the motion to dismiss.

Fast Forward: Article III Standing and Class Certification

In this latest class action, the named plaintiff was unable to meet Article III’s standing requirement. However, even if Campos had done so, she would have had to overcome another hurdle: establishing that the vast majority of absent class members also had standing.

The Supreme Court’s holding in TransUnion stands for the proposition that every member of a class, including absent members, must establish a concrete injury under Article III to be awarded individual damages. The Supreme Court did not, however, address the issue of class certification where the class contains absent members who lack Article III standing.

The Supreme Court is poised to answer this question in Laboratory Corporation of America Holdings v. Davis, in which it granted certiorari in January 2025. Courts that have answered this question have done so differently, leading to a three-way split among the circuits.

The D.C. Circuit and First Circuit permit certification of a class only if the number of uninjured members is de minimis. The Ninth Circuit permits certification even if the class includes more than a de minimis number of uninjured class members. The Eighth and Second Circuits have taken the strictest approach, rejecting certification if any members are uninjured.

Given that venue may be outcome determinative in this regard, until the Supreme Court addresses this question, defendants should scrutinize potential standing deficiencies for both class representatives and absent class members. The Supreme Court heard argument in Lab Corp on April 29, 2025, and should soon issue a decision that may provide important clarity to class action litigants on this question of standing.

Changes to EEO-1 Report Approved

As an update to our previous post, the EEOC’s request for a non-substantive change to remove the option for employers to voluntarily report non-binary data on the EEO-1 data collection has been approved without change.

We are now waiting to see when EEOC will open the 2024 EEO data collection portal. In the proposed instructions filed with the requested change, EEOC indicated May 20, 2025 as the anticipated opening.

We are continuing to monitor the situation and will report back with any updates.

California Privacy Protection Agency Fines National Clothing Retailer More Than US$345,000 for Alleged CCPA Privacy Rights Violations

The California Privacy Protection Agency (CPPA) has made clear that failing to ensure compliance with consumer privacy requests can be costly. Last week was no different when the CPPA took decisive enforcement action against national clothing retailer, Todd Snyder, Inc., signaling that companies’ execution of consumer rights requests under the California Consumer Privacy Act of 2018, as amended (the CCPA), is at the center of the California privacy regulator’s priorities. This article explores the basis for the CPPA’s latest enforcement action and summarizes key takeaways to help minimize regulatory scrutiny.

Key Findings

On May 6, 2025, the CPPA announced that it had issued an order requiring the clothing retail company to change its business practices and pay a US$345,178 fine to resolve alleged violations of the CCPA with respect to the retailer’s procedures in responding to consumer privacy requests. This is the CPPA’s second major enforcement announcement based on similar privacy violations in recent months.

Specifically, the CPPA alleged that the clothing retailer had violated the CCPA in the following ways:

Failure to Process Consumer Opt-Out Requests: For a period of 40 days, the company’s privacy portal was not properly configured. As a result, requests from consumers to opt-out of the sale or sharing of their personal information were not processed.

Excessive Information Collection: When consumers submitted privacy-related requests, the company required them to provide more personal information than was necessary to process these requests. This ran counter to the CCPA’s data minimization requirement.

Unnecessary Identity Verification: Consumers were also required to verify their identity, even to opt out of personal information sales or sharing. That step is generally not required under the CCPA unless sensitive information is being accessed or deleted.

The CPPA’s latest enforcement action highlights critical compliance features related to consumer opt-out rights and the handling of personal information, with particular emphasis on the company’s reliance on third-party privacy management tools and its imposition of excessive verification requirements.

Lessons Learned

Below are some key takeaways that can help CCPA-regulated businesses stay out of the CPPA’s crosshairs when it comes to complying with consumer rights requests:

1. Do not simply rely on third-party privacy management tools without ongoing oversight, but instead regularly monitor, test, and validate the effectiveness of these tools to ensure that consumer privacy rights are respected, and that opt-out mechanisms are functioning as required by law. This requires an ongoing interface between both the technical and legal teams to ensure both know what technologies are being implemented as well as the appropriate (and compliant) actions taken with respect to those technologies. According to the CPPA, “[u]sing a consent management platform doesn’t get you off the hook for compliance.”

Illustrative Example

The CPPA found that Snyder installed third-party tracking technologies (such as cookies and pixels) on its website, which collected and shared consumer personal information for analytics and cross-context behavioral advertising. Although the company represented to consumers (through outright statements that this would be the case) that the consumer could opt-out of the sale or sharing of their personal information via a Cookie Preferences Center, a technical misconfiguration rendered the opt-out mechanism inoperable for 40 days in late 2023.

2. Ensure that consumers can successfully exercise their opt-out rights easily, as well as identify and remediate any website design flaws that prevent consumers from exercising their requests via your website or other online interface.

Illustrative Example

In this latest enforcement action, the CPPA alleged that the clothing retailer’s website did not properly configure its opt-out mechanism (e.g., opt-out preference signals, such as the Global Privacy Control, were not processed during the 40-day period noted above), and its consent banner kept disappearing before consumers could submit their requests to opt-out of sale and sharing of personal information, making it impossible for consumers to submit opt-out requests.

3. When responding to a consumer’s CCPA rights request (i) seek to rely, as much as possible, on data you already have in your possession to verify the identity of the consumer making the request, and (ii) do not ask consumers for information that is not needed to process the request.

Illustrative Example

Todd Snyder required consumers to upload pictures of their driver licenses (which is considered sensitive personal information) to verify their identity for any CCPA request submitted. This requirement was imposed regardless of the type of CCPA request, including opt-out requests, which under the CCPA do not require verification. By requiring government identification for all requests, Snyder unlawfully imposed an undue burden on consumers and discouraged them from exercising their privacy rights. In addition, according to the CPPA, even for verifiable consumer requests (where verification is appropriate), the CCPA requires businesses to avoid collecting more information than necessary and to use information already maintained by the business whenever feasible. Accordingly, Snyder’s blanket requirement for government identification exceeded what was necessary and violated these provisions.

4. Do not engage in verification when it is not necessary to do so, and make sure that your policies and procedures are clear as to when a consumer’s request needs verification and when it does not.

Illustrative Example

As discussed above, Todd Snyder required consumers to provide certain information to verify their identity in connection with opt-out requests they submitted, even though the CCPA prohibits businesses from requiring consumers to verify their identity for opt-out requests. Thus, if you are subject to the CCPA and receive an opt-out request, you should not proceed to verifying the identity of the consumer making that request.
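The lessons above can be sketched as a small request-intake routine. This is a minimal illustration under stated assumptions, not a compliance implementation: the `RequestType` enum, the function names, and the choice to treat only access and deletion requests as verifiable are hypothetical simplifications of the points discussed above. The one external detail relied on is that the Global Privacy Control signal is sent by browsers as the HTTP request header `Sec-GPC: 1`.

```python
from enum import Enum


class RequestType(Enum):
    """Hypothetical request categories for illustration."""
    OPT_OUT = "opt_out"
    ACCESS = "access"
    DELETE = "delete"


# Assumption: only access and deletion requests call for identity verification;
# opt-out requests are processed without it, consistent with the discussion above.
VERIFIABLE_REQUESTS = {RequestType.ACCESS, RequestType.DELETE}


def gpc_opt_out_signaled(headers: dict) -> bool:
    """Return True when the request carries the Global Privacy Control header."""
    # HTTP header names are case-insensitive, so normalize before the lookup.
    normalized = {name.lower(): value.strip() for name, value in headers.items()}
    return normalized.get("sec-gpc") == "1"


def needs_verification(request_type: RequestType) -> bool:
    """Opt-out requests are never routed to identity verification."""
    return request_type in VERIFIABLE_REQUESTS


def verification_fields(request_type: RequestType, fields_on_file: set) -> list:
    """Fields to match against data the business already holds.

    Returns an empty list when no verification is needed, and never asks for
    new documents such as government identification.
    """
    if not needs_verification(request_type):
        return []
    return sorted(fields_on_file)


print(gpc_opt_out_signaled({"Sec-GPC": "1"}))
print(needs_verification(RequestType.OPT_OUT))
print(verification_fields(RequestType.DELETE, {"email", "order_number"}))
```

Running checks like these on a schedule against the live site, rather than once at deployment, is one way to catch the kind of silent misconfiguration described in the first illustrative example.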

Conclusion

By understanding the alleged CCPA violations brought against Todd Snyder in this latest enforcement action, CCPA-regulated businesses can help ensure that their processes and mechanisms for managing consumer privacy requests align with the CCPA’s requirements and reduce the likelihood that their practices for handling consumer requests under this state law become subject to regulatory scrutiny.

Oregon Suit Muddies Crypto Regulatory Landscape

On April 18, 2025, the State of Oregon brought a civil enforcement action against Coinbase Global, Inc. (“Coinbase”) for the alleged sale of unregistered securities. In a press release, Oregon Attorney General Dan Rayfield openly acknowledged the action was in response to the United States Securities and Exchange Commission (“SEC”) dropping its own case against Coinbase, noting his belief that “states must fill the enforcement vacuum being left by federal regulators who are giving up under the new administration.” This raises the question: is the federal government’s resetting of its approach to crypto regulation an “enforcement vacuum” or a return to order?

Oregon’s complaint asserts that certain digital assets on Coinbase’s platform are investment contracts and, thus, securities under Oregon law. In order to be offered for sale in Oregon, a security must be registered or fall under an exemption (e.g., “Federal covered securities may be offered and sold in [Oregon] without registration,” subject to administrative conditions). ORS 59.049, 59.055, 59.115. Oregon statutorily defines a security to include “investment contracts” (see ORS 59.015), and its courts use a modified version of a test established by the United States Supreme Court in SEC v. W.J. Howey Co., 328 U.S. 293 (1946), to determine if a particular investment is a security. Oregon claims that Coinbase solicited and participated, or materially aided, in the sale of unregistered crypto securities, resulting in violations of Oregon’s blue sky laws.

The suit is aggressive in that it bumps up against the SEC’s historically exclusive mandate to regulate national exchanges, and for that reason, is vulnerable to legal challenge. In addition, consistent with the state’s press release, it was apparently brought in direct response to the SEC’s dismissal of its Coinbase enforcement action. However, the dismissal of this case and those against other crypto firms are hardly the only things the SEC has done in the crypto space of late. Since President Trump reentered the White House, the SEC has undertaken numerous steps to bring some order to crypto regulation. In recent remarks, newly-minted SEC Chair Paul Atkins stated that the SEC is committed to establishing a “rational, fit-for-purpose framework for crypto assets,” enabling innovation that has been “stifled for the last several years due to market and regulatory uncertainty that unfortunately the SEC has fostered.”

Consistent with this objective, the SEC has established a Crypto Task Force whose purpose is to “help the Commission draw clear regulatory lines, provide realistic paths to registration, craft sensible disclosure frameworks, and deploy enforcement resources judiciously.” The Task Force has hosted industry roundtables addressing key subjects relevant to crypto regulation. At the inaugural roundtable, then-Acting Chair Mark Uyeda remarked that the “approach of using notice-and-comment rulemaking or explaining the Commission’s thought process through releases—rather than through enforcement actions—should have been considered for classifying crypto assets under the federal securities laws.” Topics addressed by roundtables thus far include defining the security status of digital assets, tailoring regulation for crypto trading, know-your-customer considerations for crypto custody, and tokenization. A fifth roundtable is scheduled for June 9 on the subject of “DeFi and the American Spirit.” The roundtables are broadcast live to the public and archived for later viewing through links posted on the SEC’s website.

Moreover, at a conference in March 2025, Uyeda remarked that the SEC would conduct economic analyses that would help the agency “distinguish between approaches that are effective and efficient, versus those that are effective but costly.” He added that the SEC is “required by statute to consider efficiency, competition, and capital formation in its rulemaking. Our Division of Economic and Risk Analysis has developed robust procedures that build on this statutory mandate, recognizing that high-quality economic analysis is an essential part of our rulemaking.” Industry participants might fairly claim that these efforts are far from the SEC “giving up” and leaving an “enforcement vacuum” that states must rush to fill.

Taking the opposite tack of Oregon, several states have yielded in their pursuit of Coinbase. The Coinbase suit that the SEC dismissed was originally brought in June 2023, alongside ten states that initiated actions claiming the company’s administration of its crypto staking program resulted in unregistered securities offerings. According to the SEC’s press release accompanying its complaint, these ten states were part of a task force that coordinated their efforts with the SEC. After the SEC dismissed its case against Coinbase with prejudice in February 2025, five of those ten states—Vermont, Alabama, Illinois, Kentucky and South Carolina—followed suit. Some of those state regulators that withdrew their Coinbase actions have highlighted the SEC’s ongoing rulemaking efforts in their rescission papers. For instance, the Alabama Securities Commission’s Consent Order rescinding its June 6, 2023 Show Cause Order without prejudice cited the new SEC Crypto Task Force’s work and stated that “it would be apt to allow policy makers time to consider regulatory constructs.” The other five states that participated in the task force—California, New Jersey, Maryland, Washington and Wisconsin—have left their enforcement actions against Coinbase in place, at least for the time being.

While states may go their own way when they sense a regulatory gap, restraint may be the better course where active efforts are underway at the federal level to fill that gap with a legal framework informed by stakeholder input. Emergent state actions like Oregon’s present novel complications in the search for regulatory clarity. Given this state of play, both crypto industry participants and investors stand to benefit from governmental patience and coordination as the SEC’s Crypto Task Force performs its work.

UK Data (Use and Access) Bill Status Update

As the draft UK Data (Use and Access) Bill (the “DUA Bill”) reaches its final stages, the House of Commons and the House of Lords are still debating several key issues. On May 14, 2025, the House of Commons received a program motion, urging it to deliberate on the amendments proposed by the House of Lords on May 12, 2025. The latest amendments introduced by the House of Lords include:

Scientific Data: Limiting the scope of the ‘scientific data’ provision by setting a higher standard for the reasonableness test such that “scientific research must be conducted according to appropriate ethical, legal and professional frameworks, obligations and standards.” This amendment is contrary to the position taken by the House of Commons, which proposed expanding the scope of the ‘scientific data’ provision by removing the requirement for the processing of ‘scientific data’ to be conducted in the ‘public interest.’

AI Models: Introducing transparency requirements for business data used in relation to AI models. The amendment would require developers of AI models to publish all information used in the pre-training, training, fine-tuning and retrieval-augmented generation of the AI model, and to provide a mechanism for copyright owners to identify any individual works they own that may have been used during such processes. The amendment also introduces transparency obligations in respect of “bots,” including the requirement to disclose information on the (1) name of the bot, (2) responsible legal entity for the bot, and (3) specific purpose for which each bot is used.

Sex Data: Introducing requirements for ‘sex data’ to be collected in the context of digital verification services.

The House of Commons will now consider such amendments. With the DUA Bill’s progress accelerating, it is anticipated that the DUA Bill will soon be finalized.

The TCPA Landscape in 2025: Key Developments and Compliance Priorities

The Telephone Consumer Protection Act (TCPA) continues to be a major source of litigation risk for businesses engaged in outbound marketing. In the first quarter of 2025, litigation under the TCPA surged dramatically, with 507 class action lawsuits filed — more than double the volume compared to the same period in 2024. This steep rise reflects shifting enforcement patterns and a growing emphasis on consumer communications practices. Companies should be aware of several emerging trends and evolving interpretations that are shaping the compliance environment.

TCPA Class Action Trends

In the first quarter of 2025, 507 TCPA class actions were filed, representing a 112% increase compared to the same period in 2024. April filings also reflected continued growth, indicating a sustained trend.

Key statistics:

Approximately 80% of current TCPA lawsuits are class actions.

By contrast, only 2%-5% of lawsuits under other consumer protection statutes, such as the Fair Debt Collection Practices Act (FDCPA) or the Fair Credit Reporting Act (FCRA), are filed as class actions.

This trend highlights the unique procedural and financial exposure associated with TCPA compliance.

Time-of-Day Allegations on the Rise

There has been an uptick in lawsuits alleging that companies are contacting consumers outside of the TCPA’s permitted calling hours — before 8 a.m. or after 9 p.m. local time. In March 2025 alone, a South Florida firm filed over 100 lawsuits alleging violations of these timing restrictions, many of which involved text messages.

Under the TCPA, telephone solicitations are not permitted during restricted hours, unless:

The consumer has given prior express permission;

There is an established business relationship; or

The call is made by or on behalf of a tax-exempt nonprofit organization.

It is currently unclear whether these exemptions definitively apply to time-of-day violations. A petition filed with the FCC in March 2025 seeks clarification on whether prior express consent precludes liability for messages sent during restricted hours. The FCC accepted the petition and opened a public comment period that closed in April.
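The timing restriction itself is mechanical enough to sketch in code. The following Python sketch is an illustration only, not a description of how any particular dialing or texting platform works. The function name is hypothetical, and treating exactly 8:00 a.m. and exactly 9:00 p.m. as inside the window is an assumption. It also ignores the consent and business-relationship exemptions, whose application to time-of-day claims remains unsettled.

```python
from datetime import datetime, time, timedelta, timezone, tzinfo

# Window described in the text: no solicitations before 8 a.m. or after 9 p.m.,
# measured in the recipient's local time.
EARLIEST = time(8, 0)
LATEST = time(21, 0)


def is_within_calling_hours(send_time: datetime, recipient_tz: tzinfo) -> bool:
    """Return True if send_time falls between 8 a.m. and 9 p.m. in recipient_tz.

    Hypothetical helper for illustration. Assumes the boundary minutes
    themselves count as permitted.
    """
    if send_time.tzinfo is None:
        raise ValueError("send_time must be timezone-aware")
    local = send_time.astimezone(recipient_tz)
    return EARLIEST <= local.time() <= LATEST


# Usage: the same instant can be permitted on one coast and not the other.
# Fixed UTC offsets are used here for simplicity; a production system would
# more likely pass a zoneinfo.ZoneInfo object so daylight saving is handled.
eastern_daylight = timezone(timedelta(hours=-4))
pacific_daylight = timezone(timedelta(hours=-7))
send_at = datetime(2025, 3, 15, 12, 30, tzinfo=timezone.utc)

print(is_within_calling_hours(send_at, eastern_daylight))  # True  (8:30 a.m. local)
print(is_within_calling_hours(send_at, pacific_daylight))  # False (5:30 a.m. local)
```

The main design point is that the check depends on where the recipient is, not where the sender is, so a system needs a reliable way to determine the recipient's time zone before applying it.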

Drivers of Increased Litigation

Several factors appear to be contributing to the rise in TCPA filings:

An increase in plaintiff firm activity and case volume;

Ongoing confusion regarding the interpretation of revocation rules; and

Continued complaints regarding telemarketing practices, including unwanted robocalls and text messages.

These dynamics reflect a broader trend of regulatory and private enforcement in the consumer protection space.

Compliance Considerations

Businesses should take steps to ensure their outbound communication practices are aligned with current TCPA requirements. This includes:

Documenting consumer consent clearly at the point of lead capture;

Ensuring systems adhere to permissible calling and texting times;

Reviewing policies and procedures for revocation of consent; and

Seeking guidance from counsel with experience in consumer protection laws.

Conclusion

The volume and nature of TCPA litigation in 2025 underscore the need for proactive compliance. Companies should treat consumer communication compliance as a core operational issue. Regular policy reviews, up-to-date systems, and informed legal support are essential to mitigating risk in this evolving area of law.

Pennsylvania PUC Reviews Data Center Impacts Amid New Energy Plan

Key Takeaways:

During a recent Pennsylvania Public Utility Commission (PUC) hearing to evaluate how the rise in data centers is impacting energy demand, grid reliability and utility regulation, stakeholders emphasized fair cost allocation for infrastructure, opposing special treatment for data centers and favoring standard tariff processes.

Primary concerns include infrastructure investment and cost allocation, generation and reliability issues, and tariff design.

Six proposed bills in connection with Governor Shapiro’s “Lightning Plan” were unveiled on the same day as the PUC hearing, aimed at modernizing Pennsylvania’s energy landscape through a carbon cap-and-invest program, expanded clean energy targets, streamlined project approvals, infrastructure tax incentives, support for rural and low-income communities, and enhanced energy efficiency rebates.

As data centers surge across Pennsylvania, the PUC is taking a closer look at their impact on energy systems and regulatory oversight. At the same time, Governor Shapiro’s Lightning Plan proposes sweeping changes to modernize the Commonwealth’s energy systems, setting the stage for potential shifts in utility law and oversight. This update explores the legal context, policy drivers and impacts that may emerge from the intersection of infrastructure growth and state energy policy.

On April 24, 2025, the PUC convened an en banc hearing to address the growing impact of data centers and other large electricity consumers on the state’s power grid. In the Motion calling for the hearing, the Chair recognized what has been a running theme across the nation for large load consumers and developers looking to attract data centers — uncertainty regarding both the interconnection timeline and the costs these users will face to procure power in the Commonwealth.

The hearing brought together stakeholders from tech, public utility and consumer advocacy groups to discuss the opportunities presented by the rapid expansion of energy-intensive facilities and the challenges posed by the new demand on the grid. The testimony bore out three primary themes: (1) generation and reliability concerns, (2) infrastructure investment and cost allocation and (3) tariff design.

Infrastructure and Cost Allocation

Fair cost allocation was articulated as a priority by utility and data center panelists alike. The utilities explained in detail how their large load interconnection process works, including how infrastructure investment costs specific to large load customers are allocated. Panelists encouraged the PUC to avoid the creation of a data center customer class and instead rely on cost-of-service studies and rate case proceedings to ensure transparency and proper allocation of costs to data center customers. This would mean that data centers would be customers under tariffs and not under special contracts, which are often filed for commission approval on a confidential basis.

Tariff Design

The panelists expressed differing views around a model tariff versus a policy statement. Some panelists advocated for a policy statement citing concerns around changes in the market and the potential of a model tariff that is too restrictive or cannot adapt to a changing environment. Others, particularly the statutory advocates, believe a model tariff will level the playing field for utilities serving data centers and not force the utilities to compete against each other in attracting them.

Commissioner Zerfuss noted at the end of the utility panel that she saw no difference between a model tariff and a policy statement, as both would be considered recommendations and not mandates.

Generation and Reliability

With the anticipated surge in electricity demand, the PUC acknowledged the strain on the existing grid infrastructure. The PUC emphasized that simply building more generation or transmission facilities may not suffice, advocating for a diversified approach that includes load management and demand response strategies. Panelists discussed the concept of a “bring your own generation” (BYOG) model, where data centers would provide their own power generation infrastructure, such as solar panels or wind turbines, to support their primary generation needs.

From a regulatory compliance perspective, BYOG could convert a data center to a utility, thus obligating compliance with a host of utility regulations. While some data centers are already navigating complex FERC guidelines resulting from recent FERC orders allowing them to monetize their on-site generation, a BYOG data center could also be subject to grid interconnection laws, energy trading restrictions and local zoning laws around where on-site generation can be located. It remains unclear whether BYOG would slow the development of data centers in the Commonwealth given the potential regulatory and legal obstacles that the data centers may face. There is a possibility, however, that the legal framework may change because of Governor Shapiro’s “Lightning Plan.”

The Lightning Plan

On the day of the PUC hearing, Governor Josh Shapiro’s Lightning Plan was introduced into the General Assembly through six pieces of legislation.

The Pennsylvania Climate Emissions Reduction Act (PACER) (HB 503) introduces a cap-and-invest program requiring power plants to pay for their carbon emissions, with 70 percent of the revenues funneled back to consumers through utility bill rebates and the rest funding low-income assistance and clean energy initiatives.

The Pennsylvania Reliable Energy Sustainability Standard (PRESS) (HB 501) aims to increase the Commonwealth’s clean energy requirement from 8 percent to 35 percent by 2035.

The Pennsylvania Reliable Energy Siting and Electric Transition (RESET) Board (HB 502) would expedite energy project approvals by streamlining the siting and permitting process in the Commonwealth, which is one of only 12 states without a state siting and permitting entity for such projects.

Improvements to the EDGE Tax Credit (HB 500) would add tax incentive credits for investment in energy infrastructure, including up to $100 million annually for new power plants over three years.

The community energy bill (HB 504) would support rural communities, farmers and low-income residents by promoting shared energy resources — such as methane digesters on farms — to reduce energy costs.

Modernizing energy efficiency in the Commonwealth (HB 505) through an amendment to Act 129 would provide more money to consumers in the form of rebates and incentives for buying energy efficient appliances.

Data Transactions: DOJ’s Final Rule’s Implications for Academic Medical Centers with Clinical Research Programs

The Department of Justice (DOJ) published its Final Rule to implement Executive Order 14117 on January 8, 2025, with a correcting amendment issued April 18, 2025. Executive Order 14117, issued on February 28, 2024, titled “Preventing Access to Americans’ Bulk Sensitive Personal Data and United States Government-Related Data by Countries of Concern,” instructed the Attorney General to create regulations that ban or limit U.S. persons from participating in transactions involving property in which a foreign country or its nationals have an interest. Transactions are banned or limited if they involve U.S. government-related data or bulk sensitive personal data (as defined by the final implementing rules), fall into categories deemed by the Attorney General to pose a national security risk (with such security risk arising from potential access to data by identified countries of concern or related individuals), and meet additional criteria outlined in the Executive Order.

The Final Rule outlines categories of transactions that are either banned or limited; designates specific countries and types of individuals or entities with whom transactions involving government-related or bulk U.S. sensitive personal data are restricted; creates a system for granting, modifying, or revoking licenses for otherwise restricted activities and for issuing advisory opinions; and sets transaction recordkeeping and reporting requirements to support the DOJ’s investigations, enforcement, and regulatory actions in relation to the Executive Order.

Academic Medical Centers (AMCs) and similar entities engaged in clinical research and international collaborations need to be aware of and determine the applicability of the regulatory requirements imposed by the Final Rule. Research partnerships involving biometric identifiers, personal health information, or genomic data may be deemed restricted or prohibited transactions if the partnerships include entities from designated countries of concern.

Summary

The Final Rule is aimed at preventing certain U.S. foreign adversaries — including China, Russia, Iran, North Korea, Cuba, and Venezuela — from accessing sensitive U.S. personal data and government-related information.

Key Definitions. The Final Rule authorizes the DOJ to regulate and enforce restrictions on data transactions with designated “Countries of Concern” and “Covered Persons.”

“Country of Concern” is defined to mean:

any foreign government that, as determined by the Attorney General with the concurrence of the Secretary of State and the Secretary of Commerce, (1) has engaged in a long-term pattern or serious instances of conduct significantly adverse to the national security of the United States or security and safety of United States persons, and (2) poses a significant risk of exploiting government-related data or bulk U.S. sensitive personal data to the detriment of the national security of the United States or security and safety of U.S. persons.

“Covered Person” is defined to include: (1) foreign entities that (a) are fifty percent or more owned, directly or indirectly, by Countries of Concern or other Covered Persons; or (b) are organized under the law of, or have their principal place of business in, a Country of Concern; (2) foreign entities that are fifty percent or more owned, directly or indirectly, by Covered Persons, either individuals or entities; (3) foreign individuals who are non-U.S. residents working as employees or contractors of a Country of Concern; (4) foreign individuals primarily residing in Countries of Concern; and (5) other entities or individuals as reasonably determined by the Attorney General based on certain criteria.

Categories of Covered Data. The Final Rule targets eight categories of “Covered Data,” including biometric identifiers, genomic data, health and financial data, precise geolocation information, and personal identifiers that can be linked to other sensitive data. It also includes certain government-related information, such as data tied to U.S. government personnel or the geolocation of sensitive facilities. Notably, the regulations apply regardless of data processing volume when government-related information is involved.

Primary Types of Restricted Transactions. The DOJ identifies three primary types of restricted transactions: employment, investment, and vendor agreements. U.S. businesses must ensure foreign employees, investors, and service providers, especially those linked to Countries of Concern, do not gain access to Covered Data unless strict security protocols are met. This affects a wide range of commercial activities, from hiring and corporate deals to cloud services and software subscriptions, and likely impacts AMCs engaging in clinical research when data is shared with certain employees, research sponsors, investors and service providers. Prohibitions and restrictions of the Final Rule, however, only apply to Covered Data Transactions with a Country of Concern or Covered Person that involve access by a Country of Concern or Covered Person to government-related data or bulk U.S. sensitive personal data. The Final Rule does not regulate transactions that do not implicate access to government-related data or bulk U.S. sensitive personal data by a Country of Concern or a Covered Person.

Prohibited Transactions. Notably, under the Final Rule certain transactions are absolutely prohibited, such as those involving the sale or licensing of Covered Data to foreign entities in data brokerage arrangements, or those involving biometric data or biospecimens.

Penalties for Non-Compliance. Violations of the Final Rule carry significant fines and penalties. Civil fines can reach the greater of US$368,136 or twice the transaction amount. Willful violations may result in criminal penalties of up to US$1 million and up to 20 years in prison.
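The civil fine ceiling described above is a simple "greater of" calculation. The sketch below, in Python, is only an illustration of that arithmetic. The function name is hypothetical, the dollar floor is taken from the text and may change over time, and the actual penalty in any case is for the enforcing authorities to determine.

```python
# Floor quoted in the text above, in US dollars. Assumed fixed for this sketch.
CIVIL_PENALTY_FLOOR_USD = 368_136


def max_civil_penalty_usd(transaction_amount_usd: float) -> float:
    """Return the greater of the dollar floor or twice the transaction amount.

    Hypothetical helper illustrating the "greater of" rule described in the
    text; it does not model criminal penalties or any mitigating factors.
    """
    if transaction_amount_usd < 0:
        raise ValueError("transaction amount cannot be negative")
    return max(CIVIL_PENALTY_FLOOR_USD, 2 * transaction_amount_usd)


# Usage: below a transaction value of US$184,068 the floor governs;
# above it, the doubled transaction amount governs.
print(max_civil_penalty_usd(100_000))    # 368136
print(max_civil_penalty_usd(1_000_000))  # 2000000
```

For exposure estimates, this means the ceiling scales with deal size once a transaction exceeds half the floor amount.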

The Bottom Line for Clinical Research. To comply with the Final Rule, AMCs must engage in rigorous and thorough diligence on proposed and existing research activities, collaborations and operations, including on their partners, clients, employees/contractors, and data recipients, to determine if a proposed or existing transaction falls within the ambit of the Final Rule. The scope and penalties for violations of and non-compliance with the Final Rule are a clear indicator that a process to determine and ensure compliance with the Final Rule will be critical for AMCs, and businesses across industries, that engage in activities and transactions involving personal or government-related data.

Implications for Academic Medical Centers with Clinical Research Programs

The Final Rule adds a new layer of regulatory compliance complexity for AMCs and similar entities engaged in clinical research and international collaborations.

Research studies and activities, including research collaborations and partnerships involving biometric identifiers, personal health information or genomic data, may be deemed restricted or prohibited transactions if the partnerships include entities from designated Countries of Concern and/or Covered Persons.

Existing and proposed multi-national studies and data-sharing initiatives must be reviewed to determine if the Final Rule is applicable to the study or activity, and if so, to ensure compliance.

AMCs must also ensure that vendors, including cloud and AI service providers, are not affiliated with Countries of Concern and that all data processing activities meet stringent new security and compliance standards. As noted above, ensuring compliance with the Final Rule will necessitate a thorough review of the AMC’s vendor contracts.

Further, the Final Rule necessitates a reassessment by AMCs of their data-sharing policies and multi-site protocols, and will likely require the incorporation of national security-focused compliance clauses in certain data sharing agreements (such as data use agreements) and the enhancement of institutional data governance frameworks. Those frameworks should be designed to avoid and mitigate legal and regulatory exposure and to ensure that the institution is able to maintain eligibility for receipt of federal funding.

Next Steps

This Final Rule prescribes significant categorical rules that prevent U.S. persons from providing government-related data or U.S. citizens’ bulk, sensitive personal data, including through commercial data-brokerage transactions, to Countries of Concern or Covered Persons. Compliance with the Final Rule specifically necessitates that AMCs and institutions implement security measures when engaging in investment transactions, employment agreements, and vendor contracts that involve either government-related data or large-scale collections of sensitive personal data, such as health records, biometric identifiers, or financial information.

The requirements of the Final Rule are intended to prevent foreign adversaries from indirectly accessing this data through commercial relationships. By identifying these specific transaction types, the Final Rule seeks to address perceived national security gaps and provides clear, enforceable standards that define when and how data-related dealings with foreign actors are restricted.

Failure to comply with these new requirements could result in fines and penalties, regulatory scrutiny, loss of federal funding, and enforcement actions, making compliance with the Final Rule, where it applies to a transaction or activity, a critical priority for AMCs and institutions handling large volumes of sensitive personal data.