WAVE OF LITIGATION ENDED? Are the TCPA’s Quiet Hours Rules Dead After Friday’s Supreme Court Ruling?

As TCPAWorld.com readers know, 2025 has seen a massive rush of TCPA class litigation. Indeed, such filings are up more than 100 percent from last year, which was already the highest-volume year in history.

The biggest single category of TCPA class actions was the so-called quiet hours litigation. This was a massive body of cases brought, in particular, by one firm: Jibreal Hindi’s shop in South Florida.

The theory was simple enough: the TCPA’s CFR provisions mandate that “telephone solicitations” cannot be sent before 8 am or after 9 pm. So just find a bunch of people who received texts or calls at 7:58 am or 9:01 pm wherever they happened to be that day, and file lawsuits.

This is very easy to accomplish. Folks travel and have cell phone numbers with different area codes. Any vacation traveler can easily demonstrate calls or texts sent too early or too late.
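The mechanics are easy to see in code. Below is a minimal Python sketch, assuming the rule is measured against the called party’s actual location rather than the time zone of the number’s area code. The function name and the fixed UTC offsets are illustrative assumptions; a real system would need a full time-zone database with daylight saving rules.

```python
from datetime import datetime, time, timedelta, timezone

# Fixed summer offsets, used only for illustration.
EASTERN = timezone(timedelta(hours=-4), "EDT")
PACIFIC = timezone(timedelta(hours=-7), "PDT")

EARLIEST = time(8, 0)   # no solicitations before 8 a.m.
LATEST = time(21, 0)    # ...or after 9 p.m.


def violates_quiet_hours(sent_at: datetime, called_party_tz: timezone) -> bool:
    """True if a timezone-aware send time falls outside 8 a.m. to 9 p.m. local time."""
    local = sent_at.astimezone(called_party_tz).time()
    return local < EARLIEST or local > LATEST


# A text sent at 8:30 a.m. Eastern to a number with an East Coast area code...
sent = datetime(2025, 6, 20, 8, 30, tzinfo=EASTERN)
print(violates_quiet_hours(sent, EASTERN))  # False: 8:30 a.m. at the area code
print(violates_quiet_hours(sent, PACIFIC))  # True: 5:30 a.m. where the traveler is
```

The same send time is compliant or not depending solely on where the recipient happens to be, which the sender generally has no way of knowing.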

These cases are mostly meritless on their face. Obviously, trapping callers based on a called party’s unknown travel plans is not what the FCC had in mind when it promulgated the regs. And regardless, the term “telephone solicitation” is defined to exclude calls made with consent, so the vast majority of “text club” submissions that result in this litigation are outside the scope of the statute anyway.

But this huge volume of cases wasn’t filed with the plan to go all the way. Exactly the opposite. These cases were filed with the plan to settle and settle quickly. And the vast majority of them have.

So this entire body of litigation was essentially nothing more than a shakedown effort, and hundreds of businesses were caught in its wake.

As I wrote on Friday, the Supreme Court’s ruling in McKesson changes just about everything under the TCPA. Given how heavily the wave of quiet hours litigation has hit businesses small and large alike, I figured I would start with the ruling’s impact on the quiet hours regulations.

Let me start with this: if you have been hit with a quiet hours suit from Hindi, DO NOT SETTLE IT. A complete defense is now available. (Not legal advice, just friendly advice.)

As a reminder, McKesson ended district court deference to FCC agency action under the Hobbs Act. There are some open questions as to whether the ruling applies to both declaratory rulings and CFR provisions (McKesson looked at a declaratory ruling, but the language and analysis used were broad enough to cover both), but district courts plainly have much more power to set aside or refuse to apply FCC rules than ever before.

Under the APA, courts are now empowered to review and invalidate CFR provisions, freed up by both McKesson’s destruction of Hobbs Act deference and Loper Bright’s destruction of Chevron.

This power is likely not complete. Where Congress specifically delegated authority to an agency to expressly promulgate rules, the appropriate scope of review will likely be limited to whether the agency exceeded the scope of that delegation (a question yet to be determined). But where an agency acted on its own implied authority in fashioning a CFR provision, it seems clear the district courts can now set it aside.

So let’s look at the quiet hours provisions.

The quiet hours provisions of the TCPA are not actually found in the TCPA. They are found exclusively in the CFR, and that’s the point.

The rule reads:

No person or entity shall initiate any telephone solicitation to:

(1) Any residential telephone subscriber before the hour of 8 a.m. or after 9 p.m. (local time at the called party’s location)

The language used here causes a host of problems. But that is not the point for now. The point is: must a court apply this FCC-generated rule to begin with?

The answer, following McKesson, may very well be no.

This reg was promulgated by the FCC under authority delegated to it by Congress in 47 USC 227(c). That provision lays out a lengthy list of considerations and requirements the Commission must address in implementing and developing what would become the National Do Not Call list and related rules.

What the TCPA does not do is give the FCC authority to establish quiet hours for marketing calls.

Indeed, the TCPA is entirely silent on rules for marketing other than marketing efforts made to numbers on the DNC list and marketing calls made using regulated technology.

So this rule was promulgated through the FCC’s implied authority to fashion rules to implement the TCPA. In other words, Congress did not tell it to create these rules; it created them for itself.

This is precisely the sort of action the Supreme Court has just held is subject to review by district courts. And since the FCC just made these rules up with no input from Congress, you can expect many courts to be willing to strike the quiet hours rule down.

Everything Is Bigger in Texas: SB140 Passed, and Texas’ New Mini-TCPA Takes Effect September 1, 2025! Bringing a New Private Right of Action, a Broader Telephone Solicitation Definition, and a Right to Repeat Claims

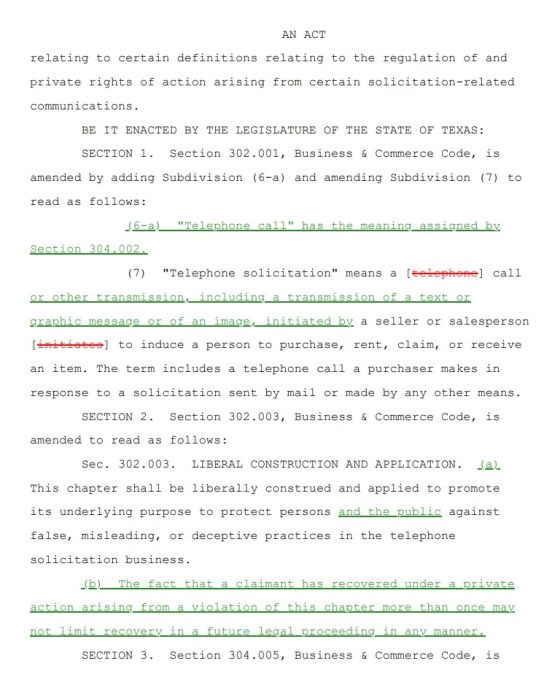

On June 20, 2025, Governor Abbott signed Texas SB140 into law. As TCPAWorld previously reported, the Texas-sized bill drastically amends the Texas mini-TCPA statute, the Texas Business & Commerce Code (“TBCC”): it creates a private right of action under the Deceptive Trade Practices Act that provides triple-stacked liability, extends the definition of telephone solicitation to cover text messages, and opens the door to serial lawsuits.

The new law will take effect on September 1, 2025.

So, for those making telemarketing calls or sending text messages in the Lone Star State, here’s what you need to know right now:

Private Right of Action Automatically Afforded Under Deceptive Trade Practices Act (“DTPA”): The new Texas law creates a NEW private right of action under the DTPA for telemarketing violations, such as noncompliance with call-hour restrictions, failure to register, ignoring opt-out requests, and using prohibited automated dialing or announcing devices (“ADAD”). Under the DTPA, consumers can seek treble damages, mental anguish awards, and attorney’s fees, substantially increasing the potential exposure for a Texas telemarketing violation. The bill explicitly states that these chapters should be “liberally construed” to protect folks against false, misleading, or deceptive practices in telephone solicitation and telemarketing.

Expanded Definition of “Telephone Call” and “Telephone Solicitation”: A telemarketing call is no longer limited to a voice call. It now includes text messages, images, graphic messages, or other electronic transmissions initiated by a seller to induce a person to purchase, rent, claim, or receive an item. So the ambiguity in Texas is over: SB140 brings SMS, MMS, and other visual solicitations within the scope of the TBCC.

Private Right of Action for Repeat Claims: The new law clarifies that multiple legal recoveries for the same violation will not limit future recovery. Expect to see even more aggressive, serial litigants and filings in Texas!


Now, the TBCC is already a hotly litigated statute in Texas and is particularly appealing to serial plaintiffs, especially because of Texas’ registration requirements with a $5,000 per violation penalty. If you are operating in or contacting consumers in Texas, it’s critical to keep in mind:

Private Right of Action Under TBCC: Currently, under Section 304.052 of the TBCC, consumers do have a private right of action for violations of Texas’s DNC and ADAD regulations, but this right is limited by procedural requirements. Before filing a lawsuit, a consumer must file a complaint with the Texas Public Utility Commission, the Texas Attorney General, or any state agency. If the agency subsequently initiates its own civil enforcement action in response, the consumer cannot pursue their claim for damages. If the consumer fails to file such a complaint, or cannot prove that the agency declined to act, their private action under the TBCC is barred. But with the NEW private right of action under the DTPA, it appears consumers can bypass those procedural barriers and bring claims directly for telemarketing violations (including DNC violations, ADAD use, and text messages) without first going through a state agency. Expect to see a surge in private lawsuits in Texas, now with treble damages, attorney’s fees, and mental anguish claims on the table.

Triple-Stacked Liability? TCPA + Mini-TCPA + DTPA: Texas already allows for what appears to be dual recovery under both the TCPA and TBCC. While real TCPAWorld dwellers understand that double recovery for the same violation under both state and federal law should be impermissible (see the Czar’s masterful win in Masters v. Wells Fargo, 12-CA-376-SS (W.D. Tex. Jul. 11, 2013)), some Texas courts have erroneously held that a violation of the TCPA constitutes an automatic violation of the TBCC. That means a TCPA violation in Texas can cost you not $500.00 but $1,000.00. Automatically. And every time. And now layer potential DTPA damages on top!
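Because the doubling applies per violation, it compounds quickly across a class. Below is a back-of-the-envelope Python sketch using the $500 and $1,000 figures above, assuming each call or text counts as a single violation. DTPA treble damages, mental anguish awards, and fees are deliberately left out because their size depends on the facts of each case; this is an illustration, not a damages calculator.

```python
TCPA_PER_VIOLATION = 500  # TCPA figure cited in the post
TBCC_PER_VIOLATION = 500  # assumption: implied by the post's $1,000 combined figure


def stacked_exposure(violations: int, tbcc_stacks: bool = True) -> int:
    """Statutory exposure in dollars, excluding any DTPA recovery (not modeled)."""
    per_violation = TCPA_PER_VIOLATION
    if tbcc_stacks:
        # Mirrors the reading some Texas courts have adopted; the post
        # argues this double recovery should be impermissible.
        per_violation += TBCC_PER_VIOLATION
    return violations * per_violation


print(stacked_exposure(1))         # 1000
print(stacked_exposure(1, False))  # 500
print(stacked_exposure(250))       # 250000
```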

Texas Registration Requirements: Section 302.101 of the TBCC requires sellers to hold a registration certificate if making telephone solicitations from Texas or to a purchaser in Texas. Violations of this provision will cost you $5,000 a pop; note, however, that the registration requirement has a bunch of exemptions folks can leverage.

Everything is indeed bigger in Texas.

Texas is supposed to be famously pro-business, but these new amendments to its mini-TCPA law massively expand the reach of the statute and open the door to a rash of new lawsuits against unsuspecting businesses big and small. This is a huge gift to plaintiffs’ attorneys who may have had the doors to federal courthouses closed on similar claims.

Managing the “Infinite Workday”: Employer Responsibilities in a 24/7 Work Culture

Remember when the workday ended at 5:00 pm?

In today’s always-on world, the “infinite workday” has quietly taken over, creeping into dinners, weekends, and even that quaint concept known as a “vacation.” With smartphones in every pocket and teams spread across multiple time zones, work now follows us everywhere. Microsoft’s 2025 Work Trend Index confirms what many leaders already sense: work is no longer confined by time or place. It’s always on.

The data is striking. By 6:00 a.m., 40% of workers are already checking email. During core hours, employees are interrupted every two minutes by meetings, messages, and alerts. And the day doesn’t end at dinner: nearly a third of workers are back in their inboxes by 10:00 p.m. Weekend work is also on the rise, with nearly 20% of employees checking email before noon on Saturdays and Sundays. While the flexibility to work anytime, anywhere can be empowering, it also brings legal, operational, and cultural challenges that employers ignore at their peril.

The Rise of the “Right to Disconnect”

The infinite workday isn’t just stretching schedules; it’s stretching people thin. Microsoft’s data shows that one in three employees say the pace of work has made it impossible to keep up. Half of employees and leaders describe their work as chaotic and fragmented.

A major driver of this strain is the overwhelming volume of digital communication. According to the Index, on average, employees receive more than 100 emails and 150 Teams messages every workday. In fact, some exasperated workers have declared “email bankruptcy,” deleting their entire inbox of unanswered emails in an effort to regain control. It’s a clear sign that employees are struggling to keep up with the volume and velocity of communication.

In response, governments around the world are stepping in with “right to disconnect” laws designed to protect employees from the expectation of 24/7 availability. Countries including Argentina, Australia, Belgium, Chile, France, Slovenia, and Spain have enacted laws limiting after-hours communications. Our neighbors in Ontario, Canada, mandate written disconnect-from-work policies for employers with 25 or more employees.

While the U.S. has no such law yet, the conversation is gaining traction. As we reported last year, California proposed but ultimately did not enact a right-to-disconnect law in 2024, and New Jersey introduced similar legislation that remains under review.

Legal Risks for Employers

Even in the absence of formal legislation, the risks of an always-on culture are real and growing:

Wage and Hour Violations. Non-exempt (“hourly”) employees working off the clock, even voluntarily, can trigger wage claims, class actions, and penalties under the Fair Labor Standards Act and comparable state laws.

Mental Health and Burnout. Constant connectivity can lead to stress-related claims under the Americans with Disabilities Act, the Family and Medical Leave Act, and comparable state laws, as well as under workers’ compensation rules.

Data Privacy and Security. After-hours work on personal or unsecured devices increases the risk of data breaches and non-compliance with laws such as the California Privacy Rights Act and the European Union’s General Data Protection Regulation.

Discrimination and Equity Concerns. An always-on culture may disproportionately impact caregivers, parents, and employees in different time zones, raising potential claims of disparate impact or failure to accommodate.

Best Practices

To stay ahead of legal and cultural shifts, employers should consider the following steps:

Establish Clear Boundaries. Define expectations for work hours and after-hours communication in policies and handbooks, especially for non-exempt employees.

Train Managers. Educate leaders on the legal risks and model healthy behavior around availability and responsiveness.

Audit Timekeeping Systems. Ensure all work, especially by non-exempt employees, is accurately tracked and compensated.

Encourage Disconnecting. Promote a culture that values rest and recovery, and discourage after-hours messages unless truly necessary.
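A timekeeping audit can start with something as simple as comparing activity timestamps against scheduled hours. Below is a minimal Python sketch, assuming a hypothetical 9-to-5 weekday schedule and an activity log exported from email or messaging systems. The record format and names are illustrative, and a flagged entry is only a prompt to check whether that time was recorded and paid, not proof of a violation.

```python
from dataclasses import dataclass
from datetime import datetime, time

SHIFT_START = time(9, 0)   # hypothetical scheduled hours
SHIFT_END = time(17, 0)


@dataclass
class Activity:
    employee: str
    non_exempt: bool
    at: datetime  # local time of an email, message, or login


def after_hours_activity(events: list[Activity]) -> list[str]:
    """Names of non-exempt employees active on weekends or outside the shift."""
    flagged = []
    for e in events:
        weekend = e.at.weekday() >= 5  # Saturday=5, Sunday=6
        outside_shift = not (SHIFT_START <= e.at.time() < SHIFT_END)
        if e.non_exempt and (weekend or outside_shift):
            flagged.append(e.employee)
    return flagged


events = [
    Activity("Ana", True, datetime(2025, 6, 20, 6, 0)),    # Friday, 6:00 a.m.
    Activity("Ben", True, datetime(2025, 6, 20, 10, 0)),   # Friday, within shift
    Activity("Cal", False, datetime(2025, 6, 20, 22, 0)),  # exempt employee
    Activity("Dee", True, datetime(2025, 6, 21, 11, 0)),   # Saturday
]
print(after_hours_activity(events))  # ['Ana', 'Dee']
```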

Final Thoughts

The infinite workday is here, but it doesn’t have to mean infinite liability. By understanding the evolving legal landscape and implementing thoughtful, proactive policies, employers can protect both their workforce and their business.

Commerce Department’s New BEAD Reform Notice Upends Structure of Program

This is the first of several planned blogs on the recently released NTIA BEAD Restructuring Policy Notice (“Notice”).

In early March, Department of Commerce Secretary Howard Lutnick paused all funding under the $42.5 billion BEAD program pending a “rigorous review” by the new administration. At that time, the Secretary announced his intention to “rip out” many of the Biden Administration’s requirements and “revamp” the BEAD program to take a “tech-neutral approach.”

Now, three months later, true to his word, Secretary Lutnick has released NTIA’s Restructuring Notice, revamping the underlying structure of the prior program toward a new “tech-neutral” approach that gives primacy to low-cost solutions rather than long-term value or infrastructure investment. It is widely anticipated that the restructured program will, in many instances, favor low-earth orbit (“LEO”) satellite as the lowest-cost solution. The Notice also removes several Biden-era grant compliance requirements.

The Notice requires all states and territories to rescind preliminary awards (including those in states already approved under the Biden administration, such as Delaware, Louisiana, and Nevada) and conduct a new selection round prioritizing sub-recipients who submit lowest-cost bids in accordance with NTIA’s new “Benefit of the Bargain” scoring rubric. States and territories will have 90 days to complete this process and submit a Final Proposal that reflects the results of the “Benefit of the Bargain” round. The Notice states that NTIA will complete its review of each Final Proposal within 90 days of submission. According to Secretary Lutnick, the “goal” is to get BEAD funding flowing by the end of the year.

Benefit of the Bargain Round Scoring

The Notice redefines “Priority Broadband Project” to remove NTIA’s prior preference for end-to-end fiber solutions. The term will now cover any technology, including LEO and unlicensed fixed wireless broadband (“ULFW”), that meets the minimum requirements: 100 Mbps download and 20 Mbps upload speeds, 100 ms latency, and scalability. In other words, all broadband projects are now “Priority Broadband Projects,” except those that cannot meet the speed and latency requirements or satisfy the vague scalability standards outlined in the Notice.
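The technology-neutral test can be expressed as a simple predicate. Below is a minimal Python sketch, assuming the thresholds are inclusive (at least 100/20 Mbps and latency at or below 100 ms) and reducing the Notice’s vague scalability standard to a yes/no flag. The class, field names, and both example proposals are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    name: str
    download_mbps: float
    upload_mbps: float
    latency_ms: float
    scalable: bool  # the Notice's scalability standard, reduced to a yes/no flag


def is_priority_broadband_project(p: Proposal) -> bool:
    """Any technology qualifies if it clears every threshold."""
    return (
        p.download_mbps >= 100
        and p.upload_mbps >= 20
        and p.latency_ms <= 100
        and p.scalable
    )


# Hypothetical proposals; the underlying technology plays no role in the test.
project_a = Proposal("Project A", 100, 20, 100, scalable=True)
project_b = Proposal("Project B", 50, 10, 30, scalable=True)
print(is_priority_broadband_project(project_a))  # True
print(is_priority_broadband_project(project_b))  # False
```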

The Notice adopts new scoring criteria that heavily prioritize proposals with the lowest overall cost to the program. This may enable selection of a proposal that is not necessarily the lowest-cost option for an individual broadband service location but is part of the combination of selected locations with the lowest overall cost to the program. When comparing competing proposals, the Notice directs states and territories to assess the total BEAD funding that will be required to complete the project (i.e., the total project cost minus the applicant’s proposed match) and the cost to the program per location (i.e., the total BEAD funding that will be required to complete the project divided by the number of locations the project will serve).
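Both comparison metrics are simple arithmetic. Below is a minimal Python sketch with hypothetical figures in whole dollars; the function names are illustrative, not NTIA’s.

```python
def bead_outlay(total_project_cost: int, applicant_match: int) -> int:
    """Total BEAD funding required: project cost minus the applicant's match."""
    return total_project_cost - applicant_match


def cost_per_location(total_project_cost: int, applicant_match: int, locations: int) -> float:
    """BEAD funding required divided by the number of locations served."""
    return bead_outlay(total_project_cost, applicant_match) / locations


# Hypothetical project: $10M total cost, $2.5M match, 500 locations.
print(bead_outlay(10_000_000, 2_500_000))             # 7500000
print(cost_per_location(10_000_000, 2_500_000, 500))  # 15000.0
```

Note that a larger match lowers both metrics, so under this scoring an applicant can improve its position without changing the network it proposes to build.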

If competing applications to serve the same general project area propose a project cost within 15% of the lowest-cost proposal, the state or territory must evaluate such competing applications based on: (1) speed to deployment; (2) speed of network and performance capabilities; and (3) whether the entities were previously provisionally selected by the state or territory in an earlier selection round.
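The 15% window determines whether anything other than cost is even considered. Below is a minimal Python sketch, assuming the comparison uses each applicant’s required BEAD funding for the same project area and that a bid exactly 15% above the lowest still counts as “within” the window. Applicant names and amounts are hypothetical.

```python
def tiebreak_pool(bead_outlays: dict[str, int]) -> list[str]:
    """Applicants whose BEAD outlay is within 15% of the lowest-cost proposal.

    Uses integer arithmetic (cost * 100 <= lowest * 115) to avoid
    floating-point rounding right at the boundary.
    """
    lowest = min(bead_outlays.values())
    return sorted(
        name for name, cost in bead_outlays.items() if cost * 100 <= lowest * 115
    )


bids = {"Applicant A": 1_000_000, "Applicant B": 1_150_000, "Applicant C": 1_200_000}
print(tiebreak_pool(bids))  # ['Applicant A', 'Applicant B']
```

If only the lowest bidder lands in the pool, the speed-to-deployment, performance, and prior-selection factors never come into play.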

Challenge Process

The Notice does not explicitly require states and territories to re-run their location eligibility challenge process. States and territories are, however, required to implement the following measures:

Investigate and account for locations that are not eligible for BEAD funding because: (1) the locations are shown as served under the latest version of the FCC Broadband Data Collection map; (2) the locations will be served by an enforceable commitment; or (3) the locations are already served by a privately funded network.

Modify BEAD-eligible location lists to include locations no longer served due to a default or change in service area on a federal enforceable commitment.

Investigate and modify BEAD-eligible location lists that are found to be served by ULFW.

NTIA’s downplaying of the challenge process notwithstanding, the overlay of existing ULFW service, coupled with ongoing fiber deployment that has occurred over the past 18 months or so (and continues), strongly suggests that maps and data relied upon by the states and territories to create their lists of eligible locations do not reflect the current reality, let alone the reality that will exist at the time the BEAD funding is actually awarded. A significant “true-up” would seemingly be needed to reconcile the data.

Elimination of Prior Compliance Requirements

The Notice eliminates several non-statutory compliance requirements instituted by the Biden-era NTIA, including those relating to:

Labor and workforce development

Climate change

Open access/network neutrality

Local coordination and stakeholder engagement

Preference for non-traditional providers

Regulation of low-cost plans

While the Notice eliminates these requirements, applicants remain subject to applicable federal laws related to all of the above but may demonstrate their compliance through a certification.

Similarly, while subrecipients still must offer at least one low-cost broadband service option (a requirement under the BEAD statute), the Notice removes NTIA’s previous requirement that states and territories define the parameters of such plans.

Up next in the BEAD Notice series: “Playing Defense Under the New BEAD.”

Privacy Awareness Week 2025

In Australia, last week was the 2025 Privacy Awareness Week (PAW), with this year’s theme ‘Privacy – it’s everyone’s business’. Among other things, for PAW the Office of the Australian Information Commissioner (OAIC) produced a Privacy Foundations self-assessment tool, which provides a privacy maturity score on the basis of tenets such as Accountability, Transparency, Collection and Data breach management. The tool, and PAW more broadly, emphasise that privacy is not just about compliance, but about good business and building trust. NSW, Vic and QLD state governments have each run parallel PAW events.

The PAW theme is especially fitting considering recent Australian privacy strides, including the new tort for serious invasions of privacy which came into effect on 10 June 2025. Under this new tort, a claim arises where a person invades an individual’s privacy by intruding on their seclusion or misusing their information.

Other notable developments include Tranche 1 of the Privacy Act reforms, enacted late last year, and the new obligation to disclose ransomware payments which we highlighted earlier this month. One of the less visible changes in the Privacy Act reforms is the requirement to describe automated decision making processes in entities’ privacy policies, which will come into effect in December 2026.

At the same time, increasingly sophisticated technologies such as AI models and tools promise entirely new, automated ways of collecting, analysing and presenting data for your business and interacting with your customers. As this year’s PAW draws to a close, it reminds us all of the ongoing need to keep your business’s policies and practices updated to enable and support the things you may want to do with the information you collect, so that your business is best placed not only to protect that information but also to harness the technology the future may bring.

Emre Cakmakcioglu also contributed to this article.

Playing Defense Under the New BEAD

Under the BEAD Restructuring Policy Notice issued by NTIA on June 6 (“Policy Notice”),[1] state and territory broadband offices must rescind all preliminary and provisional BEAD awards made under the prior rules and must, in very short order, run a single competitive round with a strong preference for providers that promise to provide 100/20 Mbps service for the least amount of BEAD funding support.

Crucially, the Policy Notice puts LEO satellite and unlicensed fixed wireless (ULFW) on the same footing as end-to-end fiber projects. Under the prior rules, only end-to-end fiber projects qualified as “Priority Broadband Projects,” meaning fiber projects would receive support to serve a given area even if another “Reliable Broadband Service” technology (such as cable modem or licensed fixed wireless) might do so less expensively. Under the prior rules, LEO and ULFW service was not even considered “Reliable Broadband Service,” and BEAD funding for such “Alternative Technologies” was only available if the cost to deploy Reliable Broadband Service to a given location exceeded a certain threshold.[2]

The new Policy Notice turns that approach upside down. Now, all technologies that can provide 100/20 Mbps service with sub-100ms latency and that are ostensibly capable of scaling to meet future needs are considered “Priority Broadband Service,” including LEO and ULFW.

At the same time, the Policy Notice requires state broadband offices to “choose the option with the lowest cost based on minimal BEAD program outlay.” Only if an applicant submits a proposal within 15% of the lowest-cost proposal may a state broadband office consider the speed of the network and other factors (e.g., that a future-proof FTTP network that will provide 1Gbps+ with sub-20ms latency is a better long-term infrastructure investment than a ~100/20 Mbps LEO service with ~100ms latency).

To put it mildly, these changes put prior BEAD applicants in a defensive position, especially those that proposed end-to-end fiber projects. Fiber providers that already submitted BEAD applications must now compete against LEO and ULFW service solely on cost. It is essentially a one-round reverse auction, with unequal technologies being treated the same.

The expansion of funding for ULFW also means that locations designated as “served” by ULFW in the FCC National Broadband Map may be removed from eligibility for BEAD funding, but removal is not automatic. Under the process outlined in the Policy Notice, a ULFW provider may protect its existing service territory from BEAD funding by submitting evidence that its current service meets the technical requirements of “Priority Broadband Service.”[3] (Alternatively, the ULFW provider could opt not to submit such evidence and instead compete to obtain BEAD support to upgrade its network.) The Policy Notice does not specifically provide for third-party comment or intervention in this process, and it is not clear that state broadband offices would have enough time to entertain contrary evidence. Most broadband offices have yet to issue substantive guidance following the Policy Notice, and we simply do not know at this point: some might allow it, while others might not.

The Policy Notice is conspicuously silent on the issue of locations currently able to be served by LEO service that qualifies as “Priority Broadband Service,” if any. Logically, any such locations should be removed from BEAD eligibility, but the Policy Notice does not address it. At the same time, it is important to note that any LEO Capacity Subgrant does not require qualifying service immediately: a LEO recipient is only obligated to commence provision of qualifying broadband service within four years from the date of the subgrant.

Prior BEAD applicants (and ULFW and LEO providers) are not the only stakeholders under the new BEAD rules. Any entity that has continued to deploy infrastructure and services while the BEAD process has dragged on should be mindful of the potential for new BEAD-supported competition. Many months have elapsed since the close of state challenge processes, and many providers have continued deploying fiber optic networks and other Priority Broadband Projects in the meantime. If maps of BEAD-eligible locations are not updated before funding decisions are made, BEAD funding will end up supporting significant overbuilding of existing networks.

So, the new BEAD rules will require many to play defense, including: (1) prior BEAD applicants that wish to resubmit, (2) prior BEAD applicants that do not plan to resubmit, but that wish to protect expansion areas from BEAD-supported overbuilds, and (3) non-BEAD applicants whose expanded networks are not accurately reflected in funding maps.

To summarize:

Prior BEAD Applicants That Wish to Resubmit:

The provider may need to aggressively adjust its BEAD proposal budget.

The provider may or may not end up competing against a LEO or ULFW application. For ULFW, check the National Broadband Map for ULFW code 70. For insight on LEO bids, see the recent post from the Benton Institute for Broadband & Society, “What Do We Know About LEO BEAD Bids.”

If the provider is planning to deploy fiber in the area anyway, and can commit to do so, it may be especially feasible to compete against ULFW or LEO service. As an extreme example, a $1 “bid” would presumably win the area and protect it from BEAD-supported overbuilding (but would also obligate the provider to comply with various BEAD program rules going forward).

If the National Broadband Map shows that there is ULFW coverage (code 70) in the proposal footprint:

The provider may wish to explore whether it can rebut any claim that the ULFW provider’s current service meets the Appendix A technical qualifications (see above).

If the ULFW provider does not submit a claim and supporting evidence to the state broadband office (under the process outlined in note 3), it is fair to assume the ULFW provider will seek BEAD funding. In that case, a competing applicant might (1) compete aggressively on BEAD cost, (2) seek to partner with the ULFW provider somehow, or (3) propose its own ULFW solution.

Prior BEAD (Fiber) Applicants That Do Not Plan to Resubmit:

Assuming BEAD support was sought for expansion of current service area, consider the implications of BEAD-supported competition in adjacent territory.

Can the provider partner with a ULFW provider to obtain targeted BEAD support in the near term, and potentially migrate to fiber?

Can the provider support a “planned service” challenge to BEAD location eligibility, and more importantly, will the state broadband office entertain it (unlikely, absent a new challenge round)?

If the provider intends to serve the area in the near future anyway, and can commit to doing so, it may be worth considering whether to submit an application for nominal support.

Providers That Have Significantly Expanded Networks Over the Past 18 Months:

Post-challenge BEAD funding maps may not accurately reflect the current state of BEAD-eligible locations.

It is unclear whether state broadband offices will have the time or inclination to enable a new “true-up” process to avoid BEAD-funded overbuilds.

Providers should closely watch announcements from their state broadband office in the coming days, and may wish to reach out to the office directly to advocate for an update to BEAD funding maps.

As a final comment, please note that additional clarity may emerge with respect to some of the above issues as state broadband offices begin issuing substantive guidance reflecting the dictates of the Policy Notice. Note also that there is a reasonable likelihood of litigation challenging certain aspects of the Policy Notice, with unknown consequences.

[1] For a general overview of the Policy Notice, please refer to our earlier blog post, “Commerce Department’s New BEAD Reform Notice Upends Structure of Program,” June 16, 2025.

[2] NTIA Policy Notice, June 26, 2024.

[3] The Policy Notice requires state broadband offices to undertake a process to “ensure that locations already served by an ULFW service that meets the technical specifications within Appendix A [of the Policy Notice]” are not eligible for BEAD funding. First, the state broadband office must review the National Broadband Map to determine whether any ULFW-served locations overlap with any previous BEAD-eligible locations. If so, the broadband office must notify the ULFW provider that it has seven days to respond that it intends to claim that BEAD funding is not required. After doing so, the ULFW provider has seven additional days to submit evidence substantiating the claim. For example, the Ohio broadband office (“BroadbandOhio”) recently published a notification stating that ULFW providers have until June 20 to submit a claim that their service area meets technical specifications for BEAD performance (as documented in the Policy Notice) and that BEAD funding is not required for the locations. After doing so, ULFW providers have only 7 days – until June 27 – to submit evidence supporting the claim.
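The two back-to-back seven-day windows leave very little room. Below is a minimal Python sketch of the deadline arithmetic, assuming each window runs in calendar days from the prior deadline (consistent with the Ohio dates). The June 13 notice date is hypothetical, back-calculated from Ohio’s June 20 claim deadline, and individual states may count days differently.

```python
from datetime import date, timedelta

WINDOW = timedelta(days=7)  # assumption: calendar days, not business days


def ulfw_deadlines(notice_date: date) -> tuple[date, date]:
    """Return (claim deadline, evidence deadline) for a notified ULFW provider."""
    claim_deadline = notice_date + WINDOW
    evidence_deadline = claim_deadline + WINDOW
    return claim_deadline, evidence_deadline


# Hypothetical notice date, back-calculated from the Ohio example.
claim, evidence = ulfw_deadlines(date(2025, 6, 13))
print(claim, evidence)  # 2025-06-20 2025-06-27
```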

Privacy Tip #447 – Understanding Cybersquatting

We are seeing an increase in cybersquatting incidents. What is cybersquatting and how can it affect you?

According to Sentinel One, cybersquatting, or domain squatting, “involves the registration, selling, or use of an Internet domain name in bad faith to profit from the goodwill of a trademark that belongs to someone else.” Cybersquatters spoof real brands to lure consumers to a fraudulent domain, where they may pay for goods or services that are counterfeit or never delivered. Cybersquatters may also obtain advertising revenue through pay-per-click advertising, or collect users’ information to make fraudulent purchases.

Cybersquatting damages companies because it confuses consumers, who may be unable to identify a brand’s real website. If a company is the victim of cybersquatting, consumers may refuse to purchase items online because they are unsure of the correct website. In addition, cybersquatting directs traffic to false sites; when consumers purchase something from these sites or provide their credentials to the threat actor, they suffer a loss and may no longer trust the brand, causing financial harm to the brand.

In addition, cybersquatting affects the reputation of a company when it is associated with fraudulent sites.

There are different types of cybersquatting, including domain warehousing, where cybersquatters register expired domain names that have become available and then try to sell them back to the legitimate business. “Businesses should stay alert in renewing their domain registration to avoid such risks.”

Typo cybersquatting is when cybersquatters register misspelled versions of brand names to lure consumers to the fake domain and launch phishing or malware campaigns.

Name jacking is when the names of celebrities or public figures are registered without the individual’s permission. The name jacker will use that individual’s name to impersonate them to make a profit or attempt to sell the domain at a high price.

Identity theft cybersquatting is when a cybersquatter registers a domain that is a close variation of the real company’s domain (such as the name with a transposed letter or additional words attached to the company’s name) in an attempt to get consumers to click on the wrong domain and provide personal information or credentials, which the cybersquatter then uses to steal their identity or their financial information.
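A brand owner can get ahead of typo and identity theft cybersquatting by listing the look-alike domains an attacker is most likely to register and watching for them. The Python sketch below is our own illustration, not a Sentinel One method: it generates variants of a hypothetical `acme.com` using the transposed-letter and extra-word patterns described above, plus dropped letters. It assumes a single-label brand name (it splits at the first dot), and the extra-word list is hypothetical. Dedicated monitoring services typically cover many more patterns, such as look-alike characters and alternate top-level domains.

```python
def typo_variants(domain: str) -> set[str]:
    """Return simple look-alike variants of a domain for monitoring.

    Covers three patterns: adjacent letters transposed, a letter dropped,
    and extra words attached to the brand name.
    """
    name, _, tld = domain.partition(".")  # "acme.com" -> ("acme", ".", "com")
    candidates: set[str] = set()

    # Transposed adjacent letters, e.g. "acme" -> "amce"
    for i in range(len(name) - 1):
        candidates.add(name[:i] + name[i + 1] + name[i] + name[i + 2:])

    # Dropped letters, e.g. "acme" -> "acm"
    for i in range(len(name)):
        candidates.add(name[:i] + name[i + 1:])

    # Extra words attached to the brand (hypothetical word list)
    for word in ("login", "support", "shop"):
        candidates.add(f"{name}{word}")
        candidates.add(f"{name}-{word}")

    candidates.discard(name)  # swapping identical letters reproduces the original
    candidates.discard("")    # dropping the only letter of a one-letter name
    return {f"{c}.{tld}" for c in candidates}


for variant in sorted(typo_variants("acme.com")):
    print(variant)
```

The resulting list could be checked against new domain registrations or handed to a domain monitoring company, in line with the monitoring step Sentinel One recommends.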

According to Sentinel One, there are several steps that can be taken in the event of a cybersquatting incident:

Seek the services of legal experts to take legal action

File a complaint under the Uniform Domain-Name Dispute-Resolution Policy (UDRP)

Send a cease-and-desist letter to the cybersquatter

Buy the domain

Use a domain monitoring company to track domain registrations and take down false domains

Educate consumers

Cybersquatting incidents increased in 2024 and are expected to increase even more in 2025. It is crucial to understand how cybersquatters can damage your company so you can prepare for these attacks and reduce the risk that your organization becomes a victim.

California’s SB 690: A Game-Changer for Website Privacy Lawsuits Pushes Forward

On June 3, 2025, the California Senate unanimously passed Senate Bill 690 (SB 690) in a 35-0 vote, a strong show of support for reining in a flood of lawsuits that have taken many companies by surprise over the last few years. The bill now heads to the California Assembly, where it will face further scrutiny. If ultimately signed into law, SB 690 could reshape how privacy law is enforced in the digital space, offering much-needed clarity (and relief) for businesses across the country.

What is SB 690 All About?

SB 690 is aimed squarely at modernizing how California’s Invasion of Privacy Act (CIPA) is applied in today’s online economy. Originally passed in 1967 to address concerns around wiretapping and old-school eavesdropping, CIPA has recently been used in a very different context: against websites and the online tools they use to track users’ behavior on a site.

In the last few years, plaintiffs’ lawyers have filed a wave of lawsuits alleging that everyday digital tools, such as cookies, chatbots, and analytics software, violate CIPA. Some lawsuits even treat these technologies as illegal surveillance devices under the law. The result? A growing number of businesses, from major retailers to small e-commerce shops, have been hit with expensive and time-consuming legal threats.

Many of these cases are class actions, often pushing businesses to settle rather than fight it out in court. Critics have called this a form of legal “gotcha”: using a decades-old law to penalize routine online practices that are otherwise regulated by California’s more recent privacy laws.

What SB 690 Would Change

SB 690 seeks to fix this problem by updating CIPA to reflect how digital communication and data collection actually work today. The bill would:

Exempt businesses from CIPA liability when they record or intercept online communications for a “commercial business purpose.”

Clarify that tools like session replay software, chat logs, or web tracking pixels are not “wiretaps” or surveillance devices when used in standard business operations.

Prevent private lawsuits (i.e., lawsuits brought by consumers, not the government) related to these practices, as long as the company is using the data for a valid business reason.

Importantly, SB 690 defines “commercial business purpose” by borrowing language from California’s existing privacy laws—the California Consumer Privacy Act (CCPA) and California Privacy Rights Act (CPRA). That means businesses following those laws’ rules around data collection, marketing, analytics, and consumer opt-outs would be protected under SB 690.

What About Current Lawsuits?

Originally, SB 690 was written to apply retroactively, which would have wiped out many of the CIPA lawsuits already in progress. But that retroactive provision faced resistance and was removed just before the Senate vote. As it stands now, the bill would only apply to future cases, so businesses already facing CIPA lawsuits won’t receive immediate relief, and websites that track users before obtaining consent (particularly in California) could still face these demands and lawsuits in the meantime.

Why This Matters

Supporters of SB 690 argue that it restores legal balance. California already has some of the toughest privacy laws in the country. The CCPA and CPRA give consumers strong rights, including the ability to opt out of having their data sold or shared. But CIPA, which wasn’t designed for the internet era, has created an overlapping (and often conflicting) patchwork of rules.

The business community has been calling for reform, arguing that the CIPA lawsuits are stifling innovation and creating a “tax” on companies just for using standard tools to understand their customers or secure their platforms.

What’s Next?

SB 690 now moves to the California Assembly, where it will go through committee hearings and floor votes. If it passes the Assembly without changes, it will head to Governor Newsom’s desk. If amended, it may need to go back to the Senate for a final vote.

If SB 690 becomes law, businesses that use standard online tools for legitimate purposes (marketing, security, customer service, etc.) and comply with CCPA/CPRA rules will be far less likely to face CIPA lawsuits. That could mean fewer legal headaches, fewer settlements, and more certainty in how businesses can operate online.

But until the bill is fully enacted, the current legal risks under CIPA remain, so businesses should stay alert and ensure compliance with existing privacy laws. The battle over online privacy enforcement in California is far from over, but SB 690 could mark a turning point.

Why Dumping Sensitive Data on Network Shares is a Liability

Are you storing sensitive data on a shared network drive? If so, your organization could be at serious risk of a data breach or privacy lawsuit. Shared drives, like the common “S: drive,” are often used to store documents, spreadsheets, customer information, financial records, and even scanned IDs. But here’s the problem: these network shares are rarely encrypted, lack clear data governance policies, and are accessible to dozens—or even hundreds—of employees across different departments. Without proper oversight, unsecured network drives become a data security nightmare.

Don’t let poor information governance put your business at risk; take the time to learn why securing sensitive data on shared drives is critical for avoiding data breaches, maintaining compliance with privacy laws, and safeguarding your company’s reputation.

In today’s environment of rapidly expanding state consumer privacy laws and data breach notification statutes, companies that fail to control where sensitive data lives are sitting on serious legal and reputational risk. Here’s what you need to know, and why unsecured network shares are no longer just an IT headache but a legal liability.

The Rise of State Privacy Laws: More Than Just California

Most people know about the California Consumer Privacy Act/California Privacy Rights Act, but it’s far from alone. As of 2025, over a dozen states have passed their own consumer privacy laws, including Colorado, Connecticut, Utah, Virginia, Texas, Florida, Oregon, and others. Here’s what these state privacy laws typically grant consumers:

The right to know what personal data companies collect.

The right to access or delete their personal data.

The right to opt-out of data sales or targeted advertising.

The expectation that their data will be reasonably protected.

“Reasonably protected” sounds vague, but it’s increasingly being interpreted to mean basic security practices—like encryption, access controls, and data governance. Storing Social Security numbers or financial info in an unprotected shared drive with no audit trail? That’s not going to fly.

Data Breach Notification Laws: 50 States, 50 Triggers

Every U.S. state has its own data breach notification law, and many have recently updated them. These laws require businesses to notify affected consumers—and sometimes regulators—when certain types of personal information are accessed or acquired without authorization.

The trigger? Often, it’s exposure of unencrypted data such as:

Social Security numbers;

Driver’s license numbers;

Financial account or credit card numbers; and

Health records.

Why Network Shares are High-Risk

If that data lives on an unsecured network share, accessible by anyone on the network—or worse, breached by an outsider—you may have a legal duty to notify, and fast.

Shared network drives are a leftover from a simpler time. They often:

Lack encryption, either at rest or in transit.

Have overly broad access (e.g., “Everyone in Finance” means everyone).

Are unmanaged—no one monitors what’s stored, for how long, or by whom.

Become digital junk drawers: you name it, someone’s dumped it there.

In short, they’re a soft target for internal mishandling or external breaches.

Even if no breach has occurred yet, regulators may still view careless storage as a failure to implement reasonable security measures, something required by many state laws (and by the FTC, which enforces Section 5 of the FTC Act against unfair or deceptive practices).

Real World Risk: Enforcement and Lawsuits

Let’s connect the dots:

A former employee downloads a folder full of unencrypted spreadsheets with customer data from a shared drive and walks out the door.

A ransomware attacker gains access to your network and hits a file share containing years’ worth of sensitive HR or payroll data.

A privacy audit reveals that your network share is a free-for-all and your company never implemented access logs or retention policies.

In each case, you’re potentially looking at:

Mandatory breach notifications;

Fines from state attorneys general;

Consumer lawsuits, including class actions; and

Reputational damage, especially if the exposure goes public.

What You Can Do Now

The good news? Much of this risk is preventable. Here are some practical steps:

Encrypt sensitive data at rest and in transit. Don’t assume your internal network is a safe zone.

Limit access based on role or need-to-know. Broad group permissions are a red flag.

Inventory your data. You can’t protect what you don’t know you have.

Establish a governance policy. Set clear rules about what data can be stored, where, and for how long.

Clean up legacy shares. Archive or securely delete outdated files, especially ones with sensitive info.

Train employees. They need to know that dumping sensitive info into a shared folder is no longer acceptable.
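The inventory and access steps are the easiest to start automating. The Python sketch below is a hypothetical starting point, not a compliance tool: it walks a share mounted on a POSIX system, flags plain-text files containing strings that look like Social Security or payment card numbers (two of the breach notification triggers listed earlier), and reports whether each flagged file is readable by every user. The patterns, the file-type list, and the reliance on POSIX permission bits are all assumptions. Windows file shares control access through ACLs that these bits do not reflect, and formats such as spreadsheets or scanned PDFs would need other tooling.

```python
import re
import stat
from pathlib import Path

# Hypothetical first-pass detectors. Simple regexes like these produce false
# positives and miss data in formats they don't cover.
PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){12,15}\d\b"),  # 13-16 digits
}
TEXT_SUFFIXES = {".txt", ".csv", ".log"}  # assumption: plain-text files only


def scan_share(root: str) -> list[dict]:
    """Walk a mounted share and report files that appear to hold sensitive data."""
    findings = []
    for path in Path(root).rglob("*"):
        if not path.is_file() or path.suffix.lower() not in TEXT_SUFFIXES:
            continue
        try:
            text = path.read_text(errors="ignore")
            mode = path.stat().st_mode
        except OSError:
            continue  # unreadable file: skip it rather than abort the inventory
        matches = sorted(label for label, rx in PATTERNS.items() if rx.search(text))
        if matches:
            findings.append({
                "path": str(path),
                "matches": matches,
                # POSIX "other" read bit: any local user can read the file
                "world_readable": bool(mode & stat.S_IROTH),
            })
    return findings
```

Calling `scan_share("/mnt/s_drive")` on a hypothetical mount point returns one dictionary per flagged file. Those results can seed the data inventory, and any `world_readable` hits are candidates for tighter, role-based permissions.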

State privacy laws are becoming more aggressive, and regulators are increasingly focused on where and how companies store consumer data, not just how they use it. An old network share with no encryption, no oversight, and no purpose may seem like a low-priority compliance issue, but it’s exactly the kind of vulnerability that can turn into a legal firestorm.

If your organization hasn’t taken a hard look at its shared storage practices lately, now is the time. Because in the age of modern data privacy laws, “we didn’t know it was there” is no longer a defense.

CISOs: Take a Look at CSC’s CISO Outlook 2025 Report

Cybersecurity firm CSC recently issued its CISO Outlook 2025 Report, which predicts the cybersecurity challenges CISOs will face in the next year. The report, based on a survey of 300 CISOs and cybersecurity professionals globally, finds that CISOs “predict the cybersecurity challenges they face will intensify and the growth of artificial intelligence (AI) is increasing the potency of some domain-related threats.”

Some of the report’s key findings:

70% of CISOs believe security threats will increase in the year ahead

98% believe risks will rise over the next three years

66% expect cyber risks to be ‘significant’

76% say they’re only ‘somewhat confident’ in their ability to mitigate domain attacks

Regulatory compliance will continue to be a challenge

AI-powered attacks are increasing threat levels

These insights for the next year are based on experiences in 2024, with the top three cited risks being cybersquatting, domain and domain name system hijacking, and DDoS attacks. The top three cited risks expected in the next three years were cybersquatting, domain and DNS hijacking, and ransomware and malware.

The CISOs confirmed that cyber threats are evolving and becoming more complex, which makes them increasingly difficult to combat. Some tips to consider include:

Establish an AI Governance Program

Focus on Shadow AI

Prepare for increased security audits

Consider strategic outsourcing

The bottom line is that protecting organizations will continue to be complex and difficult. Staying on top of new threats and risks will serve CISOs well in 2025.

VPPA Class Action Plaintiffs May Not Waive Arbitration Goodbye

On June 13, 2025, a federal court in the Northern District of California held that a putative Video Privacy Protection Act (VPPA) class action lawsuit belonged in arbitration, thanks to the defendant company’s arbitration clause.

If you’ve been following our blog, you’ve seen the rise in VPPA class action litigation against companies that provide video streaming content and use tracking technologies, such as the Meta Pixel. In this case, too, plaintiffs claimed that media distributors DirectToU LLC and Alliance Entertainment LLC (collectively, the Defendants) shared consumers’ personal data with Meta through the use of the Meta Pixel on their websites.

In response to the complaint, the Defendants filed a motion to compel arbitration, asserting that, since 2021, all website users were required to agree to the Terms of Use by checking a box next to the statement: “I acknowledge that I have read and agree to the Terms of Use” before making any DVD, Blu-ray, or videogame purchase on the websites. The phrase “Terms of Use” was hyperlinked to the full Terms of Use, which included an arbitration clause.

The Federal Arbitration Act (FAA) governs arbitrations. Under the FAA, a district court may find that a party has waived its right to compel arbitration if the opposing party can show that the party seeking arbitration: (1) knew of its right to arbitrate; and (2) acted inconsistently with that right.

Here, plaintiffs argued that the Defendants had acted inconsistently with any existing right to arbitrate, thereby waiving it, because they had engaged in discovery and settlement negotiations. The court considered multiple factors holistically to assess whether the Defendants’ actions “indicate[d] a conscious decision… to seek judicial judgment on the merits of the arbitrable claims, which would be inconsistent with a right to arbitrate.” The court acknowledged that the case was a “close call,” particularly because the Defendants had engaged in settlement negotiations and reached a settlement. However, because the Defendants had not yet litigated the merits of the case at the time of that settlement, the court held that the Defendants had not waived their right to arbitration.

The court also noted that the proposed settlement related to an earlier version of plaintiffs’ complaint, not the current amended version. The court stated, “It is misaligned with the caselaw to allow the party arguing for waiver of arbitration to make significant amendments to its complaint and not allow the party seeking arbitration the possibility of reviving its right to compel arbitration. This is especially true where, as here, that party has not engaged in any steps to determine the merits of the case.”

In today’s landscape of increasing VPPA litigation, companies should carefully consider how well-drafted arbitration clauses can help minimize class action risk. Arbitration clauses are not a cure-all, as this case demonstrates. Companies should focus on crafting valid, enforceable arbitration provisions and, just as importantly, exercise caution in litigation strategy to avoid inadvertently waving farewell to otherwise robust arbitration rights.

Blockchain+ Bi-Weekly; Highlights of the Last Two Weeks in Web3 Law: June 20, 2025

It was a busy two weeks in Congress, as key pieces of digital asset legislation moved forward in both the House and Senate. While the stablecoin bill in the Senate looks like it may pass quickly, the overarching market structure bill in the House has been hotly debated and appears to lack bipartisan consensus. In other news, various crypto companies are looking to go public after a major stablecoin issuer recently went public with great success, and the SEC is clearing the way for expected formal rulemaking on the application of securities laws to digital assets.

These developments and a few other brief notes are discussed below.

GENIUS Act Vote in Senate: June 11, 2025

Background: In the Senate, there was a 68-30 vote to invoke cloture on the GENIUS Act, setting the stablecoin bill up for final passage this week. President Trump has put out a statement saying he would sign the bill into law in its current form if it reaches his desk. It is expected that by the time this Bi-Weekly update is published, the GENIUS Act will have passed the Senate, but the bill will still need to go to the House, and then back to the Senate if the House makes any changes, before it can reach the President’s desk. The current House stablecoin legislation differs from the GENIUS Act in various ways, including how issuers are regulated at the state and federal levels and how foreign issuers are regulated.

Analysis: The end-of-week vote to invoke cloture was a move by Senate Majority Leader Thune to end the effort to pass the bill via “regular order,” which opens floor proceedings to the submission and debate of various amendment proposals. This means the bill is now moving forward with only the changes negotiated with Democrats that led 16 Democrats to support the GENIUS Act in a procedural vote on the Senate floor last month. The list of Senators who voted in favor of cloture is worth monitoring; Senate Minority Leader Schumer voted against. The cloture vote came the same week that Treasury Secretary Bessent testified to the Senate Appropriations Committee that the Treasury Department estimates the U.S. dollar-denominated stablecoin market will grow to $2 trillion by the end of 2028.

House Financial Services and Agriculture Committees Mark Up CLARITY Act: June 10, 2025

Background: The House Financial Services and Agriculture committees held separate hearings to mark up the CLARITY Act, with the Financial Services committee focused on the SEC-related elements while the Agriculture committee worked through the CFTC-related provisions. The biggest change was the addition of protections for crypto developers, wallet makers, and infrastructure providers (previously a separate bill dubbed the Blockchain Regulatory Certainty Act, introduced by Representatives Emmer and Torres). The bill passed through the Agriculture committee on an overwhelming 47-6 vote. The vote in the Financial Services committee was a closer 32-19.

Analysis: The Agriculture committee’s overwhelmingly bipartisan vote came right around the start of the Financial Services committee markup, and this fact was harped on regularly by bill proponents as a reflection of bipartisan bill support. The Financial Services markup process was choppier, going well into the night with roughly 40 amendments offered without any expectation of being approved. The current draft would give the CFTC spot market authority over most digital assets, but there is seemingly a push by opponents to give the SEC more power in this area.

House Financial Services Committee Holds Crypto Hearing: June 4, 2025

Background: The House Financial Services Committee held a hearing entitled American Innovation and the Future of Digital Assets: From Blueprint to a Functional Framework to discuss issues related to digital asset regulation. Witnesses included the Chief Legal Officer for Uniswap Labs, Katherine Minarik, and former CFTC Chair Rostin Behnam. Proponents of passing digital asset legislation aimed at encouraging its development in the United States emphasized in the hearing the need for legislative certainty to protect consumers and ensure companies are not leaving the United States to pursue building products and services with blockchain technologies. Opponents cited concerns with the President’s conflicts of interest and argued digital assets should change to meet existing laws rather than making new laws for digital assets.

Analysis: This was just a warmup to the CLARITY Act markup. The hearing started with Ranking Member Waters stating, in reference to the CLARITY Act, “the only thing clear about this bill is we need to start over.” Republicans also made a surprise appearance at the minority day hearing, which typically only minority party members attend. The House Agriculture Committee also held a digital asset hearing, but it was less dramatic. There is still much to be done in the regulatory environment, and further changes can be expected, including on whether the provisions some have dubbed the “DeFi Purity Test” make it into the final bill.

Briefly Noted:

401(k) Updates: Our last Bi-Weekly update highlighted recent changes from the Department of Labor related to the inclusion of crypto in 401(k) plans. Our employment law colleagues here at Polsinelli wrote a more detailed update on this and how it affects plan managers, which is worth reading.

Joint Statement on Validator and Developer Protections: The largest advocacy organizations in the digital asset industry put out a joint statement urging that the Blockchain Regulatory Certainty Act (a bipartisan bill introduced by Representatives Emmer and Torres) be added to the CLARITY Act. It appears to have worked, as the protections were added to the new bill language, so good work all around on this.

SEC Roundtable on DeFi: The SEC roundtable discussion on the agency’s potential role in decentralized finance is worth going back and watching if you did not catch it live. The intro from Chair Atkins was great, as were the additions from Michael Mosier on privacy and data communications systems.

CFTC Chair Nomination Hearing: Brian Quintenz had his confirmation hearing on June 10. It is widely expected he will be confirmed, but the fact that he will likely be the sole CFTC Commissioner shortly after confirmation (if he is confirmed) is an interesting wrinkle.

Samourai Motion to Dismiss: The developers behind the bitcoin privacy tool Samourai Wallet moved last week to dismiss the DOJ’s charges related to unlicensed money transmission. As the motion puts it, “[The DOJ’s legal theory is] akin to charging an encrypted messaging app developer with conspiracy because it may know that some customers use the app to communicate about financial crimes. Or charging a burner phone manufacturer because it may know some customers use the phones to facilitate drug crimes.” The DeFi Education Fund and the Blockchain Association also wrote an amicus brief advocating for dismissal (even though the judge took the rare step of denying requests for amicus submissions).

Crypto Company IPOs: Circle’s shares opened at $69.50 on the New York Stock Exchange after its IPO priced at $31. It joins Coinbase as one of the few publicly traded crypto companies. Gemini has also apparently filed confidentially for an IPO with the SEC, as has digital asset exchange Bullish. Other businesses in the space are also expected to explore going public in the near future.

SOL Spot ETF Filings: All the major players filed their S-1 prospectuses with the SEC to try to be in the first batch of SOL ETFs, which are widely expected to be approved. The big issue remains staking, which these vehicles need to be able to do to compete with buying SOL directly on the spot market.

SEC Withdraws Rule Proposals: The SEC has formally withdrawn most of the rule proposals issued under the prior administration, including several proposed rules that would have had significant implications for DeFi and crypto custody. It is rare to see rule proposals formally retracted rather than quietly fading into the background, so this signals an attempt to create a “clean slate” for the rule proposals expected under Chair Atkins.

Coinbase State of Crypto Report: Coinbase’s yearly State of Crypto research is out. The biggest findings are highlighted on the report’s cover, including that 60% of Fortune 500 executives surveyed said their companies are currently working on blockchain initiatives. Coinbase also hosted a livestream with various big names in crypto and policy walking through the results and plans for the upcoming year.

Conclusion:

As the first half of 2025 wraps up, the digital asset policy landscape is entering a critical phase. Stablecoin legislation appears poised for Senate passage, while the broader market structure bill continues to spark heated debate in the House. Meanwhile, key regulatory and enforcement developments—including the SEC’s rule withdrawals, the DOJ’s evolving theories on developer liability, and growing IPO activity—suggest a transitional moment for Web3 in the United States. With bipartisan momentum behind certain reforms and a growing chorus pushing for clarity, the next few months will be essential in shaping the legal infrastructure for blockchain and digital asset innovation.