Litigation Trend Alert: Breach of Contract and Warranty Claims Based on Privacy Policies

A recent series of articles by the International Association of Privacy Professionals discusses a trend in privacy litigation focused on breach of contract and breach of warranty claims.

Practical Takeaways

Courts are increasingly looking at website privacy policies, terms of use, privacy notices, and other statements from organizations and assessing breach of contract and warranty claims when individuals allege businesses failed to uphold their stated (or unstated) data protection promises (or obligations).

To avoid such claims, businesses should review their data privacy and security policies and public statements to ensure they accurately reflect their data protection practices, invest in robust security measures, and conduct regular audits to maintain compliance.

Privacy policies are no longer just formalities; they can become binding commitments. Courts are scrutinizing these communications to determine whether businesses are upholding their promises regarding data protection. Any discrepancies between stated policies and actual practices can lead to breach of contract claims. In some cases, similar obligations can be implied through behavior or other circumstances and create a contract.

There are several ways these types of claims arise. The following outlines the concepts that plaintiffs are asserting:

Breach of Express Contract: These claims arise when a plaintiff alleges a business failed to adhere to the specific terms outlined in its privacy policies. For example, a company might promise to “never” share user data with third parties but do so anyway.

Breach of Implied Contract: Even in the absence of explicit terms, businesses can face claims based on implied contracts. This occurs when there is an expectation of privacy and/or security based on the nature of the relationship between the business and its customers.

Breach of Express Warranty: Companies that make specific assurances about the security and confidentiality of user data can be held liable if they fail to meet these assurances.

Breach of Implied Warranty: These claims are based on the expectation that a company’s data protection measures will meet certain standards of quality and reliability.

How to avoid being a target:

Ensure Accuracy in Privacy Policies, Notices, Terms: Even if a business takes the steps described below and others to strengthen its data privacy and security safeguards, those efforts still may be insufficient to support strong statements concerning such safeguards made in policies, notices, and terms. Accordingly, businesses should carefully review and scrutinize their privacy policies, notices, terms, and conditions for collecting, processing, and safeguarding personal information. This effort should involve the drafters of those communications working with IT, legal, marketing, and other departments to ensure the communications are clear, accurate, and reflective of their actual data protection practices.

Assess Privacy and Security Expectations and Obligations: As noted above, breach of contract claims may not always arise from express contract terms. Businesses should be aware of circumstances that might suggest an agreement with customers concerning their personal information and then work to address the contours of that promise.

Strengthen Data Privacy and Security Protections: A business may be comfortable with its public privacy policies and notices and feel that it has satisfied implied obligations, but still face breach of contract or warranty claims. In that case, having a mature and documented data privacy and security program can go a long way toward strengthening the business’s defensible position. Such a program includes adopting comprehensive privacy and security practices and regularly updating them to address new threats. At a minimum, the program should comply with applicable regulatory obligations, as well as industry guidelines. The business should regularly review the program, its practices, and changes in service, along with publicly stated policies, notices, and customer agreements, to ensure that data protection measures align with stated policies.

Gutride Safier LLP Files Class Action Against Nivea Over Misleading “Natural” Skincare Claims

Nivea, one of the most recognizable names in skincare, is facing serious legal trouble over how it markets some of its most popular products. A newly filed class action lawsuit claims the company has been misleading shoppers by labeling certain lotions and […]

EPA Announces Plans to Revisit Certain PFAS Drinking Water Standards

Two weeks after U.S. Environmental Protection Agency (EPA) Administrator Zeldin outlined the agency’s PFAS plan in broad strokes, EPA provided more detail on how it intends to proceed with respect to drinking water standards for certain per- and polyfluoroalkyl substances (PFAS). On May 14, 2025, EPA announced that it will “keep the current National Primary Drinking Water Regulations (NPDWR) for perfluorooctanoic acid (PFOA) and perfluorooctane sulfonic acid (PFOS)” but intends “to rescind the regulations and reconsider the regulatory determinations for PFHxS, PFNA, HFPO-DA (commonly known as GenX), and the Hazard Index mixture of these three plus PFBS.” Under the Biden administration, EPA set the NPDWRs, or maximum contaminant levels (MCLs), at 4 parts per trillion (ppt) each for PFOA and PFOS, 10 ppt each for PFHxS, PFNA, and HFPO-DA, and a Hazard Index of 1 for a combination of two or more of PFHxS, PFNA, HFPO-DA, and PFBS. The PFAS NPDWR was the first time EPA used a Hazard Index as a regulatory standard for drinking water.

EPA also announced “its intent to extend compliance deadlines for PFOA and PFOS, establish a federal exemption framework, and initiate enhanced outreach to water systems, especially in rural and small communities, through EPA’s new PFAS OUTreach Initiative (PFAS OUT).” EPA’s new PFAS OUT program is targeted at public water utilities “known to need capital improvements to address PFAS in their systems.” EPA stated it plans to extend the current 2029 deadline to comply with the MCLs for PFOA and PFOS to 2031. EPA has not elaborated on what it means by “a federal exemption framework,” but the announcement referred to drinking water systems as “passive receivers,” a phrase that EPA and others have used in talking about potential exclusions from CERCLA’s liability scheme.

EPA plans to accomplish these regulatory changes by publishing a proposed rule this fall, with an expected final rule in spring 2026. It is unclear whether the proposed extension of the compliance date will be included in the proposed rule or will be a separate action by the agency. Stakeholders should watch for the proposed rule’s publication and submit comments during the public comment period.

In the meantime, litigation regarding the PFAS NPDWR remains pending in the D.C. Circuit. On May 12, 2025, the U.S. Department of Justice requested an additional 21 days to decide how to proceed.

More PFAS-related announcements are expected from EPA as the agency provides more detail regarding the items identified in its April 28, 2025 PFAS plan.

PFAS Reporting Rule Deadlines Extended – and More Changes to Come

The U.S. Environmental Protection Agency (EPA) published an interim final rule on May 13, 2025, extending the reporting deadlines for its per- and polyfluoroalkyl substances (PFAS) Reporting Rule, 40 C.F.R. Part 705, which mandates submission of data on PFAS. EPA also disclosed that it will soon publish a notice of proposed rulemaking to address other aspects of the rule. The new deadlines appear in amended 40 C.F.R. § 705.20. Most reports on PFAS as chemicals are now due by October 13, 2026. Reports on PFAS in imported articles by small manufacturers are now due by April 13, 2027.

Background

EPA finalized the PFAS Reporting Rule on October 11, 2023, under Section 8(a)(7) of the Toxic Substances Control Act (TSCA), as amended by Section 7351 of the National Defense Authorization Act for Fiscal Year 2020, Pub. L. 116-92. This rule obligates any entity that manufactured or imported PFAS from 2011 through 2022 to submit a one-time report to EPA regarding manufacturing, use, disposal, byproducts, worker exposures, and environmental and health effects of those PFAS. The initial deadline for most submissions was set for May 8, 2025, providing an 18-month reporting window from the rule’s effective date. For article importers qualifying as small manufacturers, the reporting deadline was November 10, 2025.

In September 2024, EPA extended the original reporting deadline by eight months, until January 11, 2026. Under the May 13, 2025 interim final rule, that deadline is extended by another nine months, requiring most manufacturers to submit their reports by October 13, 2026. Under the September 2024 rule, for article importers that are also small manufacturers, EPA extended the deadline to July 11, 2026. In the May 13 rule, that deadline is now April 13, 2027.

Analysis

This extension presumably gives EPA time to finalize its information-collection software. The Full-Year Continuing Appropriations and Extension Act, 2025, Public Law 119-4 (the Continuing Resolution signed by President Trump on March 15, 2025) increased EPA’s appropriation for environmental programs and management by $17 million over the appropriation for Fiscal Year 2024, to $3.195 billion. According to a House Appropriations Committee report, the purpose of the increase was “to modernize the Environmental Protection Agency’s IT system to more efficiently complete chemical reviews, as requested by the Administration.” The extra money will help EPA handle the expected influx of reporting under the PFAS reporting rule, 40 C.F.R. Part 705.

The extension also gives EPA time to decide whether to reopen other portions of the PFAS Reporting Rule for public comment. The interim rule comes after the April 28, 2025, PFAS action plan announcement in which EPA Administrator Lee Zeldin committed to implement Section 8(a)(7) in order “to smartly collect necessary information, as Congress envisioned and consistent with TSCA, without overburdening small businesses and article importers” (see B&D’s alert here). It also follows a May 2, 2025, Section 21 petition from a coalition of chemical manufacturers arguing that standard TSCA exemptions for articles and impurities, a production-volume threshold, and other scope limitations should apply. We expect the proposed rule to incorporate at least some of those requested provisions.

While the delay to the start of the submission period is effective immediately, EPA will accept public comments on the revised reporting timeframe for 30 days, with comments due by June 12, 2025. Beveridge & Diamond is actively monitoring developments in this area and is ready to assist interested stakeholders in preparing comments on the interim final rule and the expected notice of proposed rulemaking.

DOE Announces New Wave of Energy Efficiency Rollbacks – Over a Dozen Product Types Could Be Impacted

Following the Trump administration’s previously announced commitment to “slash unnecessary red tape and regulations,” the Department of Energy (DOE) proposed a new suite of deregulatory actions that, if successful, will significantly scale back the Department’s Appliance and Equipment Standards Program. On May 12, 2025, DOE pre-published dozens of proposed rules in the Federal Register (with official publications scheduled for May 16, 2025) that would withdraw covered products determinations and rescind existing test procedures and energy and water conservation standards. While prior administrations, including the first Trump Administration, have sought to slow or even stop the development of new efficiency standards, until now, none have attempted to so significantly weaken or even undo final standards already in force. DOE’s deregulatory efforts will have far-reaching impacts on the companies that manufacture, import, distribute, and sell covered products, the consumers and businesses that purchase such equipment, and the utilities that rely on efficiency standards to reduce demands on the electric grid. Because Congress included a prohibition on “backsliding” in DOE’s authorizing statute, we expect consumer and environmental NGOs and potentially states to challenge these deregulatory efforts in court.

DOE is proposing to revert to statutory efficiency standards for products where the Department subsequently established its own more stringent standards via rulemaking. For example, Congress first set efficiency standards for a subset of external power supplies as part of the Energy Independence and Security Act of 2007. In 2014, DOE completed a rulemaking that strengthened those standards and expanded the scope of covered products. Now, DOE proposes to rescind those 2014 standards, reverting to the standard and scope set out by Congress in 2007, even though manufacturers have been complying with the more stringent standards for many years.

DOE also proposes withdrawing its previously covered product determinations for various products. Congress established certain product categories as “covered products” and empowered DOE to regulate additional product categories if it determined that regulation of those products was warranted. Once the covered product determinations are withdrawn, DOE indicates that standards and test procedures for those products will no longer apply, even where they already exist. For example, DOE is now proposing that portable air conditioners no longer be considered “covered products” under the Energy Policy and Conservation Act (EPCA) and is removing its previously promulgated efficiency standards, which were finalized in 2020 and entered into force in January 2025. In some instances, DOE had previously determined to designate a new covered product category but had not yet finalized a water or energy efficiency standard for that product. DOE is withdrawing a number of those product determinations, meaning that it will not develop standards for those products. For example, in 2021, DOE released a final rule stating that fans and blowers were “covered equipment” and that inclusion was necessary to conserve energy resources. The Biden Administration proposed but eventually declined to finalize efficiency standards for these products. DOE is now proposing to rescind its determination classifying fans and blowers as covered equipment, which will make it more difficult and time-consuming to regulate these products in the future.

DOE has proposed to rescind efficiency standards or test procedures and/or withdraw applicability determinations for well over a dozen product categories, including:

External power supplies

Air cleaners

Fans and blowers

Dehumidifiers

Commercial clothes washers

Compact residential clothes washers

Battery chargers

Automatic commercial ice makers

Commercial prerinse spray valves

Consumer furnace fans

Compressors

Miscellaneous refrigeration products

Portable air conditioners

Microwave ovens

Residential dishwashers

Faucets

Conventional ovens

Conventional cooking tops

Consumer and environmental groups repeatedly sued the first Trump administration over far more modest efforts to slow or stop appliance efficiency rulemakings. The Department’s efforts to roll back or eliminate standards and test procedures in their entirety will no doubt similarly be met with a flurry of legal challenges, primarily concerning whether they run afoul of the anti-backsliding provision of EPCA. The anti-backsliding provision specifies that DOE may not prescribe any amended standard that “increases the maximum allowable energy use . . . of a covered product.” DOE contends that rescinding a rule does not constitute prescribing an amended standard and that the provision “only prevents DOE from setting standards below the statutory maximum or minimum.”

DOE is currently requesting public comments on these proposals, which must be received within 60 days of the date of publication in the Federal Register. DOE will also host a webinar to discuss the proposals on May 29, 2025. Beveridge & Diamond is actively monitoring developments in this area and is ready to assist interested stakeholders in preparing comments on the proposed rules.

Asbestos Litigation Trust Funds Issue Notices of Destruction

Several asbestos litigation trust funds, including the W.R. Grace and Company Asbestos PI Trust; Babcock & Wilcox Company Asbestos Personal Injury Settlement Trust; Owens Corning/Fibreboard Asbestos Personal Injury Trust; Shook & Fletcher Asbestos Settlement Trust; and at least six others[1], issued Notices of Destruction, advising the public that certain documents and data are scheduled to be destroyed on a rolling basis, a process that began April 15, 2025. While some notices have explained the nature of the data and documents to be destroyed, at least one of the trusts (Shook & Fletcher) has admitted to not knowing what information is contained within the documents. The trusts assert that the destruction policies are being implemented to protect sensitive personal information of the claimants[2]. The trusts are permitting parties to download the data that has not yet been destroyed. Without backlash or repercussions to the trusts in these efforts to destroy information, we predict additional trusts will follow suit in announcing their respective intents to destroy data in the future.

Law firms have already begun submitting letters to these trusts, insisting that the proposed destruction seems “designed to avoid the production of data and documents responsive to future subpoenas” and calling for continued preservation[3]. After receiving notice from some of the trusts that these policies would not be reconsidered, counsel for Johnson & Johnson is among the parties that have also filed suit in the Chancery Court in Delaware[4], seeking an injunction against the destruction[5]. The complaint asserts that the trusts’ actions “would permanently destroy evidence that is highly relevant to tens of thousands of known asbestos-related personal injury claims and other legal proceedings across the country” and that the trusts “concocted these new policies in violation of their obligation to preserve the highly relevant information to undermine legal precedent and numerous state trust transparency statutes.”

The trusts, born from post-Chapter 11 bankruptcy proceedings, were established by companies to compensate victims of asbestos-related injuries[6]. Over $30 billion has been set aside by more than fifty trusts for this purpose[7]. Payouts from these asbestos trust funds range from four to seven figures, depending on many factors, including the type of exposure, the disease state, and the length of pain and suffering[8]. Persons alleging harm from exposure to asbestos may collect from multiple asbestos trusts.

Although compensation can be received faster through a trust payout, many victims choose also to file a lawsuit, where the payouts tend to be significantly greater[9]. Defense counsel in asbestos litigation matters, to the extent permitted by local court rules and statutes, rely on proofs of claims from these trusts during discovery to establish possible cross-claims or defenses that may be asserted at trial. The anticipated destruction of data and documents from the trusts will inhibit such ongoing (and future) discovery efforts as litigants may not have any other sources to obtain significant evidence necessary to establish these cross-claims and related defenses[10].

On January 15, 2025, the following trusts submitted Notices of Destruction[11]:

W.R. Grace and Company Asbestos PI Trust for W.R. Grace and Company, a chemical company based out of Maryland;

Babcock & Wilcox Company Asbestos Personal Injury Settlement Trust for Babcock & Wilcox Company, a New Jersey-based energy technologies company; and

Owens Corning/Fibreboard Asbestos Personal Injury Trust for Pittsburgh Corning, a glass block manufacturer headquartered in Pennsylvania; and Owens Corning, the world’s largest fiberglass manufacturer, originally based in Ohio.

As of April 15, 2025, the W.R. Grace and Company, Babcock & Wilcox Company, Pittsburgh Corning, and Owens Corning trusts (collectively referred to herein as the “April Notice Trusts”) began destroying data and documents related to claimants who (1) were issued a payment at least ten years before the date of destruction, (2) have had their claim withdrawn by counsel, or (3) have had their claim deemed withdrawn by their respective April Notice Trust.

On March 3, 2025, Shook & Fletcher, an Alabama-based insulation manufacturer, also posted a Notice of Destruction of Documents[12]. Documents stored at a warehouse in Robbinsville, New Jersey, will be destroyed beginning July 7, 2025. This notice indicated that there are “minimal to no indices” of what documents are contained at the warehouse. The Shook & Fletcher Trust will only entertain requests for inspection of the documents by way of subpoena submitted before July 3, 2025.

Litigants should immediately begin to reach out to the respective point of contact, noting which plaintiffs’ information is sought. Requests for copies of the data and documents from the April Notice Trusts can be submitted directly by email at [email protected] and must be limited to the listed categories of data. Requests for review of documents for the Shook & Fletcher Trust must be submitted in the form of a hand-delivered subpoena to the Wilmington Trust, and must include all information regarding the origin of the subpoena, including the name of the law firm, the point of contact at the law firm, and all relevant contact information of both. Questions concerning subpoenas are to be directed to Amy Behm at (513) 579-6944 or [email protected]. With all the trusts, any fees arising from requesting copies of or review of documents will be the requesting party’s responsibility.

[1] Asbestos Defendants Seek to Prevent Deletion of Claim Records, DOW JONES NEWS WIRES (originally published by WALL STREET JOURNAL) (Apr. 3, 2025); Ben Zigterman, J&J, Others Say Asbestos Trusts Can’t Purge Records, LAW360 (Apr. 15, 2025 at 8:06 p.m.).

[2] Zigterman, Asbestos Trusts Can’t Purge Records, supra note 1.

[3] Asbestos Defendants Seek to Prevent Deletion of Claim Records, supra note 1.

[4] DBMP LLC v. Delaware Claims Processing Facility LLC (2025-0404).

[5] Zigterman, Asbestos Trusts Can’t Purge Records, supra note 1.

[6] 11 U.S.C. § 524.

[7] Jennifer Lucarelli, Mesothelioma and Asbestos Trust Funds, MESOTHELIOMA.COM (last updated Mar. 27, 2025).

[8] Samuel Meirowitz, Mesothelioma and Asbestos Trust Funds, ASBESTOS.COM (last updated Mar. 4, 2025).

[9] What’s the Difference Between an Asbestos Lawsuit and a Trust Fund Claim?, FERRELL LAW GROUP (last visited Apr. 3, 2025).

[10] Zigterman, Asbestos Trusts Can’t Purge Records, supra note 1.

[11] Notice of Record Destruction Pursuant to Record Retention Policy, WRG Asbestos PI Trust (Jan. 15, 2025); Notice of Record Destruction Pursuant to Record Retention Policy, BABCOCK & WILCOX COMPANY ASBESTOS PERSONAL INJURY SETTLEMENT TRUST, (Jan. 15, 2025); Notice of Record Destruction Pursuant to Record Retention Policy, OWENS CORNING/FIBREBOARD ASBESTOS PERSONAL INJURY TRUST (Jan. 15, 2025).

[12] Shook & Fletcher Asbestos Settlement Trust Notice of Destruction of Documents (Mar. 3, 2025).

EPA Provides Update on PFAS Drinking Water Standards

On 14 May 2025, Environmental Protection Agency (EPA) Administrator Lee Zeldin announced the agency’s plan to address the National Primary Drinking Water Regulations (NPDWR) for per- and polyfluoroalkyl substances (PFAS) finalized last spring, as discussed in our prior alert (22 April 2024). Administrator Zeldin announced that the agency would continue to defend the “maximum contaminant levels” (MCLs) for perfluorooctanoic acid (PFOA) and perfluorooctane sulfonic acid (PFOS) of 4 ppt, but would propose regulations intended to withdraw MCLs for four additional PFAS that were included in the NPDWR: perfluorohexane sulfonic acid (PFHxS), perfluorononanoic acid (PFNA), hexafluoropropylene oxide dimer acid (HFPO-DA, commonly known as GenX), and perfluorobutane sulfonic acid (PFBS). EPA is expected to reconsider the regulatory determinations for these four PFAS, which are found in various products, including water or stain protectants and surface coatings for fabrics, including carpets and rugs, as well as fire-fighting foams. Though EPA will still need to officially act on the rescission, water systems are expected to still need to comply with PFOA and PFOS MCLs at 4 ppt, which will be extremely costly.

Last week’s development follows Administrator Zeldin’s 28 April 2025 announcement, discussed in our prior alert, that EPA would address “the most significant compliance challenges” under the final NPDWR published last year. In line with that statement, EPA plans to propose regulations to extend the deadline for MCL compliance from 2029 to 2031, establish a federal exemption framework, and conduct increased outreach to water systems. EPA also committed to supporting the US Department of Justice in defending ongoing legal challenges to the NPDWR with respect to PFOA and PFOS. These challenges have been stayed in federal appellate court while EPA’s new leadership considered its path forward with the NPDWR.

EPA also appears to be prioritizing “holding polluters accountable” and protecting drinking water systems as passive receivers of PFOA and PFOS through reduction of PFAS sources in the environment. How the agency plans to do so is one of many open questions surrounding PFAS regulation under the new administration. Another is whether EPA will withdraw the regulation designating certain PFAS compounds as Comprehensive Environmental Response, Compensation, and Liability Act (CERCLA) hazardous substances, which is still under EPA consideration. However, while last week’s developments, including EPA’s announcement pushing back the Toxic Substances Control Act (TSCA) 8(a)(7) PFAS reporting period from 11 July 2025 to 13 April 2026, with a new submission deadline of 13 October 2026, signal some rollback, PFAS remains an active issue in the new administration.

California Announces Investigative Sweep of Location Data Industry

On March 10, 2025, California Attorney General Rob Bonta announced an investigative sweep targeting the location data industry, emphasizing compliance with the California Consumer Privacy Act (CCPA). This announcement follows the California legislature proposing a bill that, if passed, would impose restrictions on the collection and use of geolocation data.

Of course, concerns about geolocation tracking are not limited to California.

In California, the Attorney General’s investigation involved sending letters to advertising networks, mobile app providers, and data brokers that appear to the Attorney General to be in violation of the CCPA. These letters notify recipients of potential violations and request additional information regarding their business practices. The focus is on how businesses offer and effectuate consumers’ rights to stop the sale and sharing of personal information and to limit the use of sensitive personal information, including geolocation data.

To avoid enforcement actions, businesses in the location data industry must ensure compliance with the CCPA.

Understand Consumer Rights: The CCPA grants California consumers several rights, including the right to know what personal information is being collected, the right to opt out of the sale or sharing of their personal information, and the right to delete their personal information. Because precise geolocation data is “sensitive personal information” under the CCPA, consumer rights also include the right to limit the use or disclosure of such information. Businesses must clearly communicate these rights to consumers (which includes employees).

Implement Opt-Out/Limitation Mechanisms: As noted, businesses must provide consumers with the ability to opt out of the sale and sharing of their personal information, and to limit the use or disclosure of sensitive personal information. This includes implementing clear and accessible opt-out/limitation request mechanisms on websites and mobile apps. Once a consumer opts out, businesses cannot sell or share their personal information unless they receive authorization to do so again.

Transparency and Accountability: Businesses must be transparent about their data collection and disclosure practices. This includes providing detailed privacy policies that explain what data is collected, how it is used, and the categories of third parties to whom it is disclosed. Additionally, businesses should be prepared to respond to inquiries from the Attorney General’s office and provide documentation of their compliance efforts.

Fourth Circuit Ruling Provides New Guidance as to Furnishers’ Duty to Investigate Legal Disputes Under the FCRA

The Fair Credit Reporting Act (FCRA) has been a notoriously active area for litigation, especially class-action litigation, in recent years. One issue that continues to be litigated in FCRA cases involving data furnisher liability is the scope of a review that the furnisher must undertake when a consumer dispute is lodged. In particular, courts have been asked in recent years to decide whether consumer disputes involving legal issues, as opposed to disputes over factual inaccuracies appearing in a consumer’s tradeline, fall within the scope of a review required under the FCRA.

Recent decisions from multiple appellate circuits have taken varying approaches to resolve this issue. Adding to the developing authority on this issue, a recent ruling by the Fourth Circuit determined that furnishers must investigate disputes that are based on information that is objectively and readily verifiable, regardless of whether the dispute involves legal or factual issues.

Background of the Case

The case, Roberts v. Carter-Young, Inc., 131 F.4th 241 (4th Cir. 2025), involved a consumer, Shelby Roberts, who disputed a tradeline on her credit report related to the termination of a prior residential lease. Prior to the tradeline being reported, Roberts’ landlord determined that she was unwilling to pay this debt, and it referred the debt to a collection agency, Carter-Young. Carter-Young later reported the debt on Roberts’ tradeline. Roberts disputed this tradeline with the three major credit reporting agencies (CRAs). When notified of this dispute, Carter-Young limited its investigation to confirming the debt with the landlord.

Believing this tradeline review was insufficient, Roberts brought suit under the FCRA. The District Court dismissed Roberts’ claim, finding that her dispute centered upon legal disputes as opposed to factual inaccuracies, and it was therefore not covered by the FCRA’s investigation requirements. On appeal, however, the Fourth Circuit vacated the District Court’s dismissal and remanded the case for further proceedings.

A New Standard in the Fourth Circuit

The legal holding the Fourth Circuit set forth as to the scope of a data furnisher’s review when a tradeline dispute is lodged is notable. Resolving the legal vs. factual issue for the first time in the Fourth Circuit, the court ruled that furnishers must investigate disputes that are based on information that is objectively and readily verifiable, regardless of whether the dispute involves legal or factual issues. This standard mirrored the standard adopted by the Second Circuit in Sessa v. Trans Union, LLC, 74 F.4th 38 (2d Cir. 2023) and the Eleventh Circuit in Holden v. Holiday Inn Club Vacations, Inc., 98 F.4th 1359 (11th Cir. 2024).

However, the Fourth Circuit did not completely reject the possibility that some legal disputes exceed the purview of the FCRA, recognizing the limitations of a furnisher’s investigation. But under this ruling, a furnisher is expected to make reasonable efforts to verify disputed information, even in a legal dispute, so long as the information to be reviewed is objectively and readily verifiable. As to Roberts’ claims specifically, the Fourth Circuit viewed her underlying dispute about whether the debt was owed as something that needed to be determined at the District Court level under the objectively and readily verifiable standard.

Implications for Furnishers

For furnishers subject to a lawsuit in the Fourth Circuit’s geographical area, this ruling seems to impose a greater responsibility to conduct thorough investigations of tradeline disputes where the disputed information is objectively and readily verifiable. This responsibility likely adds a new layer of review for furnishers accustomed to reinvestigating only facts readily available in their records, as certain legal disputes will now also need to be resolved.

Of course, the adoption of legal verbiage such as “objectively and readily verifiable” isn’t likely to end litigation on this issue. At least in the Fourth Circuit (and in the Second and Eleventh Circuits), litigation will likely now center upon whether specific information is actually objectively and readily verifiable and therefore subject to a furnisher liability claim — or whether it falls outside the scope of the FCRA because it is not objectively and readily verifiable. In some ways, this standard is now even more amorphous than before, where it was easier to identify whether a dispute was over a specific fact or over a legal determination. It will bear watching whether other appellate circuits adopt similar or differing standards in the coming months and whether this issue surfaces before the Supreme Court at some point.

Financial Services and Technology: Florida Changes Law to Make Clear that Collection-Related Emails Are Not Included in the Prohibition on After-Hours Communications

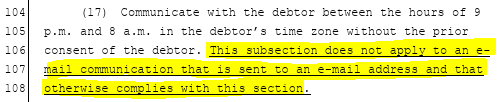

On May 16, Governor Ron DeSantis signed bill CS/CS/SB 232 into law. The bill includes modifications to the Florida Consumer Collection Practices Act (FCCPA) to make clear that the prohibition on communications between “9 p.m. and 8 a.m. without prior consent of the debtor” does not include emails. The financial services plaintiffs’ bar has so far had a field day suing banks, consumer finance companies, and debt collectors for allegedly violating the FCCPA by sending routine loan and account-related emails after 9 p.m. These changes will be welcomed by financial service providers. The Section 559.72(17) changes are set forth below:

EPA Announces Plans to Roll Back Aspects of PFAS Reporting Rule and PFAS Drinking Water Standards

The US Environmental Protection Agency (EPA) recently announced significant changes coming for two of its major rules that regulate per- and polyfluoroalkyl substances (PFAS). First, EPA announced on May 12, 2025, that it is delaying the reporting period for the Toxic Substances Control Act (TSCA) Section 8(a)(7) PFAS Reporting Rule and is also considering reopening the entire rule to make substantive revisions. Second, on May 14, 2025, EPA announced that it plans to withdraw its drinking water standards under the Safe Drinking Water Act (SDWA) for four of the six PFAS that EPA sought to regulate.

These announcements are consistent with EPA’s strategic plan released on April 28 to address PFAS across all program offices (see a previous blog post). These actions signal that EPA is taking steps to provide regulated entities more flexibility and much-needed relief from overly burdensome requirements pertaining to PFAS.

TSCA PFAS Reporting Rule

EPA released an interim final rule that extends the reporting period for the TSCA PFAS Reporting Rule by nine months. The prior reporting period would have begun on July 11, 2025, and closed on January 11, 2026, with a longer deadline of July 11, 2026, for small article importers. The new reporting period will now run from April 13, 2026, to October 13, 2026, with a deadline of April 13, 2027, for small article importers. The interim final rule became effective on May 13, 2025. EPA is accepting comments on the interim final rule until June 12, 2025, on the topic of the reporting period only. After receiving comments, EPA may decide to reopen the rule again and make changes to the reporting period.
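The date arithmetic behind the extension can be checked with a short sketch. The Python below is illustrative only: the dictionary keys and the helper names `whole_months_between` and `in_reporting_window` are hypothetical, the inclusive treatment of the opening and closing dates is an assumption, and only the dates themselves come from the rule as described above. It shows that each date moved by nine whole calendar months and that the standard reporting window remains six months long.

```python
from datetime import date

# Dates as stated in the article; the key names are hypothetical labels.
PRIOR = {
    "opens": date(2025, 7, 11),
    "closes": date(2026, 1, 11),
    "small_article_importers": date(2026, 7, 11),
}
NEW = {
    "opens": date(2026, 4, 13),
    "closes": date(2026, 10, 13),
    "small_article_importers": date(2027, 4, 13),
}


def whole_months_between(start: date, end: date) -> int:
    """Count whole calendar months from start to end, ignoring leftover days."""
    months = (end.year - start.year) * 12 + (end.month - start.month)
    if end.day < start.day:
        months -= 1
    return months


def in_reporting_window(day: date, schedule: dict,
                        small_article_importer: bool = False) -> bool:
    """Return True if `day` falls inside the reporting period.

    Assumption: both the opening date and the deadline are treated as inclusive.
    """
    key = "small_article_importers" if small_article_importer else "closes"
    return schedule["opens"] <= day <= schedule[key]


if __name__ == "__main__":
    for key in PRIOR:
        shift = whole_months_between(PRIOR[key], NEW[key])
        print(f"{key}: moved by {shift} whole months")
    window = whole_months_between(NEW["opens"], NEW["closes"])
    print(f"new standard window length: {window} months")
```

A company could adapt a check like `in_reporting_window` for internal compliance calendars, but the controlling dates are those in the interim final rule itself, which may change again after the comment period.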

The reason for the delay is that EPA needs more time to prepare and beta test the reporting software being developed to collect information that companies will be submitting in response to the reporting rule. EPA believes additional time will also be necessary to take advantage of recently appropriated funds from Congress ($17 million) to improve functionality of the reporting application.

Notably, EPA is also considering reopening certain as-yet-unidentified aspects of the rule for public comment. Stakeholders have been calling for revisions to the PFAS Reporting Rule for years because the rule is one of the most expansive TSCA reporting rules promulgated by EPA. The rule imposes detailed reporting requirements on entities that manufactured or imported PFAS at any time from January 1, 2011, through December 31, 2022. The reporting rule also applies to importers of articles containing PFAS, which could include many consumer, industrial, and commercial products. The rule does not include any of the traditional TSCA reporting exclusions, such as those for PFAS that are impurities or byproducts, are used in commercial research and development (R&D), or are produced or imported only in low volumes (see the previous blog post about the rule for more details). EPA also imposes a complex and highly subjective standard of diligence that companies must apply when gathering PFAS data from their own records and from their supply chains.

While EPA did not indicate what changes it plans to make, its April 28 announcement about PFAS suggests that it may consider easing requirements for small businesses or companies that import articles.

SDWA Drinking Water Standards

EPA also announced that it plans to maintain the national primary drinking water regulations (NPDWRs) promulgated by the prior administration for perfluorooctanoic acid (PFOA) and perfluorooctane sulfonic acid (PFOS), two of the most well-known PFAS. The maximum contaminant levels (MCLs) established for PFOA and PFOS, which are the legally enforceable maximum levels allowed in drinking water, will continue to be 4 parts per trillion (ppt). See our previous blog post for more information on the final rule.

Though it is maintaining the MCLs, EPA intends to propose a rule this fall to extend compliance deadlines for PFOA and PFOS to 2031, establish a federal exemption framework, and initiate outreach to water systems through its new PFAS OUTreach Initiative (PFAS OUT). EPA states that these steps will address the most significant compliance challenges EPA has heard from public water systems, members of Congress, and other stakeholders.

By contrast, EPA will rescind regulations setting MCLs and reconsider the regulatory determinations for four other PFAS that were included in the rulemaking: perfluorohexane sulfonic acid (PFHxS), perfluorononanoic acid (PFNA), HFPO-DA (commonly known as GenX), and the Hazard Index mixture of these three plus perfluorobutane sulfonic acid (PFBS). EPA seeks this rescission to “ensure that the determinations and any resulting drinking water regulations follow the legal process laid out in [SDWA].”

This announcement is in response to a court-ordered deadline for EPA to indicate its plans for the NPDWRs that are currently being challenged in the US Court of Appeals for the District of Columbia Circuit (American Water Works Association (AWWA), et al. v. EPA). EPA intends to support the US Department of Justice in defending any ongoing legal challenges to the NPDWRs for PFOA and PFOS.

HANDLED: Dobronski Destroys 1-800-Law-Firm’s Numerous Efforts to End His Case And It’s Kind of Absurd Really

So I am no fan of Mark Dobronski, but I am a fan of a good story.

Here’s one.

In Dobronski v. 1-800-Law-Firm, 2025 WL 1386024 (E.D. Mich. April 17, 2025), the Court denied a series of motions filed by the Defense seeking to dismiss a TCPA suit filed by Dobronski.

The defendant went to remarkable lengths to attack the case, and while I generally respect the hustle, the arguments here were just not strong.

Starting with the worst argument I have seen in a while, the Defendant accused Dobronski of “deliberate misconduct” for waiting over a year to file his lawsuit, “knowing that industry practice limits third-party vendors’ retention of SMS (Short Message Service) data to one year” and “effectively ensuring that [LAWFIRM] would be unable to access records to defend itself.”

What the hell?

I have not looked at the docket to confirm that argument was actually made but if it was then it is the Defendant who has engaged in “deliberate misconduct.”

Who in the world deletes their records after only one year?

I mean, call recordings sure– those things take up a lot of space– but just deleting all of your records every year sounds like a really bad idea. (Especially since the FTC requires telemarketers to keep records for five years and the FCC requires DNC lists to be maintained basically forever now.)

Unsurprisingly the Court rejected that argument but that was just the start. The Court also rejected the following arguments:

Defendant claims Dobronski filled out a lead form, but since Defendant produced no evidence of the form–and as Dobronski denied filling it out– this argument was rejected;

Defendant argued Dobronski’s failure to “opt out” of their messages somehow meant it had consent to text him– but that’s not how it works. TCPA consent must be obtained before messages are sent;

Defendant argued it had an established business relationship but there was no evidence presented to that effect and Dobronski denied it regardless (the guy, obviously, doesn’t need lawyers);

Defendant argued it is not a telemarketer so the DNC rules do not apply to it but since the messages it sent were, you know, marketing the Court rejected this argument;

Defendant argued the DNC does not apply to calls made for “political, charitable, debt collection, informational, and survey purposes” and that’s true– but Defendant didn’t call for those reasons so this point was utterly frivolous;

Defendant argued Dobronski provided no evidence his number was on the DNC list– but since Dobronski actually did provide a sworn statement to that effect (which would be something called evidence) the Court rejected this argument as well;

Defendant claims Dobronski engaged in an abuse of process by serving discovery demands seeking other businesses to sue– but merely seeking out the names of companies involved in a TCPA violation is plainly within the scope of discovery;

Defendant sought to stay proceedings in the case but the Court found there was no reason to do so;

Defendant also filed a Rule 11 motion but apparently failed to demonstrate by certification that it was served 21 days before it was filed as the rule requires– insane;

Defendant argued “publicly available records” demonstrate Dobronski files meritless cases but Defendant did not produce those records or identify any meritless cases so… yeah;

Defendant also (ironically at this point) claimed Dobronski should be sanctioned because his positions were “unsupported by evidence or legal precedent” although it looks like Defendant should remove the board from its own eye, and all that;

Last, Defendant suggested Dobronski had needlessly “multiple the proceedings” with his behavior, but given that it was Defendant who made 13 frivolous or borderline frivolous arguments here the Court rather immediately rejected the motion for sanctions on that basis.

So yeah.

Just absurd how bad some of these arguments are, and while I do respect grit and hustle in the practice of law I do not respect wasting a court’s time. And that’s what appears to have happened here.