Georgia’s Tort Reform Legislation: Key Procedural Changes

Georgia’s tort reform legislation comes at an opportune time, as jury verdicts in recent years have repeatedly set records. Georgia was ranked the #1 Judicial Hellhole in 2022 and 2023, and #4 in 2024. The new statutes, signed into law on April 21, 2025, aim to promote fairness in civil litigation procedure in the Georgia state courts, realism in the consideration of damages, and commonsense fairness both in trials and in liability standards for property owners, managers, and security personnel when crimes occur on their property. Key procedural changes are detailed below.

Motions to Dismiss

If a defendant files a motion to dismiss, it need not file an answer until 15 days after the court either denies the motion or announces that it will postpone deciding the motion until trial. Discovery is stayed until the court rules on the motion, and the court must rule on the motion within 90 days after briefing on the motion concludes. (Amendment to O.C.G.A. § 9-11-12).

Voluntary Dismissals

Plaintiffs may no longer voluntarily dismiss the complaint at any time before the first witness is sworn at trial. Now, a plaintiff who wishes to dismiss the complaint more than 60 days after the opposing party has filed an answer must either obtain a stipulation from all parties or first obtain a court order. (Amendment to O.C.G.A. § 9-11-41).

Damages Model

The special damages model in Georgia personal injury cases is amended to remove the collateral source rule, as follows:

Truth in special damages. Special damages shall be limited to the reasonable value of medically necessary care. Juries can now consider amounts paid by health insurance or workers’ compensation. Letters of protection are relevant and discoverable. (New O.C.G.A. § 51-12-1.1).

General damages guidelines. The general damages (e.g., pain and suffering) available to a plaintiff are now subject to the following rules:

Plaintiffs may not argue or suggest a specific amount of general damages until closing argument.

If the plaintiff elects to open and close the closing arguments, the plaintiff must state the specific amount sought during the opening portion of the closing argument.

The argument for general damages must be rationally related to the evidence and shall not refer to values having no rational connection to the facts of the case. (Amendment to O.C.G.A. § 9-10-184).

Other Provisions

The playing field at trial is leveled to provide the following:

Seatbelt evidence is admissible. In cases involving motor vehicle accidents, evidence that the plaintiff was not wearing a seatbelt is admissible and relevant to the issues of negligence, comparative negligence, proximate causation, assumption of the risk, and apportionment of fault. (Amendment to O.C.G.A. § 40-8-76.1).

Trial bifurcation/trifurcation available upon request. In any personal injury or wrongful death case, any party may elect to have the trial bifurcated or trifurcated into separate phases: (1) fault, (2) damages, and (3) punitive damages/attorney’s fees. Exceptions to the right to bifurcation/trifurcation may be made upon motion in cases involving alleged sexual offenses and in cases involving less than $150,000 in dispute. (New O.C.G.A. § 51-12-15).

Finally, a new series of statutes provides governance and guidance for negligent security cases.

The new laws provide stricter standards for imposing liability in negligent security cases and clarify the expectations placed on premises owners in the state.

Now, for a premises owner/occupier to be held liable to an injured invitee for negligent security, the plaintiff must prove:

(a) The third person’s wrongful conduct was reasonably foreseeable;

a. “Reasonably foreseeable” may be established by showing that the owner/occupier:

i. Had particularized warning of imminent wrongful conduct by a third person; or

ii. Reasonably should have known that a third person was reasonably likely to engage in such wrongful conduct on the premises based on one of the following:

Substantially similar prior incidents on the premises of which the owner/occupier had actual knowledge;

Substantially similar prior incidents on adjoining premises or otherwise occurring within 500 yards of the premises of which the owner/occupier had actual knowledge; or

Substantially similar prior incidents by the same third person that the owner/occupier had actual knowledge about and the owner/occupier knew or should have known that the third person would be on the premises.

(b) The injury sustained was a reasonably foreseeable consequence of the third person’s wrongful conduct;

(c) The third person’s wrongful conduct was a reasonably foreseeable consequence of the third person exploiting a specific physical condition of the premises known to the owner/occupier, which created a reasonably foreseeable risk of wrongful conduct on the premises that was substantially greater than the general risk of wrongful conduct in the vicinity of the premises;

(d) The owner/occupier failed to exercise ordinary care to remedy or mitigate the specific and known physical condition and to otherwise keep the premises safe from the third person’s wrongful conduct; and

(e) The owner/occupier’s failure to exercise ordinary care was a proximate cause of the injury sustained.

For a premises owner/occupier to be held liable to an injured licensee (e.g., a tenant’s social guest) for negligent security, the plaintiff must prove:

(a) The third person’s wrongful conduct was reasonably foreseeable because the owner/occupier had particularized warning of imminent wrongful conduct by a third person;

(b) The injury sustained was a reasonably foreseeable consequence of the third person’s wrongful conduct;

(c) The third person’s wrongful conduct was a reasonably foreseeable consequence of the third person exploiting a specific physical condition of the premises known to the owner/occupier, which created a reasonably foreseeable risk of wrongful conduct on the premises that was substantially greater than the general risk of wrongful conduct in the vicinity of the premises;

(d) The owner/occupier willfully and wantonly failed to exercise any care to remedy or mitigate the specific and known physical condition and to otherwise keep the premises safe from the third person’s wrongful conduct; and

(e) The owner/occupier’s failure to exercise any care was a proximate cause of the injury sustained.

Moving forward, in no case will a premises owner/occupier be held liable for negligent security where:

The injured party was a trespasser

The injury was sustained on premises not owned/occupied by the owner/occupier

The wrongful conduct complained of did not occur on the premises and in a place from which the owner/occupier had the authority to exclude the third person

The third-party wrongdoer was either a tenant under eviction or the guest of a tenant under eviction

The injured person came to the premises for the purpose of committing, or was engaged in committing, a felony or theft

The injury occurred at a single-family residence or

The owner/occupier made any reasonable effort to provide information to law enforcement about a particularized warning of imminent wrongful conduct by a third person.

In order to assess whether the owner/occupier breached a duty to exercise ordinary care to keep persons on or around their premises safe from a third party’s wrongful conduct, courts and juries shall consider any relevant circumstances, including but not limited to:

The security measures employed at the premises at the time the injury occurred

The need for any additional or other security measures

The practicality of additional or other security measures

Whether additional or other security measures would have prevented the injuries

The respective responsibilities of owners/occupiers with respect to the premises and government with respect to law enforcement and public safety.

Moreover, juries are now required to apportion fault among all parties, including the criminal wrongdoer. If a jury assigns more fault to the property owner than to the criminal wrongdoer, then the court is required to order a new trial. There shall be a rebuttable presumption that an apportionment of fault is unreasonable if the percentage of fault assigned to the criminal wrongdoer(s) is less than the total percentage of fault assigned to all property owners, occupiers, managers, and security contractors. (New O.C.G.A. §§ 51-3-50 – 51-3-57).
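The rebuttable presumption described above turns on a simple comparison of fault percentages. The sketch below is a hypothetical illustration only: the `Party` record, the role labels, and the function name are invented for this example, and the code merely flags when the presumption arises as this article describes it. Whether the presumption is rebutted, and what relief follows, remains a question for the court.

```python
from dataclasses import dataclass

# Hypothetical role labels used only for this illustration.
WRONGDOER = "criminal_wrongdoer"
PROPERTY_SIDE = {"owner", "occupier", "manager", "security_contractor"}


@dataclass
class Party:
    name: str
    role: str             # e.g. "criminal_wrongdoer", "owner", "manager"
    fault_percent: float  # percentage of fault assigned by the jury


def presumption_of_unreasonable_apportionment(parties: list[Party]) -> bool:
    """Return True when the total fault assigned to criminal wrongdoer(s) is
    less than the total fault assigned to all property owners, occupiers,
    managers, and security contractors (the trigger described above)."""
    wrongdoer_total = sum(p.fault_percent for p in parties if p.role == WRONGDOER)
    property_total = sum(p.fault_percent for p in parties if p.role in PROPERTY_SIDE)
    return wrongdoer_total < property_total


# Hypothetical verdict: 40% to the assailant, 60% combined to property-side parties.
verdict = [
    Party("Assailant", WRONGDOER, 40.0),
    Party("Apartment owner", "owner", 35.0),
    Party("Security contractor", "security_contractor", 25.0),
]
print(presumption_of_unreasonable_apportionment(verdict))  # True: 40 < 35 + 25
```

Note that the comparison is against the combined share of all property-side defendants, so a verdict can trigger the presumption even when no single owner or contractor is assigned more fault than the wrongdoer, as in the hypothetical above.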

Practical Implications of the Negligent Security Legislation

Given the new guidelines, it is critical that property owners and managers conduct regular inspections. If there are fences, they should be checked monthly and each inspection documented. The same goes for gates, warning/no-trespassing signs, locks, cameras, lighting, and other physical conditions or installations on the property.

Property owners and managers should consider current security measures and whether additional or different measures might be appropriate. If multiple reports of similar crimes are received, then property owners and/or managers should consider asking a security consultant to perform a premises security assessment and to make any recommendations for additional or different security measures at the premises.

The process for tenants to communicate security concerns or reports to the property manager should be seamless and explained to all current and new tenants. Tenants should be encouraged to provide as much detail as possible, including the specific location on the property where the crime or other security issue occurred. All such reports should be maintained for at least three years, and a line of communication about tenant security complaints should be established with local police.

Staff should be trained to recognize when a tenant reports an immediate threat from another person and to notify the police immediately by calling 9-1-1. Staff should document each such report to the police and maintain the records for no less than three years.

When Do These Changes Apply?

Thankfully, most of the changes take effect immediately and apply even in pending cases. There are two exceptions, which apply only to claims accruing on or after April 21, 2025:

New code section O.C.G.A. § 51-12-1.1, limiting recoverable special damages to the reasonable value of medically necessary care, allowing juries to consider the actual costs paid, and making letters of protection relevant and discoverable

The negligent security legislation.

“Accruing” means that the underlying incident giving rise to the claim occurred on or after the effective date.

Health Care Fraud and Abuse 2024 Year in Review

Polsinelli proudly presents the Health Care Fraud and Abuse 2024 Year in Review, offering a comprehensive analysis of False Claims Act (FCA) enforcement and broader fraud and abuse developments over the past year.


Sabrina Marquez, Nicole K. Nielly, and Evan M. Schrode contributed to this article

DOJ Announces Key Corporate Enforcement Changes & White-Collar Priorities

DOJ recently announced white-collar crime enforcement priorities and significant changes to its corporate enforcement policies. “[O]verbroad and unchecked corporate and white-collar enforcement burdens U.S. businesses and harms U.S. interests,” and “[n]ot all corporate misconduct warrants federal criminal prosecution,” according to the memo. The policy changes are intended to help companies understand what to expect when making a disclosure and to clarify the additional benefits available to companies that self-disclose and cooperate.

Priority Enforcement Areas

DOJ’s Criminal Division will prioritize investigating and prosecuting white-collar crimes in certain identified high-impact areas, as summarized below:

Health care and federal program and procurement fraud;

Trade and customs fraud, including tariff evasion;

Fraud perpetrated through variable interest entities, including securities fraud, and other market manipulation schemes;

Fraud that victimizes U.S. investors, individuals, and markets, including Ponzi schemes, investment fraud, elder and servicemember fraud, and fraud that threatens the health and safety of consumers;

National security threats to the U.S. financial system by financial institutions and their insiders that commit sanctions violations;

Material support by corporations to foreign terrorist organizations, including recently designated cartels;

Complex money laundering, including Chinese Money Laundering Organizations, and other organizations involved in laundering funds used in the manufacturing of illegal drugs;

Violations of the Controlled Substances Act and the Federal Food, Drug, and Cosmetic Act, including counterfeit fentanyl pills and unlawful distribution of opioids by medical professionals and companies;

Bribery and associated money laundering that impact U.S. national interests, undermine U.S. national security, harm the competitiveness of U.S. businesses, and enrich foreign corrupt officials; and

Crimes involving digital assets that victimize investors and consumers; that use digital assets in furtherance of other criminal conduct; and willful violations that facilitate significant criminal activity.

DOJ has also called for an increased investigative pace and directed prosecutors to “move expeditiously to investigate cases and make charging decisions” to ensure “that [investigations] do not linger and are swiftly concluded.”

Corporate Enforcement Policy Changes

New changes to the Corporate Enforcement and Voluntary Self-Disclosure Policy (“CEP”) include the following:

For companies that satisfy all required elements (voluntary self-disclosure, full cooperation, and timely and appropriate remediation) and have no aggravating circumstances, the CEP now makes clear that there “is a clear path to declination.” This is a marked contrast to prior guidance, which entitled companies to a “presumption” of a declination.

Companies that are willing to satisfy the core criteria but are hesitant to voluntarily self-disclose because of aggravating circumstances may still qualify for a declination under the revised CEP. In these cases, the DOJ will consider “the severity of those aggravating circumstances and the company’s cooperation and remediation,” offering a potential path to avoid prosecution.

The revised CEP also clarifies that companies may still qualify for meaningful benefits when self-disclosing in good faith – such as a non-prosecution agreement, a 75 percent reduction in criminal fines, and no requirement to appoint a monitor – even if the disclosure is not considered timely or comes after the DOJ, without the company’s awareness, has already discovered the misconduct.

DOJ also announced revisions to the Criminal Division’s standards and policy for selecting monitors in Criminal Division matters.

Monitorships will be limited to those cases where deemed “necessary,” including “when a company cannot be expected to implement an effective compliance program or prevent recurrence of the underlying misconduct without such heavy-handed intervention.” Specifically, the monitor selection standards will be revised to clarify “the factors that prosecutors must consider when determining whether a monitor is appropriate and how those factors should be applied,” as well as require that monitorships be “narrowly tailor[ed]…to address the risk of recurrence of the underlying criminal conduct and to reduce unnecessary costs.” The factors prosecutors may consider include: (1) “the nature and seriousness of the conduct” and the risk of recurrence; (2) other available regulatory oversight; (3) the effectiveness of the company’s compliance program at resolution; and (4) the maturity of the company’s internal controls, including the company’s ability to test its compliance program as well as make improvements.

DOJ’s corporate whistleblower program will also undergo an evaluation to identify “additional areas of focus” that align with the Administration’s key initiatives. “[P]rocurement and federal program fraud; trade, tariff, and customs fraud; violations of federal immigration law; and violations involving sanctions, material support of foreign terrorist organizations, or those that facilitate cartels and TCOs, including money laundering, narcotics, and Controlled Substances Act violations” have all been included as areas of interest.

Takeaways

Voluntary Self-Disclosure now means “a clear path to declination” rather than a “presumption” of a declination.

Look for an uptick in False Claims Act investigations in the international trade area, including customs fraud and tariff evasion.

DOJ is requiring prosecutors to “move expeditiously to investigate cases,” and will be actively monitoring investigation timelines and progress to ensure prompt resolution. This means that stakeholders and outside counsel will need to conduct internal investigations and make decisions more quickly.

Notwithstanding the 180-day FCPA pause, bribery that enriches foreign officials and undermines U.S. business remains a priority.

Monitorships now appear to be the exception, not the rule, for corporate resolutions.

Deferred Prosecution Agreements may be back given that DOJ is making clear “[n]ot all corporate misconduct warrants federal criminal prosecution.” Rather, DOJ is directing Criminal Division prosecutors to weigh “additional factors,” such as whether a company self-disclosed and fully cooperated, as well as its remediation efforts, when assessing whether to prosecute.

Companies will want to stay ahead of these developments and revised corporate enforcement policies. As we have reported, well-designed compliance programs help to mitigate not only bribery and corruption risks, but also money laundering, sanctions issues, human rights violations, and financial fraud risks. Effective and adequately resourced compliance programs also help to foster positive speak-up cultures, ensuring that employees feel comfortable reporting suspected misconduct via available internal channels and reporting mechanisms. Maintaining robust internal controls makes companies better equipped and prepared to flag potential misconduct early as well as navigate difficult considerations around self-disclosure. Companies should continue to evaluate their compliance programs, including the effectiveness and efficiency of their internal reporting mechanisms and internal investigations processes.

Justices Reject “Moment of Threat” Rule in Police Shooting Case – SCOTUS Today

The most anticipated event at the U.S. Supreme Court today was the oral argument in the birthright citizenship case.

While the question of birthright citizenship, which the Romans called jus soli, is important both in terms of constitutional law and American customs and mores, the underlying question in the case raises a procedural issue that will most affect the litigators who follow this blog.

That is the question of whether cases involving injunctive or declaratory relief should be resolved by the issuance of nationwide injunctions and orders or just be limited to the actual parties and the district in which the case is at bar. The consolidated cases currently before the Court could be decided expeditiously. In any event, we shall follow developments closely and report promptly when a decision is issued.

For today, only one decision was forthcoming, with Justice Kagan writing for a unanimous Court in Barnes v. Felix, a not-insignificant case that reads like a law school exercise, but a very vivid and immediate one. Law enforcement officer Roberto Felix, Jr., pulled Ashtian Barnes over for suspected toll violations. Barnes ignored the officer’s order to exit the vehicle and began to drive away. Felix immediately jumped onto the doorsill and fired two shots into the car, fatally wounding Barnes, who was able to stop the car before he died. The entire encounter took five seconds, and only two seconds elapsed from the time the officer stepped onto the doorsill of the car until he fired.

On her son’s behalf, Barnes’s mother sued Felix, alleging that Felix had used excessive force in violation of the Fourth Amendment. In granting and upholding summary judgment in favor of Felix, the trial court and the U.S. Court of Appeals for the Fifth Circuit applied the “moment of threat” rule, which asks only if the officer was “in danger at the moment of the threat that resulted in [his] use of deadly force.” That rule renders the events preceding the shooting irrelevant. Because Felix reasonably could have believed that his life was in danger at the critical moment, the shooting was held to be lawful.

However, the Supreme Court unanimously vacated the Fifth Circuit’s judgment and remanded the case for further proceedings in what, I respectfully suggest, was a reasonable, thoughtful, and well-written opinion by Justice Kagan, whose writing is generally down-to-earth and direct.

The Court’s opinion begins with the recognition that there is no “easy-to-apply legal test” or “on/off switch” in this analysis. “Rather, the Fourth Amendment requires . . . that a court ‘slosh [its] way through’ a ‘factbound morass.’” Having done considerable sloshing, the Court held that a claim of excessive force during a stop or arrest is analyzed under the Fourth Amendment to establish whether the force applied was objectively reasonable from “the perspective of a reasonable officer at the scene.” In turn, this requires analyzing the “totality of the circumstances.”

But, contrary to the lower courts, the Supreme Court noted that the “totality of the circumstances” inquiry has no time limit. “While the situation at the precise time of the shooting will often matter most, earlier facts and circumstances may bear on how a reasonable officer would have understood and responded to later ones. Prior events” (such as the nature of the crime or warnings given to the suspect) “may show why a reasonable officer would perceive otherwise ambiguous conduct as threatening, or instead as innocuous.”

In the post-George Floyd times of fractious controversies over police confrontations with citizens, today’s decision demonstrates that all the Justices recognize the difficulty in recreating the conditions that have led to a police shooting and the mindsets and actions of both officers and suspects. The resolution of this case sensibly requires that analyzing “facts and circumstances” means considering all the facts and circumstances, beyond just the moment in which deadly force is employed.

An Overview of the Department of State’s New ‘Catch-and-Revoke’ Visa Policy

On April 30, 2025, U.S. Secretary of State Marco Rubio announced a shift in U.S. immigration policy by formally introducing the State Department (DOS)’s “catch-and-revoke” visa policy. This new approach underscores the Trump administration’s increased focus on immigration by allowing the government to revoke visas of non-U.S. citizens. While the policy aims to deter criminal activity, employers should be mindful of its potential impact on foreign national employees. Under this one-strike policy, any foreign national caught breaking U.S. laws risks losing their visa status. The DOS announcement emphasizes that a visa is not a right but a privilege, and the DOS is prepared to cancel such visas in cases of legal infractions by foreign nationals. Although the policy primarily targets individuals convicted of crimes such as assault or domestic violence, its implementation remains ambiguous. As it stands, there is a lack of clarity around which types of violations, including minor traffic infractions, might trigger visa revocation.

From an employer’s perspective, the catch-and-revoke policy signals a need for heightened vigilance in compliance efforts. Organizations that sponsor visas may need to reassess their risk management and legal strategies to safeguard their workforce against disruptions. This may be especially relevant for industries that depend on specialized skills often sourced from international talent pools. Importantly, employers should note that a visa revocation does not automatically invalidate a foreign national’s underlying non-immigrant status or work authorization in the United States. A revocation does, however, invalidate the visa stamp that the employee would need to return to the United States after international travel. As such, employees whose visas have been revoked should proceed with extra caution when traveling internationally, as they may face difficulties in obtaining a new visa and reentering the United States.

The catch-and-revoke policy underscores that U.S. immigration policies are evolving rapidly. For employers, careful planning and adaptation may help enhance compliance while continuing to attract and retain global talent. For foreign nationals, understanding and adhering to U.S. laws is more essential than ever.

DOJ Alert: New White-Collar Priorities and Stronger Incentives to Self-Report

DOJ sets out new enforcement priorities for corporate and white-collar crime and emphasizes “focus, fairness and efficiency.”

This week, Matthew R. Galeotti, Head of the Criminal Division at the US Department of Justice, issued a memorandum outlining the Department’s renewed enforcement priorities and updating policies regarding the prosecution of corporate and white-collar crime. In this memorandum, Galeotti emphasized a commitment to addressing “the most urgent criminal threats to the country,” and promoting equality and efficiency throughout the Department. The DOJ will concentrate its efforts and resources on both longstanding areas of focus and new areas identified in the current administration’s “America First Priorities” memorandum, including:

Material Support by Corporations to Cartels, Transnational Criminal Organizations (TCOs) and Foreign Terrorist Organizations (FTOs): As Bracewell previously reported, the DOJ is intensifying its scrutiny of companies that provide material support or resources to designated FTOs or that facilitate the criminal operations of cartels and TCOs through bribery. On his first day in office, President Trump issued a series of executive orders addressing this issue. Accordingly, businesses operating in high-risk regions, such as parts of Mexico and other areas of Central and South America, as well as the Middle East, are encouraged to adopt robust internal controls and compliance protocols to mitigate investigation and enforcement risk.

Waste, Fraud and Abuse (Health Care Fraud and Federal Program and Procurement Fraud): The DOJ will continue its efforts to hold accountable individuals and corporations that defraud vital government programs such as Medicare, Medicaid and defense-related initiatives, thereby resulting in harm to the public fisc.

Trade and Customs Fraud, Including Tariff and Sanctions Evasion: The DOJ will focus its resources on combatting these forms of fraud, as they threaten the US economy, American competitiveness and national security.

Fraud Perpetrated Through Variable Interest Entities (VIEs): The America First Investment Policy highlights the importance of investor protection against fraudulent practices tied to certain foreign adversary companies listed on US exchanges. The DOJ will focus its efforts on VIEs (typically “Chinese-affiliated” companies listed on US exchanges) that facilitate fraud in the US markets via schemes such as “ramp and dumps,” elder fraud, securities fraud and other forms of market manipulation.

Fraud that Victimizes US Investors, Individuals and Markets: This includes, but is not limited to, Ponzi schemes, investment fraud, elder fraud, servicemember fraud and fraud that threatens the health and safety of consumers.

Conduct that Threatens US National Security: This includes, but is not limited to, threats to the US financial system by gatekeepers, such as financial institutions and their insiders that commit sanctions violations or enable transactions by cartels, TCOs, hostile nation-states and/or foreign terrorist organizations.

Complex Money Laundering: The DOJ will focus on Chinese money laundering organizations and other organizations involved in laundering funds used in the manufacturing of illegal drugs.

Violations of the Controlled Substances Act and the Federal Food, Drug, and Cosmetic Act (FDCA): This includes the unlawful manufacture and distribution of chemicals and equipment used to create counterfeit pills laced with fentanyl and the unlawful distribution of opioids by medical professionals and companies.

Bribery and Associated Money Laundering Impacting U.S. National Interests: The DOJ recognizes that bribery and money laundering undermine US national security, harm the competitiveness of US businesses and enrich foreign corrupt officials.

Crimes Involving Digital Assets: As provided by the Digital Assets DAG Memorandum, the DOJ will focus on crimes (1) involving digital assets that victimize investors and consumers; (2) that use digital assets in furtherance of criminal conduct; and (3) willful violations that facilitate significant criminal activity. Notably, cases involving cartels, TCOs, or terrorist groups, or that facilitate drug money laundering or sanctions evasion will receive the highest priority.

Related Policy Updates

To address these areas of focus, Galeotti announced that the Department would undergo various policy updates, as summarized below:

Incentives for Corporate Self-Reporting: Acknowledging that not all corporate misconduct warrants prosecution, the DOJ is encouraging companies to self-report violations. In emphasizing its dedication to equality and fairness, the Department has revised the Corporate Enforcement and Voluntary Self-Disclosure Policy (CEP) to now offer clearer benefits (such as potential declinations and fine reductions) for companies that demonstrate transparency, cooperation and a genuine commitment to remediation. In a recent speech, Galeotti stated that companies will have a “clear path to declination” from criminal charges if they “voluntarily self-disclose to the Criminal Division, fully cooperate, timely and appropriately remediate, and have no aggravating circumstances” and that “[c]ompanies that are ready to take responsibility should not be overburdened by enforcement.” Even where a company does not qualify for declination, resolutions resulting in non-prosecution agreements, deferred prosecution agreements or reduced penalties may be available. Prosecutors are encouraged to conduct case-by-case assessments of the facts while prioritizing transparency and fairness in their determinations.

Streamlined Corporate Investigations: To promote efficiency in addressing complex, cross-border white-collar investigations, the Department will collaborate closely with relevant authorities to expedite investigations and make timely charging decisions. In fact, the central theme of Galeotti’s memorandum is the emphasis the Department will now place on “three core tenets: (1) focus; (2) fairness; and (3) efficiency.”

Enhanced Whistleblower Protections: Galeotti also directed updates to the Criminal Division’s Corporate Whistleblower Awards Pilot Program to reflect the DOJ’s current enforcement priorities. In addition to existing categories of eligibility, new eligible “Subject Areas” for whistleblower tips that lead to forfeiture will now include:

Corporate violations related to international cartels or TCOs (e.g., money laundering, narcotics, Controlled Substances Act infractions);

Corporate breaches of federal immigration law;

Material support of terrorism;

Corporate sanctions violations;

Trade, tariff and customs fraud; and

Corporate procurement fraud.

Narrowly Tailored Use of Monitorships: Through this memorandum, the DOJ has emphasized that independent compliance monitorships should only be imposed when a company is unlikely to implement an effective compliance program or otherwise address the root causes of misconduct. When monitorships are deemed necessary, they must be narrowly tailored to meet essential objectives while minimizing cost, burden and disruption to legitimate business operations — further exemplifying how the Department’s three core tenets will be practically applied. In support of this principle, Galeotti announced a new monitor selection memorandum that outlines the key factors prosecutors should consider when evaluating the appropriateness of a monitorship and emphasizes the importance of tailoring the monitor’s scope to the specific risks of future criminal conduct, thereby avoiding unnecessary expenses. The Department has also initiated a case-by-case review of all existing monitorships to assess their continued necessity.

Key Takeaways

To what extent these new focus areas and priorities will truly shift the DOJ’s operations remains to be seen. However, in light of the announcement, companies should consider the following measures:

Conduct an updated risk assessment and create or revise internal controls and policies to address specific risks that align with DOJ’s stated enforcement priorities.

Conduct an audit of the company’s compliance program to test and evaluate its effectiveness in preventing and detecting wrongdoing, and make necessary policy and internal control changes to address any material weaknesses.

Review and update policies and processes around internal complaints. Ensuring that stakeholders are encouraged to raise complaints internally, so that the company can review, investigate and, if needed, self-report, is more important than ever.

Assess and review internal investigation procedures to ensure that internal investigations are efficient and thorough, allowing the company to capitalize on self-disclosure benefits.


Focus, Fairness and Efficiency: DOJ Reveals Administration’s Plans for Enforcement of Corporate and White-Collar Crime

Key Takeaways

DOJ Criminal Division will prioritize enforcement in key areas, including health care fraud, trade and customs violations and national security-related financial crimes.

Companies that voluntarily self-disclose, cooperate and remediate may qualify for declinations, reduced penalties and shortened compliance obligations even where aggravating factors are present.

Corporate compliance agreements will generally be capped at three years, with early termination available based on remediation, risk reduction and program maturity.

Independent compliance monitors will be imposed only when necessary and must be narrowly tailored to limit burden and business disruption.

On May 12, 2025, the Head of the Department of Justice’s (DOJ) Criminal Division, Matthew R. Galeotti, issued a memorandum detailing the Division’s enforcement priorities and policies for prosecuting corporate and white-collar crimes under the Trump administration.

The memorandum describes the need for the Division’s policies to “strike an appropriate balance” between investigating and prosecuting criminal wrongdoing “while minimizing unnecessary burdens on American enterprise.” In accordance with this position, the memorandum describes areas that the Division will be particularly focused on investigating and prosecuting, while also emphasizing the Division’s willingness to reduce criminal and civil sanctions when corporations self-disclose and cooperate with the government.

Overall, the memorandum describes enforcement priorities and associated policies that align with the Trump administration’s focus on rooting out government waste and abuse, toughening U.S. policy on foreign trade and combatting national security concerns such as drug trafficking and foreign criminal organizations, citing several executive orders on these topics.

The Criminal Division’s “Areas of Focus” include:

Federal program fraud, waste and abuse—specifically health care fraud and procurement fraud;

Trade and customs fraud, including tariff evasion;

Fraud perpetrated through variable interest entities (VIEs), including schemes targeting elders, “ramp and dumps,” and other forms of market manipulation;

Fraud that victimizes U.S. investors, individuals and markets, such as Ponzi schemes, schemes targeting elders and servicemembers and fraud that threatens consumer health and safety;

Financial institutions that commit sanctions violations or enable transactions by Transnational Criminal Organizations (TCOs), drug cartels, hostile nation-states and/or foreign terrorist organizations;

Corporations that provide “material support” to foreign terrorist organizations, cartels and TCOs;

Complex money laundering schemes—specifically referencing “Chinese Money Laundering Organizations” and other organizations involved in laundering money used in the manufacturing of illegal drugs;

Violations of the Controlled Substances Act and the Federal Food, Drug, and Cosmetic Act, including the unlawful manufacture and distribution of products used to create counterfeit pills containing fentanyl and the unlawful distribution of opioids by medical professionals and companies;

Bribery that impacts U.S. national interests, undermines U.S. national security and harms the competitiveness of the U.S.; and

Crimes involving the use of digital assets—with cases impacting victims, involving cartels, TCOs, or terrorist groups or facilitating drug money laundering or sanctions evasion receiving the highest priority.

After outlining the Criminal Division’s investigation and prosecution priorities, the memorandum indicates that the Department will take a more relaxed approach to misconduct committed by corporations that are willing to report such conduct, cooperate with the government and take actions to remediate the misconduct. In fact, these are factors that Criminal Division prosecutors must now consider when determining whether to bring criminal charges against corporations.

The memorandum further states that the Division’s Corporate Enforcement and Voluntary Self-Disclosure Policy will be revised to clarify additional benefits that are available to companies that self-disclose and cooperate with the government and will provide a “more easily understandable” path for declination and fine reductions. As part of this effort, the Criminal Division’s Fraud Section and Money Laundering and Asset Recovery Section have been instructed to review all existing corporate compliance agreements to determine if they should be terminated early. Factors that these Sections may consider when determining whether early termination is warranted include the duration of the post-resolution period, a substantial reduction in the company’s risk profile, the extent of remediation and maturity of the compliance program and whether the company self-reported the misconduct.

Additionally, Division attorneys must consider several factors when imposing terms for corporate compliance agreements, including the severity of the misconduct, the company’s degree of cooperation and remediation and the effectiveness of the company’s compliance program at the time of resolution. The memorandum provides that the terms of such agreements should not exceed three years “except in exceedingly rare cases,” and Division attorneys should assess these agreements regularly to determine if they should be terminated early.

Lastly, the memorandum provides policy changes with respect to the use of independent compliance monitors. Namely, the Division will only impose such monitoring when necessary, for example, when a company cannot be expected to implement an effective compliance program or prevent recurrence of the underlying misconduct, and the monitoring must be narrowly tailored to minimize expense, burden and interference with business.

Mr. Galeotti discussed these policy changes while speaking at the Securities Industry and Financial Markets Association’s Anti-Money Laundering and Financial Crimes Conference on May 13, 2025, stating that companies will now have a “clear path to declination” through self-disclosure, full cooperation with the government and timely remediation. Galeotti stated that even companies with aggravating circumstances may receive declination if the company’s cooperation and remediation outweigh these circumstances. Furthermore, Galeotti indicated that even companies that self-disclose after the government has become aware of their misconduct can still qualify for shorter-term compliance agreements, fine reduction and lessened monitoring.

Taken together, this memorandum and other guidance issued by DOJ under the direction of U.S. Attorney General Pam Bondi indicate that the Department intends to treat corporate misconduct outside certain areas of focus with a lighter hand, incentivizing corporations to be transparent with the government and, as stated in the Division’s memorandum, “learn from their mistakes.”

DOJ Criminal Division Updates (Part 2): Department of Justice Updates its Corporate Criminal Whistleblower Awards Pilot Program

On August 1, 2024, the Department of Justice’s (DOJ) Criminal Division launched a three-year Corporate Whistleblower Awards Pilot Program (the “Pilot Program”). (See Part 1 and Part 3 of this series for more information.) The Pilot Program marked a significant effort by the DOJ to enhance its ability to fight corporate and white collar crime by enlisting whistleblowers to aid in the effort. On May 12, 2025, the DOJ released updated guidance (the “Updated Guidance”) related to the Pilot Program in order to reflect the updated enforcement priorities and policies of the administration under President Trump, also announced on May 12, 2025. In this article, we provide an overview of the Pilot Program and lay out the recent changes to the guidance.

Overview of the Pilot Program

As originally announced in August 2024, the Pilot Program allowed for financial recovery for whistleblowers who provided successful tips relating to “possible violations of law” for four categories of crimes: (1) foreign corruption and bribery, (2) financial institution crimes, (3) domestic corporate corruption, and (4) health care fraud involving private insurance plans.

Eligibility & Key Terms

To be eligible, potential whistleblowers must meet the following criteria:

Financial Threshold. To qualify under the Pilot Program, the information provided must lead to a successful forfeiture exceeding $1 million.

Originality. The information provided by the whistleblower must be based on the individual’s independent knowledge and cannot already be known to the DOJ. The DOJ will not consider information obtained through privileged communications.

Lack of “Meaningful Participation” in the Reported Criminal Activity. A whistleblower is ineligible for an award if they “meaningfully participated” in the activity they are reporting. Pilot Program guidance provides that an individual who was “directing, planning, initiating, or knowingly profiting from” the criminal conduct reported is not eligible. Conversely, someone who was involved in the scheme in such a minimal role that they could be “described as plainly among the least culpable of those involved” would be able to recover an award under the Pilot Program.

Truthful and Complete Information. To qualify for an award, a whistleblower must provide all information of which they have knowledge, including any misconduct they may have participated in. If a whistleblower withholds information, they are ineligible to recover an award under the Pilot Program. This requirement includes full cooperation with the DOJ in any investigation, including providing truthful testimony during interviews, before a grand jury, and at trial or any other court proceedings and producing all documents, records, and other relevant evidence.

Award Structure

If eligible, a whistleblower may be entitled to a discretionary award of up to 30% of the first $100 million in net proceeds forfeited and up to 5% of any net proceeds forfeited between $100 million and $500 million. Under the relevant criminal forfeiture statutes, proceeds are forfeitable only if they are derived from or substantially involved in the commission of an offense. As a result, the net proceeds forfeited may be less than the actual loss.
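To make the tiered cap concrete, the following minimal Python sketch computes the maximum award the Pilot Program permits for a given amount of net proceeds forfeited. Because awards are fully discretionary, the result is a ceiling, not a prediction of what DOJ will pay. The function name `max_award`, the whole-dollar rounding, and the assumption that the $1 million threshold applies to net proceeds forfeited are illustrative choices, not terms taken from the program guidance.

```python
# Illustrative sketch only: computes the ceiling on a discretionary award.
THRESHOLD = 1_000_000      # assumed: forfeiture must exceed $1 million
TIER_1_CAP = 100_000_000   # first $100 million: up to 30%
TIER_2_CAP = 500_000_000   # $100 million to $500 million: up to 5%


def max_award(net_proceeds_forfeited: int) -> int:
    """Return the maximum possible award in whole dollars (hypothetical helper)."""
    if net_proceeds_forfeited <= THRESHOLD:
        return 0  # below the program's financial threshold
    tier_1 = min(net_proceeds_forfeited, TIER_1_CAP)
    tier_2 = max(0, min(net_proceeds_forfeited, TIER_2_CAP) - TIER_1_CAP)
    # Net proceeds above $500 million add nothing to the ceiling in this sketch.
    return tier_1 * 30 // 100 + tier_2 * 5 // 100


print(max_award(300_000_000))
```

For example, $300 million in net proceeds forfeited yields a ceiling of $40 million: 30% of the first $100 million ($30 million) plus 5% of the next $200 million ($10 million).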

Unlike other similar whistleblower programs, any award under the Pilot Program is fully discretionary: there is no guaranteed minimum amount that a whistleblower will recover. In determining whether a whistleblower will receive an award, the DOJ will consider whether the information provided was specific, credible, and timely, and whether it significantly contributed to the forfeiture. The DOJ also assesses the whistleblower’s level of assistance and cooperation throughout the investigation.

Corporate Self-Disclosure

The Pilot Program gives companies a 120-day window to self-disclose information related to an internal whistleblower report. Companies choosing to self-disclose “misconduct” covered by the Pilot Program within the allotted 120-day window will remain eligible for a presumption of declination (i.e., no prosecution) under the Corporate Enforcement and Voluntary Self-Disclosure Policy, which also was updated as announced on May 12, 2025 (the “Self-Disclosure Policy”). This 120-day window applies even if the whistleblower has already reported misconduct to the DOJ.

Companies choosing to self-disclose also must meet the other requirements of the Self-Disclosure Policy to qualify for a presumption of declination. In addition to a timely self-disclosure, companies must cooperate fully with the investigation, identify responsible individuals, remediate all harms, and disgorge ill-gotten gains.

Changes in the May 2025 Updated Guidance

The Updated Guidance reaffirms the DOJ’s commitment to the Pilot Program and leaves its three-year term unchanged unless DOJ announces otherwise. Most of the Pilot Program’s specifics remain the same, including the requirements for whistleblower eligibility, the self-disclosure policy, and the amount that whistleblowers stand to gain.

The primary update is a change to the subject matter to which a whistleblower’s report must pertain in order to be eligible for recovery. Under the Pilot Program as initially announced, information provided by a whistleblower must have related to the following substantive areas:

Violations by financial institutions such as money laundering, failure to comply with anti-money laundering compliance requirements, and fraud against or non-compliance with financial institution regulators.

Violations related to foreign corruption and bribery, including violations of the Foreign Corrupt Practices Act, money laundering statutes, and the Foreign Extortion Prevention Act.

Violations related to the payment of bribes or kickbacks to domestic public officials.

Violations related to federal health care offenses involving private or non-public health care benefit programs, where the overwhelming majority of claims were submitted to private or other non-public health care benefit programs.

Violations related to fraud against patients, investors, or other non-governmental entities in the health care industry, where these entities experienced the overwhelming majority of the actual or intended loss.

Any other federal violations involving conduct related to health care not covered by the federal False Claims Act (FCA).

In its Updated Guidance, the DOJ removes certain language from these categories, thus broadening the substantive reach of the Pilot Program:

Removes the requirement that violations related to federal health care offenses involve “private or non-public” health care benefit programs.

Removes the requirement that the overwhelming majority of claims for federal health care offenses were submitted to private or other non-public health care benefit programs.

Removes the requirement that patients, investors, or other non-governmental entities experience the overwhelming majority of actual or intended loss.

Removes entirely the qualifying category for reports involving health care-related violations not covered by the FCA.

Consistent with the Trump administration’s focus on tariffs, immigration, and cartels, among other enforcement priorities, the DOJ adds priority subject-matter areas that now qualify for a potential whistleblower award:

Violations related to fraud against, or deception of, the United States in connection with federally funded contracting or federal funding that does not involve health care or illegal health care kickbacks.

Violations related to trade, tariff, and customs fraud.

Violations related to federal immigration law.

Violations related to corporate sanctions offenses.

Violations related to international cartels or transnational criminal organizations, including money laundering, narcotics, and Controlled Substances Act violations.

Concurrently with its Updated Guidance, the DOJ issued a memorandum entitled “Focus, Fairness, and Efficiency in the Fight Against White-Collar Crime.” This memo clearly lays out the priorities of the DOJ’s Criminal Division under the Trump administration, including but not limited to “trade and customs fraud,” “conduct that threatens the country’s national security,” and combatting “foreign terrorist organizations” such as “recently designated Cartels and [Transnational Criminal Organizations].” The DOJ stated that amendments to the Pilot Program were intended to “demonstrate the Division’s focus on these priority areas.” The changes in the Updated Guidance closely track the stated priority areas, and they reflect that while the Pilot Program will continue, its focus may shift to reflect the additional goals of the Trump administration.

Recommendations for Minimizing Risk Under the Pilot Program

While the recent changes to the Pilot Program broaden the scope of potential whistleblower reports and may implicate companies in industries that were previously not likely to be subject to the program, the substantive best practices for minimizing risk of a whistleblower seeking to take advantage of the Pilot Program remain the same, even with the Updated Guidance. Companies therefore should take this opportunity to review and update their whistleblower response policies to ensure they are clear, being followed, and effective.

Have a preexisting compliance program that encompasses all relevant subject-matter areas. Given the 120-day window to self-disclose under the Pilot Program, companies must be able to undertake complete internal investigations on a short timeline. Companies should ensure they have strong and robust internal reporting structures for misconduct of any type and that they are prepared to promptly investigate any alleged misconduct. Companies should protect the confidentiality of whistleblowers, not retaliate, and not impede whistleblowers from reporting potential violations to the government. To the extent that a company’s compliance program defines potential “misconduct” more narrowly than the Pilot Program, those companies should consider expanding the scope of their compliance function to ensure all potential violations of criminal law are thoroughly investigated.

Conduct internal investigations under privilege. The Pilot Program provides that information is not “original” if the whistleblower obtained it through a communication subject to the attorney-client privilege. It also disqualifies potential whistleblowers if they learned the information in connection with the company’s process for identifying, reporting, and addressing potential violations of law. Therefore, it is essential for companies to preserve privilege while conducting internal investigations. In-house or outside counsel should guide the investigation, and the scope and purpose of the investigation should be documented in writing. Companies should be careful with the extent to which they involve non-attorneys in the investigation (if at all) and should ensure the investigation is being led by attorneys and for the purpose of obtaining attorney advice.

Consider self-disclosure where appropriate. If a company chooses to self-disclose potential misconduct within the 120-day period provided by the Pilot Program, the company is entitled to a presumption of declination under the Self-Disclosure Policy. Where there is any question regarding whether a company has uncovered “misconduct,” this presumption may put a thumb on the scale for self-disclosing, although note that the program also requires companies to cooperate throughout the ensuing government investigation.

Be aware of pre-existing self-disclosure requirements. In contesting the eligibility of potential whistleblowers, companies should consider whether they are already subject to a requirement to self-disclose. Such a requirement may come from obligations imposed on all federal grant recipients. It could stem from serving as a government contractor, since such contractors are already required to disclose evidence of potential violations of federal criminal law. The obligation to self-disclose may also come from a corporate integrity agreement put in place following a prior FCA settlement. If any of these scenarios apply, a potential whistleblower is less likely to be deemed to have come forward voluntarily with original information, and there may be an argument that the whistleblower therefore does not qualify for an award under the Pilot Program.

Lori Rubin Garber also contributed to this article.

DOJ Criminal Division Updates (Part 3): New Reasons for Companies to Self-Disclose Criminal Conduct

On May 12, 2025, the U.S. Department of Justice (DOJ) announced revisions to its Criminal Division Corporate Enforcement and Voluntary Self-Disclosure Policy (CEP). (See Part 1 and Part 2 of this series for more information.) As a part of the new administration’s priorities and policies for prosecuting corporate and white collar crimes, the head of the Criminal Division directed the Fraud Section and Money Laundering and Asset Recovery Section to revise the CEP and clarify that additional benefits are available to companies that self-disclose and cooperate.[1]

The CEP encourages companies to self-disclose misconduct, fully cooperate with investigations, and remediate issues — and, in turn, potentially reduce their criminal exposure. Though the scope and criteria for compliance with the CEP have evolved since it was announced in 2016, a constant has been the presumption of declination for a company in compliance. This amorphous “presumption” has long drawn complaints from practitioners and companies, due to its lack of certainty in outcomes — especially weighed against the often-extensive investigations and work corporations do to comply with the policy.

The latest revision to the CEP seeks to address complaints about the lack of certainty by providing specific conditions to companies considering voluntary self-disclosure and a pathway to guaranteed declination. The revised CEP also establishes significant benefits for companies that may not meet the requisite declination requirements but fall into other categories. Understanding the nuances of the revised CEP is crucial for companies to ensure they are well positioned to benefit from the revised CEP, should a company find itself in a position to self-disclose misconduct. The key aspects of the May 2025 Revised CEP are as follows:

Declination of Prosecution

Four conditions must be met for DOJ to decline criminal prosecution of a company:

Voluntary Self-Disclosure. The company must have proactively and promptly reported previously unknown misconduct to the Criminal Division, without having an obligation to do so and without an imminent threat of disclosure or government investigation.

Full Cooperation. The company must have “fully cooperated” throughout the investigation process by, among other things, timely disclosing and voluntarily preserving relevant documents and information as well as making company officers and employees who possess relevant information available for interviews by prosecutors and investigators.

Timely and Appropriate Remediation. The company must have taken prompt and effective corrective actions, including investigating underlying conduct and root causes, appropriately disciplining wrongdoers, and implementing an effective compliance and ethics program to reduce future risks.

No Aggravating Circumstances. There should be no significant aggravating factors related to the misconduct, such as its severity, scope, or repeated occurrence, nor recent criminal adjudications for similar offenses.

Near Miss Cases: Voluntary Self-Disclosures with Aggravating Factors

The revised CEP also creates a middle ground for companies that self-report in good faith but do not meet all other voluntary self-disclosure requirements. In these “near miss” situations, the DOJ may offer a Non-Prosecution Agreement (NPA), which typically provides the following benefits:

A term length of fewer than three years.

No requirement for an independent compliance monitor.

A 75% reduction off the low end of the U.S. Sentencing Guidelines fine range.

Resolutions in Other Cases

The revised CEP also outlines a third route to resolution: If a company’s situation does not qualify for a declination or an NPA, prosecutors still have discretion to determine the appropriate resolution. This includes the imposition of penalties, term lengths, compliance obligations, and monetary fines. Prosecutors typically will apply a reduction from the low end of the fine range for non-recidivist companies that have fully cooperated and remediated the misconduct.
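The three resolution paths described above can be summarized as a simple decision procedure. The Python sketch below is a simplification for illustration only: the `CompanyFacts` fields and the `cep_pathway` and `near_miss_fine` names are hypothetical, real CEP outcomes turn on prosecutorial judgment, and the sketch assumes that the near-miss path, like declination, still requires full cooperation and timely remediation.

```python
from dataclasses import dataclass


@dataclass
class CompanyFacts:
    """Hypothetical yes/no inputs mirroring the revised CEP criteria above."""
    voluntary_self_disclosure: bool  # proactive, prompt, not required or imminent
    good_faith_self_report: bool     # reported in good faith, even if not a qualifying VSD
    fully_cooperated: bool
    timely_remediated: bool
    aggravating_circumstances: bool


def cep_pathway(f: CompanyFacts) -> str:
    """Return the resolution path suggested by this simplified flow."""
    # Path 1: all four declination conditions are met.
    if (f.voluntary_self_disclosure and f.fully_cooperated
            and f.timely_remediated and not f.aggravating_circumstances):
        return "declination"
    # Path 2 ("near miss"): a good-faith self-report that falls short of path 1.
    # Assumption: cooperation and remediation are still required here.
    reported = f.voluntary_self_disclosure or f.good_faith_self_report
    if reported and f.fully_cooperated and f.timely_remediated:
        return "near-miss NPA"
    # Path 3: everything else is left to prosecutorial discretion.
    return "prosecutorial discretion"


def near_miss_fine(low_end_of_range: int) -> int:
    """Apply a 75% reduction off the low end of the Guidelines fine range."""
    return low_end_of_range * 25 // 100
```

Under this sketch, a company that voluntarily self-discloses, cooperates and remediates but has aggravating circumstances lands on the near-miss path, and a $10 million low end of the fine range would be reduced to $2.5 million.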

Why This Matters

DOJ’s message through the revised CEP is clear: Do the right thing and you will be rewarded.

A company’s timely and effective voluntary remediation and self-disclosure can now result in guaranteed declination of criminal prosecution, if the company complies with the steps laid out in the revised CEP. Companies should keep these benefits in mind not only when faced with a decision point regarding self-disclosure of misconduct but also as they proactively evaluate the effectiveness of their compliance programs and whether they are adequately resourced. Foley is here to help with your compliance and internal investigations needs, as well as counsel you through evaluating self-disclosure under the revised CEP.

[1] See Foley blog post on May 12, 2025 Criminal Division White Collar Enforcement Plan Memo.

Increased Clarity for White-Collar Clients: The Department of Justice Unveils its Revised Corporate Self-Disclosure Policy

What should U.S. businesses take from the Department of Justice’s (“DOJ”) revisions to its Corporate Enforcement and Voluntary Self-Disclosure Policy (“CEP”)? While DOJ has long promoted self-disclosure of wrongdoing as a key way to obtain leniency, DOJ’s revised policy states clearly and unequivocally that self-disclosure will lead to non-prosecution in certain circumstances.

On May 12, 2025, the Criminal Division released a memorandum detailing the new administration’s goals for prosecuting corporate and white-collar crimes. The memorandum sets forth the government’s view that “overbroad and unchecked corporate and white-collar enforcement burdens U.S. businesses and harms U.S. interests,” and directs federal prosecutors to scrutinize all their investigations to avoid overreach that deters innovation by U.S. businesses. Matthew R. Galeotti, Chief of the DOJ Criminal Division, recently underscored these sentiments on May 12, 2025, at SIFMA’s Anti-Money Laundering and Financial Crimes Conference, stating that under the revised CEP, companies can avoid “burdensome, years-long investigations that inevitably end in a resolution process in which the company feels it must accept the fate the Department has ultimately decided.”

Companies that self-disclose possible misconduct, fully cooperate with the government, and meet the policy’s other conditions will not be required to enter into a criminal resolution with the DOJ. Galeotti said that under the CEP’s “easy-to-follow” flow chart, companies that (1) voluntarily self-disclose to the Criminal Division, (2) fully cooperate, (3) timely and appropriately remediate, and (4) have no aggravating circumstances “will receive a declination, not just a presumption of a declination.” The revised CEP allows that even a company that self-discloses in good faith after the government becomes aware of the misconduct may still be eligible to receive a non-prosecution agreement with a term of fewer than three years, a 75% reduction off the low end of the U.S. Sentencing Guidelines fine range, and no corporate monitorship.

To be sure, this does not mean that U.S. companies should treat these policy changes as an opportunity to take unnecessary risks without fear of prosecution. Indeed, DOJ’s main priority is to prosecute individuals, including executives, officers, or employees of companies, and the Department will “investigate these individual wrongdoers relentlessly to hold them accountable.” Although it remains to be seen how the government will implement its new guidelines, the revised enforcement policy is helpful to U.S. businesses, white-collar clients, and their advisors, who have long hoped for greater transparency and clearer guidelines for potential outcomes under the DOJ’s corporate self-disclosure program.

Think Compliance Got Easier? Think Again—DOJ’s New Era in White-Collar Enforcement

Many have speculated as to how white-collar enforcement may change during President Trump’s second term. A recent memorandum by the Head of the Department of Justice’s (“Department”) Criminal Division, Matthew R. Galeotti, sheds light on that issue. Specifically, on May 12, Galeotti issued a memorandum—“Focus, Fairness, and Efficiency in the Fight Against White-Collar Crime” (the “Galeotti Memorandum”). Galeotti covers a number of topics in the memorandum, including the “three core tenets” that the Criminal Division will follow when prosecuting white-collar matters. Those tenets are: “(1) focus; (2) fairness; and (3) efficiency.” We will cover each of those pillars in three posts this week. This post delves into the first tenet—focus.

As an initial matter, the Galeotti Memorandum affirms the Department’s commitment to “do justice, uphold the rule of law, protect the American public, and vindicate victims’ rights.” He emphasizes the “significant threat to U.S. interests” that white-collar crime poses. Galeotti explains that the Department is adopting a “targeted and efficient” approach to white collar cases that “does not allow overbroad enforcement to harm legitimate business interests.” Galeotti further cautioned that governmental overreach “punishes risk-taking and hinders innovation.”

Under the focus prong, the Galeotti Memorandum directs prosecutors to concentrate on issues that pose a “significant threat to US interests.” Galeotti first walks through the harms stemming from white-collar crime, including:

The exploitation of governmental programs, including health care fraud and defense spending fraud;

The targeting of U.S. investors or actions that otherwise undermine market integrity, such as elder fraud, investment fraud, and Ponzi schemes;

The targeting of monetary systems that compromise “economic development and innovation;”

Threats to the American economy and national security; and

The corruption of the American financial system.

In light of those harms, Galeotti identifies the following priority areas for the Criminal Division:

Health care fraud and other waste, fraud, and abuse;

Trade and customs fraud;

Elder fraud, securities fraud, and other fraud facilitated by variable interest entities;

Complex money laundering, including “Chinese Money Laundering Organizations;”

Fraud targeting “U.S. investors, individuals, and markets;”

Crimes that compromise national security;

Corporate support of “foreign terrorist organizations;”

Crimes implicating “the Controlled Substances Act and the Federal Food, Drug, and Cosmetic Act;”

Money laundering and bribery implicating “U.S. national interests,” “national security,” competition, and the benefit of “foreign corrupt officials;” and

Criminal conduct that involves “digital assets that victimize investors and consumers,” use those assets to further “other criminal conduct,” and “willful violations that facilitate significant criminal activity.”

In addition, the Department will focus on identifying and seizing the proceeds of crimes included in the list above and using those proceeds “to compensate victims.” Prosecutors will also prioritize crimes “involving senior-level personnel or other culpable actors, demonstrable loss,” and obstruction of justice.

The Department is also expanding its Corporate Whistleblower Awards Pilot Program to prioritize tips that result in forfeiture in areas such as:

Conduct involving “international cartels or transnational criminal organizations;”

Federal immigration law violations;

Conduct “involving material support of terrorism;”

“Corporate sanctions offenses;”

Corporate conduct involving “[t]rade, tariff, and customs fraud;” and

Procurement fraud by corporations.

As noted above, we will examine the other two prongs of the Galeotti Memorandum, fairness and efficiency, in two follow-up posts. The first prong makes clear, however, that the Department remains focused on white-collar crime, particularly in the health care industry.

Clearer Carrots and More Restrained Sticks: Key Updates to DOJ Corporate Enforcement Policies

“The Criminal Division is turning a new page on white-collar and corporate enforcement.” So pronounced the head of the US Department of Justice (DOJ) Criminal Division, Matthew Galeotti, in a recent speech rolling out several new policies regarding central elements of DOJ’s approach to corporate enforcement, including self-disclosure, whistleblowers, and corporate monitorships.1

In a new enforcement plan titled “Focus, Fairness, and Efficiency in the Fight Against White-Collar Crime” (Enforcement Plan) and addressed to all Criminal Division personnel,2 Galeotti sets out several areas of focus for the Criminal Division that align with the Trump administration’s already-announced priorities and introduces some key process changes. The Enforcement Plan also recognizes the significant costs and intrusions that accompany federal probes and emphasizes the need for prosecutors to take all reasonable steps to minimize the length and impact of such investigations.

To that end, the Enforcement Plan introduces three particularly significant policy changes. First, and most meaningful, the Enforcement Plan unveils an updated Corporate Enforcement and Voluntary Self-Disclosure Policy (CEP)3 that provides a path to guaranteed declinations or non-prosecution agreements (NPAs). The intention is apparent: DOJ is continuing its emphasis on self-disclosure, cooperation, and remediation. To spur more self-disclosures, DOJ is offering “carrots” that are bigger and more definite, so that companies know what to expect when they decide to call DOJ. Second, the new policies extend the Corporate Whistleblower Awards Pilot Program to conduct involving key administration priorities, such as immigration, transnational criminal organizations (TCOs), sanctions, and tariffs.4 Third, revised policies now limit the imposition of burdensome compliance monitorships as part of corporate criminal resolutions.5

Focus Areas

The Enforcement Plan directs the Criminal Division to be “laser-focused on the most urgent criminal threats to the country” and identifies 10 “high-impact areas” that will be prioritized in investigating and prosecuting white-collar crimes. These include long-standing categories as well as areas that align with the Trump administration’s previously stated goals:

Waste, fraud, and abuse, including healthcare fraud and federal program and procurement fraud.

Trade and customs fraud, including tariff evasion.

Fraud in connection with “variable interest entities” (VIEs), which are typically associated with Chinese-affiliated companies listed on US exchanges.

Investment fraud.

National security threats and material support to foreign terrorist organizations, cartels, and TCOs.

Money laundering offenses.

Violations of federal drug control laws, including those relating to fentanyl and opioids.

Bribery that impacts US national interests, national security, or competitiveness of US businesses.

Crimes involving digital assets.

The Enforcement Plan also specifies that the Criminal Division will focus on compensating victims and prioritizing schemes that involve senior-level personnel, demonstrable loss, and efforts to obstruct justice—in particular where that harm impacts US citizens.

New Paradigm for Self-Disclosure: Definite and Clear Benefits

In an effort to “transparently [describe] the benefits that a company may earn through voluntarily self-disclosing misconduct,”6 the Criminal Division now guarantees that it will offer a company a declination so long as it meets the following four factors:

The company voluntarily self-discloses misconduct that is not previously known to DOJ, prior to an imminent threat of disclosure, and within a reasonably prompt time after the company becomes aware of it;

The company fully cooperates with the Criminal Division’s investigation;

The company timely and appropriately remediates the misconduct; and

There are no “aggravating circumstances” related to the nature and seriousness of the offense, egregiousness or pervasiveness of the misconduct, severity of the harm, or a prior criminal resolution within the last five years based on similar misconduct.

This assurance of a declination replaces the prior version of the CEP, which offered only a “presumption” of a declination under those same factors. Now, if a company meets these factors, DOJ will issue a declination, although the company must still pay all disgorgement and forfeiture, as well as restitution to any victims, and the declination will be made public.

Even when a company does not meet all four criteria for a guaranteed declination, the new policy defines benefits for what it calls “near miss” scenarios, i.e., those where a company self-disclosed after the government became aware of the conduct or where aggravating factors are present. In such circumstances, companies are promised significant self-disclosure benefits: an NPA with a term of fewer than three years, a 75% reduction from the low end of the fine range, and avoidance of a corporate monitor.

Finally, for companies that do not self-disclose, full cooperation and remediation may still earn cooperation credit at the prosecutor’s discretion. That credit may affect the form of the resolution, its term, the monetary penalty (capped at a 50% reduction in the guidelines range), and whether to impose a corporate monitor.

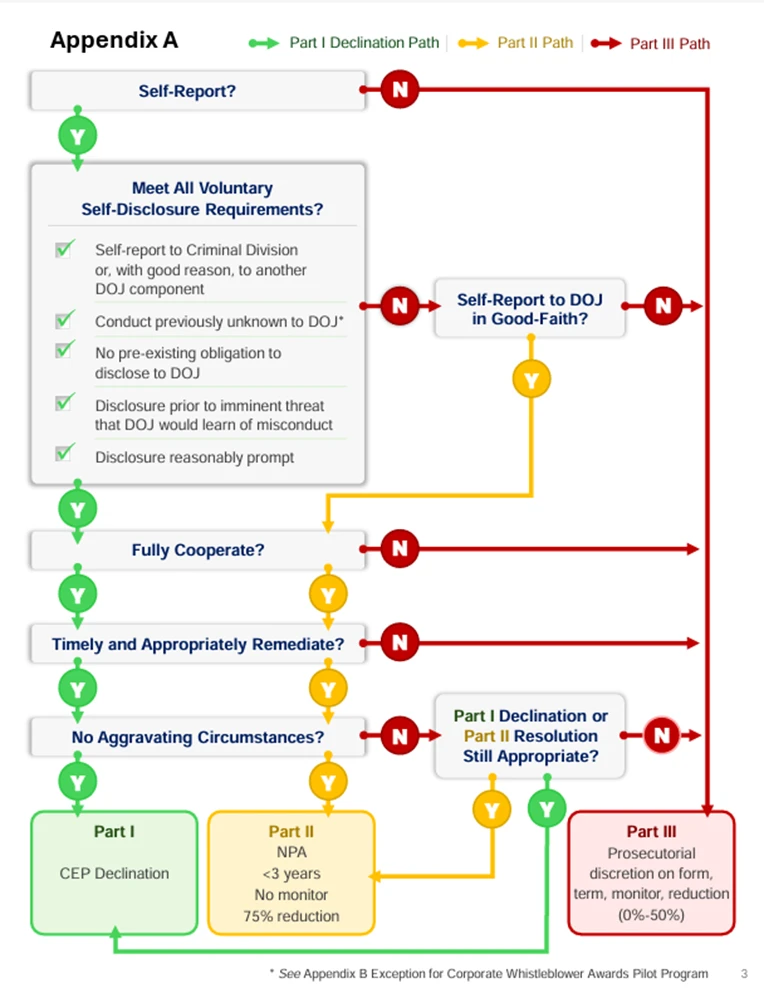

To aid companies as they navigate these benefits, the CEP now includes the following flow chart:

Source: 9-47.120 – Criminal Division Corporate Enforcement and Voluntary Self-Disclosure Policy

An Expanded Whistleblower Pilot Program

The Criminal Division also renewed its commitment to the Corporate Whistleblower Awards Pilot Program, originally unveiled under the prior administration, and modified it, primarily by changing the types of matters covered so that they align with the Enforcement Plan’s areas of focus.

The three-year pilot program, launched in August 2024, was designed to offer significant payouts to tipsters who provide information on certain frauds that lead to asset forfeitures above US$1 million. The program’s mechanics remain largely unchanged, including a 120-day window in which a company can self-disclose and receive the full benefits of the CEP even if a whistleblower has reported the same allegations to the government. The updated policy, however, extends coverage to areas that the Trump administration has repeatedly identified as priorities: procurement and federal program fraud; trade, tariff, and customs fraud; violations of federal immigration law; violations involving sanctions; and material support of foreign terrorist organizations, cartels, or TCOs, including money laundering, narcotics, and Controlled Substances Act violations. Notably, the program continues to cover violations related to foreign corruption and bribery, including violations of the Foreign Corrupt Practices Act (FCPA) and the Foreign Extortion Prevention Act (FEPA).

Monitors Reserved for Egregious Cases

As for independent compliance monitors, they will be fewer and far between: the new policy notes that the value they add is often outweighed by the substantial costs and distractions they impose on a company. DOJ now lists several criteria it will evaluate to ensure that an independent monitor is imposed only when necessary and when the potential benefits justify the significant costs and burden.

In the “limited” circumstances when an independent compliance monitor is imposed, DOJ will ensure that the monitorship is appropriately tailored, right-sized to the conduct, and focused on restoring the company to good standing and preventing future misconduct. To that end, DOJ will now require a fee cap, oversee budgets and workplans, and require regular meetings with the Criminal Division.

Key Takeaways

These policy announcements reveal a much-anticipated shift by the Trump administration toward a corporate enforcement approach that recognizes and places greater emphasis on the practical impacts that DOJ actions have on corporations. By providing clearer guidelines and guaranteed benefits from self-reporting and cooperation—while limiting some of the more burdensome and costly penalties—DOJ is explicitly trying to adjust the carrot-and-stick calculations for companies that may encounter potential misconduct within their ranks. All companies active within the United States must carefully consider the ways that these policies change the risk environment.

Importance of Professional Internal Investigations

Conducting a focused, efficient, and credible internal investigation soon after allegations of misconduct arise remains as important as ever. To obtain the benefits of the revised CEP, including a guaranteed declination or NPA, a company must self-disclose within a reasonably prompt period, which requires the company to understand the issue and assess whether it warrants disclosure. Moreover, under the renewed and expanded whistleblower program, if a whistleblower reports misconduct both internally and to DOJ, the company has only a 120-day window to self-disclose and still satisfy the CEP requirements. In short, when an allegation arises that may reflect a federal criminal issue, a timely and professional investigation may yield substantial benefits.

Continue to Assess Compliance Programs

The DOJ announcements recognize the important role that US corporations play and that an effective compliance program is the “first line of defense” against misconduct.7 Such programs should include avenues for internal reporting and investigation of allegations, so that companies are well positioned to take advantage of the enhanced benefits under the CEP. Companies should also consider the “areas of focus” noted in the Enforcement Plan and how their compliance programs address them, as applicable to the particular circumstances of their business. It may also be appropriate for programs to go beyond historical areas of focus, such as antibribery and conflicts of interest, to include policies, procedures, and training that cover sanctions, export controls, and tariff compliance, and that help companies avoid any connections to TCOs and cartels.

Carefully Evaluate Self-Disclosure Decisions

Although the “carrots” offered by DOJ are now clearer, more beneficial, and more predictable, decisions about whether, when, and how to self-disclose remain complex and involve many considerations. The prospect of a declination or NPA is attractive and now more certain, but self-disclosure still carries consequences beyond criminal fines and restitution, including ancillary litigation, business, and reputational risks. Decisions regarding self-reporting should be soberly reviewed with counsel.