New York’s Paid Prenatal Leave: What NYC Employers Need to Know About the DCWP’s Proposed Amendments to the ESSTA Rules

On January 6, 2025, shortly after the New York State Department of Labor (NYSDOL) issued guidance on the New York State Paid Prenatal Leave Law, which took effect on January 1, 2025, the New York City Department of Consumer and Worker Protection (DCWP) proposed amendments to the rules implementing New York City’s Earned Safe and Sick Time Act (ESSTA) to incorporate the state’s paid prenatal leave requirements.

Quick Hits

New York State’s paid prenatal leave law, which went into effect on January 1, 2025, requires that employers provide employees twenty hours of paid leave per year to receive prenatal care.

The NYSDOL recently released new guidance in the form of answers to frequently asked questions (FAQs) to assist employers in understanding and implementing the new requirements under the Paid Prenatal Leave Law.

In part, the DCWP’s proposed amended rules are consistent with the paid prenatal leave law, but if adopted, certain provisions would impose additional requirements that exceed what is otherwise required under the state law.

Overview of the Proposed Rules

Notable provisions of the proposed rules include sections:

requiring employers to maintain and distribute a written policy addressing paid prenatal leave that meets or exceeds all of the requirements of the ESSTA;

authorizing employers to require reasonable written documentation that the use of paid prenatal leave was for purposes authorized by law if the use of such leave results in an absence of more than three consecutive days, and as with the ESSTA, specifying that an employer shall not withhold payment of paid prenatal leave when the required documentation is unattainable by the employee due to associated costs;

requiring that pay statements, or an electronic equivalent, show the amount of paid prenatal leave used and the balance of paid prenatal leave still available for use;

providing that upon mutual consent of employer and employee, the employee’s schedule may be changed instead of using paid prenatal leave, and specifying that employers shall not require an employee to work additional hours to make up for time used for paid prenatal leave or search for or find a replacement employee to cover hours during which the employee uses paid prenatal leave; and

specifying that the penalties that may be imposed by the DCWP for violation of the paid prenatal leave requirements include but are not limited to the full amount of any underpayment of wages owed and interest, liquidated damages of up to 100 percent of the total amount of wages found to be due and, for prohibited retaliation, liquidated damages of up to $20,000, reinstatement or front pay in lieu of reinstatement, lost wages, and injunctive relief.

Next Steps

A public hearing is scheduled for February 14, 2025, and interested parties may provide feedback during the comment period, which ends on the same day.

Employers with employees in New York City may want to review the proposed rules to determine what additional obligations they may face if the rules are adopted in substantially the same form as currently proposed.

The Colorado AI Act: Implications for Health Care Providers

Artificial intelligence (AI) is increasingly being integrated into health care operations, from administrative functions such as scheduling and billing to clinical decision-making, including diagnosis and treatment recommendations. Although AI offers significant benefits, concerns regarding bias, transparency, and accountability have prompted regulatory responses. Colorado’s Artificial Intelligence Act (the Act), set to take effect on February 1, 2026, imposes governance and disclosure requirements on entities deploying high-risk AI systems, particularly those involved in consequential decisions affecting health care services and other critical areas.

Given the Act’s broad applicability, including its potential extraterritorial reach for entities conducting business in Colorado, health care providers must proactively assess their AI utilization and prepare for compliance with forthcoming regulations. Below, we discuss the intent of the Act, what types of AI it applies to, future regulation, potential impact on providers, statutory compliance requirements, and enforcement mechanisms.

1. What Is the Act Trying to Protect Against?

The Act primarily seeks to mitigate algorithmic discrimination, defined as AI-driven decision-making that results in unlawful differential treatment or disparate impact on individuals based on certain characteristics, such as race, disability, age, or language proficiency. The Act seeks to prevent AI from reinforcing existing biases or making decisions that unfairly disadvantage particular groups.

Examples of Algorithmic Discrimination in Health Care

Access to Care Issues: AI-powered phone scheduling systems may fail to recognize certain accents or accurately process non-English speakers, making it more difficult for non-native English speakers to schedule medical appointments.

Biased Diagnostic Tools and Treatment Recommendations: Some AI diagnostic tools may recommend different treatments for patients of different ethnicities, not because of medical evidence but due to biases in the training data. For instance, an AI model trained primarily on data from white patients might miss early signs of disease that present differently in Black or Hispanic patients, resulting in inaccurate or less effective treatment recommendations for historically marginalized populations.

By targeting these and other AI-driven inequities, the Act aims to ensure automated systems do not reinforce or exacerbate existing disparities in health care access and outcomes.

2. What Types of AI Are Addressed by the Act?

The Act applies broadly to businesses using AI to interact with or make decisions about Colorado residents. Although certain high-risk AI systems (those that are a substantial factor in making consequential decisions) are subject to more stringent requirements, the Act imposes obligations on most AI systems used in health care.

Key Definitions in the Act

“Artificial Intelligence System” means any machine-based system that generates outputs — such as decisions, predictions, or recommendations — that can influence real-world environments.

“Consequential Decision” means a decision that materially affects a consumer’s access to or cost of health care, insurance, or other essential services.

“High-Risk AI System” means any AI tool that makes or substantially influences a consequential decision.

“Substantial Factor” means a factor that assists in making a consequential decision or is capable of altering the outcome of a consequential decision and is generated by an AI system.

“Developers” means creators of AI systems.

“Deployers” means users of high-risk AI systems.

3. How Can Health Care Providers Ensure Compliance?

Although the Act sets out broad obligations, specific regulations are still forthcoming. The Colorado Attorney General has been tasked with developing rules to clarify compliance requirements. These regulations may address:

Risk management and compliance frameworks for AI systems.

Disclosure requirements for AI usage in consumer-facing applications.

Guidance on evaluating and mitigating algorithmic discrimination.

Health care providers should monitor developments as the regulatory framework evolves to ensure their AI-related practices align with state law.

4. How Could the Act Impact Health Care Operations?

The Act will require health care providers to specifically evaluate how they use AI across various operational areas, as the Act applies broadly to any AI system that influences decision-making. Given AI’s growing role in patient care, administrative functions, and financial operations, health care organizations should anticipate compliance obligations in multiple domains.

Billing and Collections

AI-driven billing and claims processing systems should be reviewed for potential biases that could disproportionately target specific patient demographics for debt collection efforts.

Deployers should ensure that their AI systems do not inadvertently create financial barriers for specific patient groups.

Scheduling and Patient Access

AI-powered scheduling assistants must be designed to accommodate patients with disabilities and limited English proficiency to prevent inadvertent discrimination and delayed access to care.

Providers must evaluate whether their AI tools prioritize certain patients over others in a way that could be deemed discriminatory.

Clinical Decision-Making and Diagnosis

AI diagnostic tools must be validated to ensure they do not produce biased outcomes for different demographic groups.

Health care organizations using AI-assisted triage tools should establish protocols for reviewing AI-generated recommendations to ensure fairness and accuracy.

5. If You Use AI, With What Do You Need to Comply?

The Act establishes different obligations for Developers and Deployers. Health care providers will in most cases be “Deployers” of AI systems as opposed to Developers. Health care providers will want to scrutinize contractual relationships with Developers for appropriate risk allocation and information sharing as providers implement AI tools into their operations.

Obligations of Developers (AI Vendors)

Disclosures to Deployers: Developers must provide transparency about the AI system’s training data, known biases, and intended use cases.

Risk Mitigation: Developers must document efforts to minimize algorithmic discrimination.

Impact Assessments: Developers must evaluate whether the AI system poses risks of discrimination before deploying it.

Obligations of Deployers (e.g., Health Care Providers)

Duty to Avoid Algorithmic Discrimination

Deployers of high-risk AI systems must use reasonable care to protect consumers from known or foreseeable risks of algorithmic discrimination.

Risk Management Policy & Program

Deployers must implement a risk management policy and program that identifies, documents, and mitigates risks of algorithmic discrimination.

The program must be iterative, regularly updated, and aligned with recognized AI risk management frameworks.

Requirements vary based on the deployer’s size, complexity, AI system scope, and data sensitivity.

Impact Assessments (Regular & Event-Triggered Reviews)

Timing Requirements: Deployers must conduct impact assessments:

Before deploying any high-risk AI system.

At least annually for each deployed high-risk AI system.

Within 90 days after any intentional and substantial modification to the AI system.

Required Content: Each impact assessment must include the AI system’s purpose, intended use, and benefits, an analysis of risks of algorithmic discrimination and mitigation measures, a description of data processed (inputs, outputs, and any customization data), performance metrics and system limitations, transparency measures (including consumer disclosures), and details on post-deployment monitoring and safeguards.

Special Requirements for Modifications: If an impact assessment is conducted due to a substantial modification, it must also include an explanation of how the AI system’s actual use aligned with or deviated from its originally intended purpose.
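The timing rules above can be sketched as a small date calculation. This is an illustrative sketch only, not legal advice: the class and function names are hypothetical, "at least annually" is assumed to mean 365 days after the last assessment, and the 90-day window is assumed to run in calendar days from the modification date.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

ANNUAL_REVIEW_DAYS = 365        # assumption: "at least annually" read as 365 days
MODIFICATION_WINDOW_DAYS = 90   # "within 90 days" after a substantial modification


@dataclass
class HighRiskSystem:
    """Hypothetical record a deployer might keep for each high-risk AI system."""
    name: str
    last_assessment: Optional[date] = None               # None means never assessed
    last_substantial_modification: Optional[date] = None


def next_assessment_due(system: HighRiskSystem, planned_deployment: date) -> date:
    """Return the latest date by which the next impact assessment should be done."""
    if system.last_assessment is None:
        # An assessment is required before the system is deployed.
        return planned_deployment
    due = system.last_assessment + timedelta(days=ANNUAL_REVIEW_DAYS)
    mod = system.last_substantial_modification
    if mod is not None and mod > system.last_assessment:
        # A modification made after the last assessment starts its own 90-day clock.
        due = min(due, mod + timedelta(days=MODIFICATION_WINDOW_DAYS))
    return due


triage_tool = HighRiskSystem(
    name="triage-assistant",
    last_assessment=date(2026, 3, 1),
    last_substantial_modification=date(2026, 6, 1),
)
print(next_assessment_due(triage_tool, planned_deployment=date(2026, 2, 1)))
```

A tracker like this only flags deadlines; whether a change counts as an "intentional and substantial modification" remains a legal judgment.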

Notifications & Transparency

Public Notice: Deployers must publish a statement on their website describing the high-risk AI systems they use and how they manage discrimination risks.

Notices to Patients/Employees: Before an AI system makes a consequential decision, individuals must be notified of its use.

Post-Decision Explanation: If AI contributes to an adverse decision, deployers must explain its role and allow the individual to appeal or correct inaccurate data.

Attorney General Notifications: If AI is found to have caused algorithmic discrimination, deployers must notify the Attorney General within 90 days.

Small deployers (those with fewer than 50 employees) who do not train AI models with their own data are exempt from many of these compliance obligations.

6. How Is the Act Enforced?

Only the Colorado Attorney General has enforcement authority.

A rebuttable presumption of compliance exists if Deployers follow recognized AI risk management frameworks.

There is no private right of action, meaning consumers cannot sue directly under the Act.

Health care providers should take early action to assess their AI usage and implement compliance measures.

Final Thoughts: What Health Care Providers Should Do Now

The Act represents a significant shift in AI regulation, particularly for health care providers who increasingly rely on AI-driven tools for patient care, administrative functions, and financial operations.

Although the Act aims to enhance transparency and mitigate algorithmic discrimination, it also imposes substantial compliance obligations. Health care organizations will have to assess their AI usage, implement risk management protocols, and maintain detailed documentation.

Given the evolving regulatory landscape, health care providers should take a proactive approach by auditing existing AI systems, training staff on compliance requirements, and establishing governance frameworks that align with best practices. As rulemaking by the Colorado Attorney General progresses, staying informed about additional regulatory requirements will be critical to ensuring compliance and avoiding enforcement risks.

Ultimately, the Act reflects a broader trend toward AI regulation that is likely to extend beyond state borders. Health care organizations that invest in AI governance now will not only mitigate legal risks but also maintain patient trust in an increasingly AI-driven industry.

If health care providers plan to integrate AI systems into their operations, conducting a thorough legal analysis is essential to determine whether the Act applies to their specific use cases. This should also include careful review and negotiation of service agreements with AI Developers to ensure that the provider has sufficient information and cooperation from the Developer to comply with the Act and to properly allocate risk between the parties.

Compliance is not a one-size-fits-all process. It requires careful evaluation of AI tools, their functions, and their potential to influence consequential decisions. Organizations should work closely with legal counsel to navigate the Act’s complexities, implement risk management frameworks, and establish protocols for ongoing compliance. As AI regulations evolve, proactive legal assessment will be crucial to ensuring that health care providers not only meet regulatory requirements but also uphold ethical and equitable AI practices that align with broader industry standards.

Finally, FDA’s Final Word on Unapproved Use Communications

On January 7, 2025, the U.S. Food and Drug Administration (“FDA” or “Agency”) released a long-awaited guidance titled, “Communications From Firms to Health Care Providers Regarding Scientific Information on Unapproved Uses of Approved/Cleared Medical Products: Questions and Answers” (the “Guidance”).[1] The Guidance is a finalized version of the draft guidance released in 2023 (the “Draft Guidance”), which we covered here, and updates FDA’s collection of guidances on the topic, including its 2014 draft guidance titled, “Distributing Scientific and Medical Publications on Unapproved New Uses — Recommended Practices” (the “2014 Draft Guidance”)[2] and its 2009 guidance titled, “Good Reprint Practices for the Distribution of Medical Journal Articles and Medical or Scientific Reference Publications on Unapproved New Uses of Approved Drugs and Approved or Cleared Medical Devices” (the “2009 Guidance”).[3]

The Guidance has been a long time coming, and the changes from the Draft Guidance appear to be a step toward a more permissive policy that facilitates critical scientific exchange within the life sciences industry. In the Guidance, FDA updates its framework for communicating scientific information about unapproved uses of approved or cleared medical products to healthcare providers (“HCPs”) and outlines its enforcement policy and recommendations to ensure that such communications are informative, truthful, and non-misleading.

Background on FDA’s Regulation of SIUU Communications

HCPs often prescribe FDA-cleared and/or -approved products for uses other than the intended use cleared and/or approved by FDA for the product (i.e., “off-label” uses) when medically appropriate for specific patients, especially when no proven alternative treatments exist – and under the Federal Food, Drug, and Cosmetic Act (the “FDCA”), they’re free to do so. However, the FDCA generally prohibits manufacturers of FDA-cleared and/or -approved drugs, devices, and biologics from promoting these products for any off-label use. FDA is therefore tasked with protecting public health by balancing HCPs’ need for information on unapproved uses against the general prohibition on promoting medical products for off-label uses.

In the 2009 Guidance and subsequent 2014 Draft Guidance, FDA established a narrow exception to its general prohibition against off-label promotion for scientific information – including scientific or medical reference texts, and/or clinical practice guidelines – that discusses off-label uses for FDA-cleared or -approved products provided by manufacturers to HCPs. In the 2009 Guidance and 2014 Draft Guidance, FDA outlined then-current parameters and best practices for providing scientific information to HCPs in a compliant manner under the FDCA.

The Draft Guidance further revised these previous guidances, most notably by expanding their scope and clarifying important definitions, including that of scientific information on unapproved uses of a medical product (“SIUU”).

The final Guidance largely reflects the best practices for disseminating SIUU established throughout the prior guidances, but makes important changes that ease certain requirements and clarify definitions and parameters of FDA’s policy.

Key Changes in Final Guidance

The recent Guidance largely mirrors the Draft Guidance, with some significant revisions and additions penned in response to important criticisms raised during the Draft Guidance’s comment period. For example, commenters encouraged FDA to limit the scope of its enforcement policy and refrain from suggesting that early stage clinical trial data is insufficient to support SIUU communications, alleging that these features of the guidance may have a chilling effect on the communication of truthful, scientific information from manufacturers to HCPs.

In response to these and other comments, FDA adjusted and more clearly defined the scope of the final Guidance. Perhaps more significantly, FDA also modified its stance on the use of clinical trial data in SIUU communications. In the Draft Guidance, FDA stated that SIUU communications should be “based on studies and analyses that are scientifically sound and provide clinically relevant information,” and cautioned against using early-stage clinical data in SIUU communications,[4] specifically citing instances in which Phase 3 results diverged from Phase 2 results, illustrating that Phase 2 data used in SIUU communications could be misleading or inaccurate. However, in the new Guidance, FDA loosened its standard, requiring only that SIUU communications be based on studies and analyses that are “scientifically sound” (omitting the clinical relevance requirement set forth in the Draft Guidance). Moreover, FDA clarified that data from a properly conducted, early-phase clinical study may be used to form a so-called “scientifically sound” source publication for an SIUU communication, removing its previous discourse on divergent results altogether. These revisions signal a marked change in tone for FDA that gives manufacturers greater freedom in sourcing supporting information for SIUU communications.

Additionally, FDA clarified that so-called “persuasive marketing techniques” – which are not allowed in SIUU communications – include emotional appeals unrelated to the scientific content, jingles, and promotional tag lines. We have previously noted FDA’s apparent grudge against dancing in advertising and promotion[5] and jingles appear to be FDA’s newest gripe with respect to more whimsical product promotion.

Further, FDA clarified that SIUU communications should be separated from promotional materials and excluded from specific media platforms. Notably, online platforms with character limitations could prevent a manufacturer from fully disclosing all necessary information in an SIUU communication. However, despite these SIUU-specific limitations, FDA identifies some of the same themes as it has in guidances for other types of drug and device promotion, such as sufficient disclosure and balancing of competing interests.

An important addition to the final Guidance is communicated through a footnote and essentially allows for the sharing of SIUU communications with HCPs by anyone with specialized training.[6] Significantly, sharing SIUU communications is not limited to someone in scientific or medical affairs – any representative of a firm may share them so long as they have the requisite training in providing truthful, non-misleading scientific information about unapproved uses of the firm’s approved medical products and training in handling potential questions that might arise from the information shared, including directing HCPs to those best qualified to respond.

Finally, in the new final Guidance, FDA included a glossary of defined terms, as well as more detailed examples of compliant SIUU communications, including reprints, clinical practice guidelines, reference texts, and manufacturer-generated presentations.

Takeaways

While both the Draft Guidance and the new final Guidance aim to guide manufacturers in their communications with HCPs regarding off-label uses of FDA-cleared or -approved medical products, the new final Guidance establishes a more permissive approach to SIUU communications, addressing the First Amendment concerns about chilling speech that were raised in numerous comments from industry stakeholders.

Interestingly, FDA seems to have significantly backed off on the hardline stances it had previously taken regarding the types of data it deems sufficient to support SIUU communications. By permitting, in certain circumstances, the use of “early-phase clinical data” and/or “preliminary scientific data,” and eliminating the “clinically relevant” requirement, FDA is being more permissive with the type of support manufacturers may use to back their communications with HCPs. Here, FDA is not requiring manufacturers to produce clinical findings that live up to the standard needed to, for example, be granted approval to market a drug; rather, FDA has whittled its expectation down to the “scientifically sound” standard. Based on the changes between the Draft and final Guidance, manufacturers may consider expanding their SIUU communications policies to include statements supported by early-stage clinical trial data, even if the data is not “clinically relevant,” as long as it is “scientifically sound.”

Furthermore, FDA has opened up to firms the option of having any personnel share these SIUU communications with HCPs, requiring only that those who share the information have specialized training. These expanded options comport with the overall more liberal tone of the final Guidance, but firms should still take care to ensure that those sharing SIUU communications are properly trained in providing truthful, non-misleading information and in handling questions that might arise about the information. What this training looks like will largely be driven by internal discussions between legal, compliance, and business stakeholders.

However, FDA hasn’t let the reins go completely. As reflected in the new Guidance, FDA continues to plainly restrict certain SIUU communications, such as the use of emotional appeal language, and – of course – SIUU communications must still be informative, truthful, and not misleading. Ultimately, manufacturers should continue to approach SIUU communications with caution, taking care to ensure that the source publications in SIUU communications are scientifically sound and avoiding the use of crafty, emotionally-driven marketing techniques.

FOOTNOTES

[1] Guidance, Communications From Firms to Health Care Providers Regarding Scientific Information on Unapproved Uses of Approved/Cleared Medical Products: Questions and Answers | FDA, FDA (Jan. 2025).

[2] Draft Guidance, Distributing Scientific and Medical Publications on Risk Information for Approved Prescription Drugs and Biological Products—Recommended Practices, FDA (June 2014).

[3] Guidance, Good Reprint Practices for the Distribution of Medical Journal Articles and Medical or Scientific Reference Publications on Unapproved New Uses of Approved Drugs and Approved or Cleared Medical Devices, FDA (Jan. 2009).

[4] See Draft Guidance, supra FN 2, at FN 27.

[5] Key Takeaways From FDA’s Latest Social Media Warnings, Law360 (Dec. 2024).

[6] See Guidance, supra FN 1, at FN 48.

McDermott+ Check-Up: February 7, 2025

THIS WEEK’S DOSE

Key Nominations Move Forward. Having advanced from the Senate Finance Committee this week, Robert F. Kennedy Jr.’s nomination for secretary of Health & Human Services will now go to the Senate floor.

Pathway on Reconciliation Is Uncertain. While the House Budget Committee was expected to start the reconciliation process this week with a markup of a budget resolution, that did not happen.

House Energy & Commerce Health Subcommittee Holds Hearing on Drug Threats. Members examined solutions to the opioid crisis.

Trump Issues EO Modifying the Regulatory Process. The executive order (EO) calls for fewer regulations and rescinds changes to the regulatory cost-benefit analysis.

Administration Modifies Public Health Data. The Centers for Disease Control & Prevention scrubbed its websites of mentions of gender identity and diversity, equity, and inclusion.

Legal Challenges Continue in Response to Trump Administration Actions. Federal judges have taken additional actions to block efforts to freeze certain federal funding, and a lawsuit was filed in response to a Trump EO on care for transgender youth.

CONGRESS

Key Nominations Move Forward. The Senate Finance Committee advanced Robert F. Kennedy (RFK) Jr.’s nomination to lead the US Department of Health & Human Services (HHS) in a 14 – 13 vote along party lines. The nomination now moves to the Senate floor. If every Democrat opposes the nomination in the floor vote, RFK Jr. could lose up to three Republican votes and still be confirmed as HHS secretary.
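The floor-vote arithmetic in the last sentence can be checked with a short sketch. It assumes a 100-seat Senate with 53 Republicans and 47 members voting with the Democrats, every senator voting, and the vice president breaking a tie in the nominee's favor; those figures and the function name are illustrative assumptions rather than details taken from the paragraph above.

```python
def max_defections(majority_seats: int, total_seats: int = 100,
                   tie_breaker_favors_nominee: bool = True) -> int:
    """Largest number of majority-party 'no' votes that still confirms the nominee.

    Returns -1 if the nominee cannot be confirmed even with no defections.
    """
    minority_seats = total_seats - majority_seats
    best = -1
    for defections in range(majority_seats + 1):
        yes_votes = majority_seats - defections
        no_votes = minority_seats + defections
        confirmed = yes_votes > no_votes or (
            yes_votes == no_votes and tie_breaker_favors_nominee
        )
        if confirmed:
            best = defections
    return best


print(max_defections(53))
```

With three defections the vote is tied at 50 to 50, which is why the tie-breaking assumption matters to the result.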

The Senate confirmed Russell Vought as director of the Office of Management & Budget (OMB) by a 53 – 47 vote. The Senate voted to confirm Doug Collins to lead the US Department of Veterans Affairs in a vote of 77 – 23. Dr. Mehmet Oz, President Trump’s nominee to lead the Centers for Medicare & Medicaid Services (CMS), began meeting with senators on Capitol Hill this week, the first step in his nomination process. He has not spoken publicly on Medicaid much before, but he was quoted this week as saying, “We have to take care of the most vulnerable among us. It’s a social calling for all of us . . . that funding freeze was not designed to affect Medicaid at all.”

Pathway on Reconciliation Is Uncertain. While the House was expected to start the reconciliation process with a markup of a budget resolution in the Budget Committee this week, that did not happen. House Republicans continue to negotiate with one another about the level of spending cuts and held a meeting at the White House with President Trump, after which they announced they were moving closer to an agreement. Because this will be a partisan process, Republicans need to be largely unified in order to proceed. During this uncertainty, Senate Budget Committee Chairman Lindsey Graham (R-SC) made it clear that the Senate is prepared to move forward with its own budget resolution, which would begin a two-bill approach to reconciliation, with an immigration, energy, and defense bill completed first and a tax bill tackled later in the year. Senate Republicans are set to meet with President Trump Friday night at Mar-a-Lago to promote this approach. The budget resolution is a necessary first step in the reconciliation process, and healthcare programs are expected to be on the table for spending cuts. For an overview on the budget reconciliation process and its impact on health policies, read our +Insight.

House Energy & Commerce Health Subcommittee Holds Hearing on Drug Threats. The hearing discussed the importance of expanding access to addiction and mental health services and ensuring wide availability of Narcan. Republican members primarily focused on the importance of border security in combatting the opioid crisis, and Democratic members emphasized the funding freeze’s adverse impacts on medical research and the critical roles that Medicaid and federally qualified health centers play in enabling access to care for substance use disorders.

ADMINISTRATION

Trump Issues EO Modifying the Regulatory Process. This EO requires that whenever an agency promulgates a new rule, regulation, or guidance, it must identify at least 10 existing rules, regulations, or guidance documents to be repealed. The EO tasks the director of OMB with ensuring standardized measurement and estimation of regulatory costs, and it requires that, for fiscal year 2025, the total incremental cost of all new regulations, including repealed regulations, be significantly less than zero. It is unclear what this 10 – 1 ratio means in practice or how it will be implemented.
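One possible reading of the two conditions described above can be expressed as a simple check. This is a sketch under stated assumptions, not a description of how OMB will implement the EO: the class, field, and function names are hypothetical, the ratio is assumed to apply per new rule, and because "significantly less than zero" is not defined, the sketch tests only whether the net cost is negative.

```python
from dataclasses import dataclass

REPEALS_PER_NEW_RULE = 10  # "at least 10 existing rules" identified per new rule


@dataclass
class RulemakingPlan:
    """Hypothetical summary of an agency's planned actions for a fiscal year."""
    new_rules: int
    identified_repeals: int
    cost_of_new_rules: float      # incremental cost of new rules, in dollars
    savings_from_repeals: float   # cost removed by repeals, as a positive number


def meets_repeal_ratio(plan: RulemakingPlan) -> bool:
    """True if enough existing rules are identified for repeal."""
    return plan.identified_repeals >= REPEALS_PER_NEW_RULE * plan.new_rules


def net_incremental_cost(plan: RulemakingPlan) -> float:
    """Cost of new rules minus savings from repealed rules."""
    return plan.cost_of_new_rules - plan.savings_from_repeals


def net_cost_is_negative(plan: RulemakingPlan) -> bool:
    # Assumption: any negative value passes; the EO's "significantly" is undefined.
    return net_incremental_cost(plan) < 0


plan = RulemakingPlan(new_rules=2, identified_repeals=20,
                      cost_of_new_rules=5_000_000.0,
                      savings_from_repeals=8_000_000.0)
print(meets_repeal_ratio(plan), net_incremental_cost(plan), net_cost_is_negative(plan))
```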

Administration Modifies Public Health Data. To comply with President Trump’s EOs related to gender identity and diversity, equity, and inclusion, the Centers for Disease Control & Prevention first removed, then uploaded a modified version of, public information and data related to HIV and health information for teens and LGBTQ+ people on both its data directory and main webpage. In response, a medical advocacy group has sued multiple health agencies, stating that the removal of data deprives physicians and researchers of access to necessary information.

COURTS

Legal Challenges Continue in Response to Trump Administration Actions. Following legal challenges last week to the Trump administration’s now-rescinded OMB memo directing agencies to freeze certain federal funding, more federal judges have acted to halt the federal freeze. On January 31, a federal judge in Rhode Island granted a temporary restraining order to block the freeze, following a lawsuit from Democratic attorneys general from 22 states and the District of Columbia. On February 3, a federal judge in the District of Columbia issued a similar injunction in a lawsuit filed by several coalitions of nonprofits.

PFLAG National, GLMA, and transgender individuals and their families filed a federal lawsuit against the Trump administration’s EO “Protecting Children from Chemical and Surgical Mutilation.” The EO states that federal agencies shall not “fund, sponsor, promote, assist, or support the so-called ‘transition’ of a child from one sex to another.” Plaintiffs in the lawsuit, filed in the US District Court for the District of Maryland, argue that the EO will create harm by denying access to physician-prescribed, medically recommended care. Read the press release here.

In response to a lawsuit brought by unions representing federal workers, a federal judge in Massachusetts paused the deadline for the administration’s buyout program for federal workers. There is another hearing set for next week.

QUICK HITS

HHS OCR Announces Action on Anti-Semitism. The HHS Office for Civil Rights (OCR) will initiate compliance reviews related to reported incidents of anti-Semitism at four medical schools.

House Democratic Leaders Send Letter to GAO on Medicare Drug Price Negotiation. In the letter, Energy & Commerce Ranking Member Frank Pallone (D-NJ), Ways & Means Ranking Member Richard Neal (D-MA), and Education & Workforce Ranking Member Bobby Scott (D-VA) urged the US Government Accountability Office (GAO) to monitor the Medicare Drug Price Negotiation Program to ensure the Trump administration complies with the Inflation Reduction Act, which directed and authorized the GAO to conduct oversight of the program.

CMS Releases Statement on DOGE Collaboration. The short statement notes that two senior CMS officials are working with Elon Musk’s Department of Government Efficiency (DOGE).

NEXT WEEK’S DIAGNOSIS

Both chambers will be in session next week. The House Veterans’ Affairs Health Subcommittee will hold another hearing on community care, the House Ways & Means Health Subcommittee will hold a hearing on modernizing healthcare, and the Senate Special Committee on Aging will hold a hearing on optimizing longevity. As noted, the House Budget Committee may mark up a budget resolution that would start the budget reconciliation process, or the Senate could move first. The full Senate is on track to approve RFK Jr.’s nomination, and we expect the administration to continue taking executive action related to healthcare.

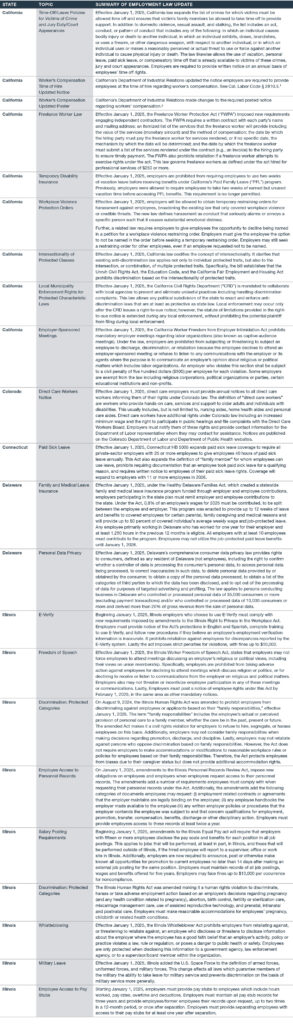

2025 Employment Law Updates

Many state and local government employment laws went into effect January 1, 2025. Here is a non-exhaustive list of 2025 employment law updates.

The Worker’s Compensation Time of Hire Notice can be found here.

The Worker’s Compensation Updated Poster can be found here.

Employers should also be aware that numerous hourly minimum wage rate increases are set to take effect in various jurisdictions on January 1, 2025, as previously detailed here.

Again, this is a non-exhaustive list of employment law updates. Contact your Polsinelli attorney if you have any questions or need assistance regarding employment law compliance in 2025, as well as to get up to speed on the latest employment law updates.

The BR Privacy & Security Download: February 2025

STATE & LOCAL LAWS & REGULATIONS

New York Legislature Passes Comprehensive Health Privacy Law: The New York state legislature passed SB-929 (the “Bill”), providing for the protection of health information. The Bill broadly defines “regulated health information” as “any information that is reasonably linkable to an individual, or a device, and is collected or processed in connection with the physical or mental health of an individual.” Regulated health information includes location and payment information, as well as inferences derived from an individual’s physical or mental health. The term “individual” is not defined. Accordingly, the Bill contains no terms restricting its application to consumers acting in an individual or household context. The Bill would apply to regulated entities, which are entities that (1) are located in New York and control the processing of regulated health information, or (2) control the processing of regulated health information of New York residents or individuals physically present in New York. Among other things, the Bill would restrict regulated entities to processing regulated health information only with a valid authorization, or when strictly necessary for certain specified activities. The Bill also provides for individual rights and requires the implementation of reasonable administrative, physical, and technical safeguards to protect regulated health information. The Bill would take effect one year after being signed into law and currently awaits New York Governor Kathy Hochul’s signature.

New York Data Breach Notification Law Updated: Two bills, SO2659 and SO2376, that amended the state’s data breach notification law were signed into law by New York Governor Kathy Hochul. The bills change the timing requirement in which notice must be provided to New York residents, add data elements to the definition of “private information,” and add the New York Department of Financial Services to the list of regulators that must be notified. Previously, New York’s data breach notification statute did not have a hard deadline within which notice must be provided. The amendments now require affected individuals to be notified no later than 30 days after discovery of the breach, except for delays arising from the legitimate needs of law enforcement. Additionally, as of March 25, 2025, “private information” subject to the law’s notification requirements will include medical information and health insurance information.

California AG Issues Legal Advisory on Application of California Law to AI: California’s Attorney General has issued legal advisories to clarify that existing state laws apply to AI development and use, emphasizing that California is not an AI “wild west.” These advisories cover consumer protection, civil rights, competition, data privacy, and election misinformation. AI systems, while beneficial, present risks such as bias, discrimination, and the spread of disinformation. Therefore, entities that develop or use AI must comply with all state, federal, and local laws. The advisories highlight key laws, including the Unfair Competition Law and the California Consumer Privacy Act. The advisories also highlight new laws effective on January 1, 2025, which include disclosure requirements for businesses, restrictions on the unauthorized use of likeness, and regulations for AI use in elections and healthcare. These advisories stress the importance of transparency and compliance to prevent harm from AI.

New Jersey AG Publishes Guidance on Algorithmic Discrimination: On January 9, 2025, New Jersey’s Attorney General and Division on Civil Rights announced a new civil rights and technology initiative to address the risks of discrimination and bias-based harassment in AI and other advanced technologies. The initiative includes the publication of a Guidance Document, which addresses the applicability of New Jersey’s Law Against Discrimination (“LAD”) to automated decision-making tools and technologies. It focuses on the threats posed by automated decision-making technologies in the housing, employment, healthcare, and financial services contexts, emphasizing that the LAD applies to discrimination regardless of the technology at issue. Also included in the announcement is the launch of a new Civil Rights Innovation Lab, which “will aim to leverage technology responsibly to advance [the Division’s] mission to prevent, address, and remedy discrimination.” The Lab will partner with experts and relevant industry stakeholders to identify and develop technology to enhance the Division’s enforcement, outreach, and public education work, and will develop protocols to facilitate the responsible deployment of AI and related decision-making technology. This initiative, along with the recently effective New Jersey Data Privacy Law, shows a significantly increased focus from the New Jersey Attorney General on issues relating to data privacy and automated decision-making technologies.

New Jersey Publishes Comprehensive Privacy Law FAQs: The New Jersey Division of Consumer Affairs Cyber Fraud Unit (“Division”) published FAQs that provide a general summary of the New Jersey Data Privacy Law (“NJDPL”), including its scope, key definitions, consumer rights, and enforcement. The NJDPL took effect on January 15, 2025, and the FAQs state that controllers subject to the NJDPL are expected to comply by such date. However, the FAQs also emphasize that until July 1, 2026, the Division will provide notice and a 30-day cure period for potential violations. The FAQs also suggest that the Division may adopt a stricter approach to minors’ privacy. While the text of the NJDPL requires consent for processing the personal data of consumers between the ages of 13 and 16 for purposes of targeted advertising, sale, and profiling, the FAQs state that when a controller knows or willfully disregards that a consumer is between the ages of 13 and 16, consent is required to process their personal data more generally.

CPPA Extends Formal Comment Period for Automated Decision-Making Technology Regulations: The California Privacy Protection Agency (“CPPA”) extended the public comment period for its proposed regulations on cybersecurity audits, risk assessments, automated decision-making technology (“ADMT”), and insurance companies under the California Privacy Rights Act. The public comment period opened on November 22, 2024, and was set to close on January 14, 2025. However, due to the wildfires in Southern California, the public comment period was extended to February 19, 2025. The CPPA will also be holding a public hearing on that date for interested parties to present oral and written statements or arguments regarding the proposed regulations.

Oregon DOJ Publishes Toolkit for Consumer Privacy Rights: The Oregon Department of Justice announced the release of a new toolkit designed to help Oregonians protect their online information. The toolkit is designed to help families understand their rights under the Oregon Consumer Privacy Act. The Oregon DOJ reminded consumers how to submit complaints when businesses are not responsive to privacy rights requests. The Oregon DOJ also stated it has received 118 complaints since the Oregon Consumer Privacy Act took effect last July and had sent notices of violation to businesses that have been identified as non-compliant.

California, Colorado, and Connecticut AGs Remind Consumers of Opt-Out Rights: California Attorney General Rob Bonta published a press release reminding residents of their right to opt out of the sale and sharing of their personal information. The California Attorney General also cited the robust privacy protections of Colorado and Connecticut laws that provide for similar opt-out protections. The press release urged consumers to familiarize themselves with the Global Privacy Control (“GPC”), a browser setting or extension that automatically signals to businesses that they should not sell or share a consumer’s personal information, including for targeted advertising. The Attorney General also provided instructions for the use of the GPC and for exercising opt-outs by visiting the websites of individual businesses.
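For businesses on the receiving end of the signal, detection is simple in principle. Under the GPC specification, a participating browser attaches a `Sec-GPC: 1` header to its HTTP requests. The Python sketch below is illustrative only: the function name and the plain dictionary of headers are assumptions made for the example and do not come from the press release, and whether a given request must be honored as an opt-out is a legal question the code does not answer.

```python
def has_gpc_signal(headers: dict[str, str]) -> bool:
    """Return True if the request headers carry a GPC opt-out signal.

    `headers` is a hypothetical mapping of HTTP header names to values,
    standing in for whatever request object a real web framework provides.
    """
    # HTTP header names are case-insensitive, so normalize before the lookup.
    normalized = {name.lower(): value.strip() for name, value in headers.items()}
    # The GPC specification uses the value "1" to express the preference.
    return normalized.get("sec-gpc") == "1"


if __name__ == "__main__":
    print(has_gpc_signal({"Sec-GPC": "1"}))          # signal present
    print(has_gpc_signal({"User-Agent": "example"}))  # signal absent
```

A site that detects the signal would then route the visitor into the same opt-out handling it applies to a request submitted through its own website.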

FEDERAL LAWS & REGULATIONS

FTC Finalizes Updates to COPPA Rule: The FTC announced the finalization of updates to the Children’s Online Privacy Protection Rule (the “Rule”). The updated Rule makes a number of changes, including requiring opt-in consent to engage in targeted advertising to children and to disclose children’s personal information to third parties. The Rule also adds biometric identifiers to the definition of personal information and prohibits operators from retaining children’s personal information for longer than necessary for the specific documented business purposes for which it was collected. Operators must maintain a written data retention policy that documents the business purpose for data retention and the retention period for data. The Commission voted 5-0 to adopt the Rule, but new FTC Chair Andrew Ferguson filed a separate statement describing “serious problems” with the rule. Ferguson specifically stated that it was unclear whether an entirely new consent would be required if an operator added a new third party with whom personal information would be shared, potentially creating a significant burden for businesses. The Rule will be effective 60 days after its publication in the Federal Register.

Trump Rescinds Biden’s Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence: President Donald Trump took action to rescind former President Biden’s Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (“AI EO”). According to a Biden administration statement released in October, many action items from the AI EO have already been completed. Recommendations, reports, and opportunities for research that were completed prior to revocation of the AI EO may continue in place unless replaced by additional federal agency action. It remains unclear whether the Trump Administration will issue its own executive orders relating to AI.

U.S. Justice Department Issues Final Rule on Transfer of Sensitive Personal Data to Foreign Adversaries: The U.S. Justice Department issued final regulations to implement a presidential Executive Order regarding access to bulk sensitive personal data of U.S. citizens by foreign adversaries. The regulations restrict transfers involving designated countries of concern – China, Cuba, Iran, North Korea, Russia, and Venezuela. At a high level, transfers are restricted if they could result in bulk sensitive personal data access by a country of concern or a “covered person,” which is an entity that is majority-owned by a country of concern, organized under the laws of a country of concern, has its principal place of business in a country of concern, or is an individual whose primary residence is in a country of concern. Data covered by the regulation includes precise geolocation data, biometric identifiers, genetic data, health data, financial data, government-issued identification numbers, and certain other identifiers, including device or hardware-based identifiers, advertising identifiers, and demographic or contact data.

First Complaint Filed Under Protecting Americans’ Data from Foreign Adversaries Act: The Electronic Privacy Information Center (“EPIC”) and the Irish Council for Civil Liberties (“ICCL”) Enforce Unit filed the first-ever complaint under the Protecting Americans’ Data from Foreign Adversaries Act (“PADFAA”). PADFAA makes it unlawful for a data broker to sell, license, rent, trade, transfer, release, disclose, or otherwise make available specified personally identifiable sensitive data of individuals residing in the United States to North Korea, China, Russia, Iran, or an entity controlled by one of those countries. The complaint alleges that Google’s real-time bidding system data includes personally identifiable sensitive data, that Google executives were aware that data from its real-time bidding system may have been resold, and that Google’s public list of certified companies that receive real-time bidding bid request data includes multiple companies based in foreign adversary countries.

FDA Issues Draft Guidance for AI-Enabled Device Software Functions: The U.S. Food and Drug Administration (“FDA”) published its January 2025 Draft Guidance for Industry and FDA Staff regarding AI-enabled device software functionality. The Draft provides recommendations regarding the contents of marketing submissions for AI-enabled medical devices, including documentation and information that will support the FDA’s evaluation of their safety and effectiveness. The Draft Guidance is designed to reflect a “comprehensive approach” to the management of devices through their total product life cycle and includes recommendations for the design, development, and implementation of AI-enabled devices. The FDA is accepting comments on the Draft Guidance, which may be submitted online until April 7, 2025.

Industry Coalition Pushes for Unified National Data Privacy Law: A coalition of over thirty industry groups, including the U.S. Chamber of Commerce, sent a letter to Congress urging it to enact a comprehensive national data privacy law. The letter highlights the urgent need for a cohesive federal standard to replace the fragmented state laws that complicate compliance and stifle competition. The letter advocates for legislation based on principles to empower startups and small businesses by reducing costs and improving consumer access to services. The letter supports granting consumers the right to understand, correct, and delete their data, and to opt out of targeted advertising, while emphasizing transparency by requiring companies to disclose data practices and secure consent for processing sensitive information. It also focuses on the principles of limiting data collection to essential purposes and implementing robust security measures. While the principles aim to override strong state laws like that in California, the proposal notably excludes data broker regulation, a previous point of contention. The coalition cautions against legislation that could lead to frivolous litigation, advocating for balanced enforcement and collaborative compliance. By adhering to these principles, the industry groups seek to ensure legal certainty and promote responsible data use, benefiting both businesses and consumers.

Cyber Trust Mark Unveiled: The White House launched a labeling scheme for internet-of-things devices designed to inform consumers when devices meet certain government-determined cybersecurity standards. The program has been in development for several months and involves collaboration between the White House, the National Institute of Standards and Technology, and the Federal Communications Commission. UL Solutions, a global safety and testing company headquartered in Illinois, has been selected as the lead administrator of the program along with 10 other firms as deputy administrators. With the main goal of helping consumers make more cyber-secure choices when purchasing products, the White House hopes to have products with the new cyber trust mark hit shelves before the end of 2025.

U.S. LITIGATION

Texas Attorney General Sues Insurance Company for Unlawful Collection and Sharing of Driving Data: Texas Attorney General Ken Paxton filed a lawsuit against Allstate and its data analytics subsidiary, Arity. The lawsuit alleges that Arity paid app developers to incorporate its software development kit that tracked location data from over 45 million consumers in the U.S. According to the lawsuit, Arity then shared that data with Allstate and other insurers, who would use the data to justify increasing car insurance premiums. The sale of precise geolocation data of Texans violated the Texas Data Privacy and Security Act (“TDPSA”) according to the Texas Attorney General. The TDPSA requires the companies to provide notice and obtain informed consent to use the sensitive data of Texas residents, which includes precise geolocation data. The Texas Attorney General sued General Motors in August of 2024, alleging similar practices relating to the collection and sale of driver data.

Eleventh Circuit Overturns FCC’s One-to-One Consent Rule, Upholds Broader Telemarketing Practices: In Insurance Marketing Coalition, Ltd. v. Federal Communications Commission, No. 24-10277, 2025 WL 289152 (11th Cir. Jan. 24, 2025), the Eleventh Circuit vacated the FCC’s one-to-one consent rule under the Telephone Consumer Protection Act (“TCPA”). The court found that the rule exceeded the FCC’s authority and conflicted with the statutory meaning of “prior express consent.” The court deemed the rule’s requirement of separate consent for each seller and each topic-related call unnecessary. This decision allows businesses to continue using broader consent practices, maintaining shared consent agreements. The ruling emphasizes that consent should align with common-law principles rather than be restricted to a single entity. While the FCC’s next steps remain uncertain, the decision reduces compliance burdens and may challenge other TCPA regulations.

California Judge Blocks Enforcement of Social Media Addiction Law: The California Protecting Our Kids from Social Media Addiction Act (the “Act”) has been temporarily blocked. The Act was set to take effect on January 1, 2025. The law aims to prevent social media platforms from using algorithms to provide addictive content to children. Judge Edward J. Davila initially declined to block key parts of the law but agreed to pause enforcement until February 1, 2025, to allow the Ninth Circuit to review the case. NetChoice, a tech trade group, is challenging the law on First Amendment grounds. NetChoice argues that restricting minors’ access to personalized feeds violates the First Amendment. The group has appealed to the Ninth Circuit and is seeking an injunction to prevent the law from taking effect. Judge Davila’s decision recognized the “novel, difficult, and important” constitutional issues presented by the case. The law includes provisions to restrict minors’ access to personalized feeds, limit their ability to view likes and other feedback, and restrict third-party interaction.

U.S. ENFORCEMENT

FTC Settles Enforcement Action Against General Motors for Sharing Geolocation and Driving Behavior Data Without Consent: The Federal Trade Commission (“FTC”) announced a proposed order to settle FTC allegations against General Motors that it collected, used, and sold drivers’ precise geolocation data and driving behavior information from millions of vehicles without adequately notifying consumers and obtaining their affirmative consent. The FTC specifically alleged General Motors used a misleading enrollment process to get consumers to sign up for its OnStar-connected vehicle service and Smart Driver feature without proper notice or consent during that process. The information was then sold to third parties, including consumer reporting agencies, according to the FTC. As part of the settlement, General Motors will be prohibited from disclosing driver data to consumer reporting agencies, required to allow consumers to obtain and delete their data, required to obtain consent prior to collection, and required to allow consumers to limit data collected from their vehicles.

FTC Releases Proposed Order Against GoDaddy for Alleged Data Security Failures: The Federal Trade Commission (“FTC”) announced it had reached a proposed settlement in its action against GoDaddy Inc. (“GoDaddy”) for failing to implement reasonable and appropriate security measures, which resulted in several major data breaches between 2019 and 2022. According to the FTC’s complaint, GoDaddy misled customers about its data security practices through claims on its websites and in email and social media ads, and by representing it was in compliance with the EU-U.S. and Swiss-U.S. Privacy Shield Frameworks. However, the FTC found that GoDaddy failed to inventory and manage assets and software updates, assess risks to its shared hosting services, adequately log and monitor security-related events, and segment its shared hosting from less secure environments. The FTC’s proposed order against GoDaddy prohibits GoDaddy from misleading its customers about its security practices and requires GoDaddy to implement a comprehensive information security program. GoDaddy must also hire a third-party assessor to conduct biennial reviews of its information security program.

CPPA Reaches Settlements with Additional Data Brokers: Following its announcement of a public investigative sweep of data broker registration compliance, the CPPA has settled with additional data brokers PayDae, Inc. d/b/a Infillion (“Infillion”), The Data Group, LLC (“The Data Group”), and Key Marketing Advantage, LLC (“KMA”) for failing to register as data brokers and pay an annual fee as required by California’s Delete Act. Infillion will pay $54,200 for failing to register between February 1, 2024, and November 4, 2024. The Data Group will pay $46,600 for failing to register between February 1, 2024, and September 20, 2024. KMA will pay $55,800 for failing to register between February 1, 2024, and November 5, 2024. In addition to the fines, the companies have agreed to injunctive terms. The Delete Act imposes fines of $200 per day for failing to register by the deadline.
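The $200-per-day figure can be checked against the reported periods with simple date arithmetic. The Python sketch below is illustrative, not the CPPA’s method: the function name is hypothetical, and counting both the first and last day of each period is an assumption. Under that assumption, the amounts for The Data Group and KMA match the reported settlements, while Infillion’s period computes to $55,600 rather than the reported $54,200. The source does not explain the difference, and a negotiated settlement need not equal the per-day total.

```python
from datetime import date

DAILY_FINE_USD = 200  # per-day amount stated in the summary above


def accrued_fine(first_day: date, last_day: date) -> int:
    """Per-day fine over an unregistered period, counting both endpoints.

    Counting both endpoints is an assumption made for this sketch.
    """
    days = (last_day - first_day).days + 1
    return days * DAILY_FINE_USD


# Unregistered periods as reported in the summary above.
periods = {
    "Infillion": (date(2024, 2, 1), date(2024, 11, 4)),
    "The Data Group": (date(2024, 2, 1), date(2024, 9, 20)),
    "KMA": (date(2024, 2, 1), date(2024, 11, 5)),
}

if __name__ == "__main__":
    for name, (start, end) in periods.items():
        print(f"{name}: ${accrued_fine(start, end):,}")
```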

Mortgage Company Fined by State Financial Regulators for Cybersecurity Breach: Bayview Asset Management LLC and three affiliates (collectively, “Bayview”) agreed to pay a $20 million fine and improve their cybersecurity programs to settle allegations from 53 state financial regulators. The Conference of State Bank Supervisors (“CSBS”) alleged that the mortgage companies had deficient cybersecurity practices and did not fully cooperate with regulators after a 2021 data breach. The data breach compromised data for 5.8 million customers. The coordinated enforcement action was led by financial regulators in California, Maryland, North Carolina, and Washington State. The regulators said the companies’ information technology and cybersecurity practices did not meet federal or state requirements. The firms also delayed the supervisory process by withholding requested information and providing redacted documents in the initial stages of a post-breach exam. The companies also agreed to undergo independent assessments and provide three years of additional reporting to the state regulators.

SEC Reaches Settlement over Misleading Cybersecurity Disclosures: The SEC announced it has settled charges with Ashford Inc., an asset management firm, over misleading disclosures related to a cybersecurity incident. This enforcement action stemmed from a ransomware attack in September 2023, compromising over 12 terabytes of sensitive hotel customer data, including driver’s licenses and credit card numbers. Despite the breach, Ashford falsely reported in its November 2023 filings that no customer information was exposed. The SEC alleged negligence in Ashford’s disclosures, citing violations of the Securities Act of 1933 and the Exchange Act of 1934. Without admitting or denying the allegations, Ashford agreed to a $115,231 penalty and an injunction. This case highlights the critical importance of accurate cybersecurity disclosures and demonstrates the SEC’s commitment to ensuring transparency and accountability in corporate reporting.

FTC Finalizes Data Breach-Related Settlement with Marriott: The FTC has finalized its order against Marriott International, Inc. (“Marriott”) and its subsidiary Starwood Hotels & Resorts Worldwide LLC (“Starwood”). As previously reported, the FTC entered into a settlement with Marriott and Starwood for three data breaches the companies experienced between 2014 and 2020, which collectively impacted more than 344 million guest records. Under the finalized order, Marriott and Starwood are required to establish a comprehensive information security program, implement a policy to retain personal information only for as long as reasonably necessary, and establish a link on their website for U.S. customers to request deletion of their personal information associated with their email address or loyalty rewards account number. The order also requires Marriott to review loyalty rewards accounts upon customer request and restore stolen loyalty points. The companies are further prohibited from misrepresenting their information collection practices and data security measures.

New York Attorney General Settles with Auto Insurance Company over Data Breach: The New York Attorney General settled with automobile insurance company Noblr over a data breach the company experienced in January 2021. Noblr’s online insurance quoting tool exposed full, plaintext driver’s license numbers, including on the backend of its website and in PDFs generated when a purchase was made. The data breach impacted the personal information of more than 80,000 New Yorkers. The data breach was part of an industry-wide campaign to steal personal information (e.g., driver’s license numbers and dates of birth) from online automobile insurance quoting applications to be used to file fraudulent unemployment claims during the COVID-19 pandemic. As part of its settlement, Noblr must pay the New York Attorney General $500,000 in penalties and strengthen its data security measures such as by enhancing its web application defenses and maintaining a comprehensive information security program, data inventory, access controls (e.g., authentication procedures), and logging and monitoring systems.

FTC Alleges Video Game Maker Violated COPPA and Engaged in Deceptive Marketing Practices: The Federal Trade Commission (“FTC”) has taken action against Cognosphere Pte. Ltd and its subsidiary Cognosphere LLC, also known as HoYoverse, the developer of the game Genshin Impact (“HoYoverse”). The FTC alleges that HoYoverse violated the Children’s Online Privacy Protection Act (“COPPA”) and engaged in deceptive marketing practices. Specifically, the company is accused of unfairly marketing loot boxes to children and misleading players about the odds of winning prizes and the true cost of in-game transactions. To settle these charges, HoYoverse will pay a $20 million fine and is prohibited from allowing children under 16 to make in-game purchases without parental consent. Additionally, the company must provide an option to purchase loot boxes directly with real money and disclose loot box odds and exchange rates. HoYoverse is also required to delete personal information collected from children under 13 without parental consent. The FTC’s actions aim to protect consumers, especially children and teens, from deceptive practices related to in-game purchases.

OCR Finalizes Several Settlements for HIPAA Violations: Prior to the inauguration of President Trump, the U.S. Department of Health and Human Services Office for Civil Rights (“OCR”) brought enforcement actions against four entities, USR Holdings, LLC (“USR”), Elgon Information Systems (“Elgon”), Solara Medical Supplies, LLC (“Solara”) and Northeast Surgical Group, P.C. (“NESG”), for potential violations of the Health Insurance Portability and Accountability Act’s (“HIPAA”) Security Rule due to the data breaches the entities experienced. USR reported that between August 23, 2018, and December 8, 2018, a database containing the electronic protected health information (“ePHI”) of 2,903 individuals was accessed by an unauthorized third party who was able to delete the ePHI in the database. Elgon and NESG each discovered a ransomware attack in March 2023, which affected the protected health information (“PHI”) of approximately 31,248 individuals and 15,298 individuals, respectively. Solara experienced a phishing attack that allowed an unauthorized third party to gain access to eight of Solara’s employees’ email accounts between April and June 2019, resulting in the compromise of 114,007 individuals’ ePHI. As part of their settlements, each of the entities is required to pay a fine to OCR: USR $337,750, Elgon $80,000, Solara $3,000,000, and NESG $10,000. Additionally, each of the entities is required to implement certain data security measures such as conducting a risk analysis, implementing a risk management plan, maintaining written policies and procedures to comply with HIPAA, and distributing such policies or providing training on such policies to its workforce.

Virginia Attorney General Sues TikTok for Addictive Feed and Allowing Chinese Government to Access Data: Virginia Attorney General Jason Miyares announced his office had filed a lawsuit against TikTok and ByteDance Ltd, the China-based parent company of TikTok. The lawsuit alleges that TikTok was intentionally designed to be addictive for adolescent users and that the company deceived parents about TikTok content, including by claiming the app is appropriate for children over the age of 12 in violation of the Virginia Consumer Protection Act.

INTERNATIONAL LAWS & REGULATIONS

UK ICO Publishes Guidance on Pay or Consent Model: On January 23, the UK’s Information Commissioner’s Office (“ICO”) published its Guidance for Organizations Implementing or Considering Implementing Consent or Pay Models. The guidance is designed to clarify how organizations can deploy ‘consent or pay’ models in a manner that gives users meaningful control over the privacy of their information while still supporting their economic viability. The guidance addresses the requirements of applicable UK laws, including PECR and the UK GDPR, and provides extensive guidance as to how appropriate fees may be calculated and how to address imbalances of power. The guidance includes a set of factors that organizations can use to assess their consent models and includes plans to further engage with online consent management platforms, which are typically used by businesses to manage the use of essential and non-essential online trackers. Businesses with operations in the UK should carefully review their current online tracker consent management tools in light of this new guidance.

EU Commission to Pay Damages for Sending IP Address to Meta: The European General Court has ordered the European Commission to pay a German citizen, Thomas Bindl, €400 in damages for unlawfully transferring his personal data to the U.S. This decision sets a new precedent regarding EU data protection litigation. The court found that the Commission breached data protection regulations by operating a website with a “sign in with Facebook” option. This resulted in Bindl’s IP address, along with other data, being transferred to Meta without ensuring adequate safeguards were in place. The transfer happened during the transition period between the EU-U.S. Privacy Shield and the EU-U.S. Data Protection Framework. The court determined that this left Bindl in a position of uncertainty about how his data was being processed. The ruling is significant because it recognizes “intrinsic harm” and may pave the way for large-scale collective redress actions.

European Data Protection Board Releases AI Bias Assessment and Data Subject Rights Tools: The European Data Protection Board (“EDPB”) released two AI tools as part of the AI: Complex Algorithms and effective Data Protection Supervision Projects. The EDPB launched the project in the context of the Support Pool of Experts program at the request of the German Federal Data Protection Authority. The Support Pool of Experts program aims to help data protection authorities increase their enforcement capacity by developing common tools and giving them access to a wide pool of experts. The new documents address best practices for bias evaluation and the effective implementation of data subject rights, specifically the rights to rectification and erasure when AI systems have been developed with personal data.

European Data Protection Board Adopts New Guidelines on Pseudonymization: The EDPB released new guidelines on pseudonymization for public consultation (the “Guidelines”). Although pseudonymized data still constitutes personal data under the GDPR, pseudonymization can reduce the risks to the data subjects by preventing the attribution of personal data to natural persons in the course of the processing of the data, and in the event of unauthorized access or use. In certain circumstances, the risk reduction resulting from pseudonymization may enable controllers to rely on legitimate interests as the legal basis for processing personal data under the GDPR, provided they meet the other requirements, or help guarantee an essentially equivalent level of protection for data they intend to export. The Guidelines provide real-world examples illustrating the use of pseudonymization in various scenarios, such as internal analysis, external analysis, and research.

CJEU Issues Ruling on Excessive Data Subject Requests: On January 9, the Court of Justice of the European Union (“CJEU”) issued its ruling in the case Österreichische Datenschutzbehörde (C‑416/23). The primary question before the Court was when a European data protection authority may deny consumer requests due to their excessive nature. Rather than specifying an arbitrary numerical threshold of requests received, the CJEU found that authorities must consider the relevant facts to determine whether the individual submitting the request has “an abusive intention.” While the number of requests submitted may be a factor in determining this intention, it is not the only factor. Additionally, the CJEU emphasized that Data Protection Authorities should strongly consider charging a “reasonable fee” for handling requests they suspect may be excessive prior to simply denying them.

Daniel R. Saeedi, Rachel L. Schaller, Gabrielle N. Ganz, Ana Tagvoryan, P. Gavin Eastgate, Timothy W. Dickens, Jason C. Hirsch, Tianmei Ann Huang, Adam J. Landy, Amanda M. Noonan, and Karen H. Shin contributed to this article.

Health-e Law Episode 15: Healthcare Security is Homeland Security with Jonathan Meyer, former DHS GC and Partner at Sheppard Mullin [Podcast]

Welcome to Health-e Law, Sheppard Mullin’s podcast exploring the fascinating health tech topics and trends of the day. In this episode, Jonathan Meyer, former general counsel of the Department of Homeland Security and Leader of Sheppard Mullin’s National Security Team, joins us to discuss cyberthreats and data security from the perspective of national security, including the implications for healthcare.

What We Discussed in This Episode

How do cyberattacks and data privacy impact national security?

How can personal data be weaponized to cause harm to an individual, and why should people care?

Many adults are aware they need to keep their own personal data secure for financial reasons, but what about those who aren’t financially active, such as children?

How is healthcare particularly vulnerable to cyberthreats, even outside the hospital setting?

What can stakeholders do better at the healthcare level?

What can individuals do better to ensure their personal data remains secure?

HHS Publishes Notice of Proposed Rulemaking to Amend HIPAA Security Rule Requirements – Comments Due March 7, 2025

Summary

On December 27, 2024, the U.S. Department of Health and Human Services, Office for Civil Rights (“HHS”) published its Notice of Proposed Rulemaking (“NPRM”) titled HIPAA Security Rule to Strengthen the Cybersecurity of Electronic Protected Health Information. HHS seeks comments on proposed modifications to the Security Standards for the Protection of Electronic Protected Health Information comprising 45 C.F.R. Parts 160 and 164, Subpart C, commonly known as the “Security Rule”, to address modern breach and cybersecurity risks to electronic protected health information (“ePHI”)[1] and common deficiencies observed by HHS in Security Rule compliance investigations, and to incorporate current industry best practices[2] and court decisions affecting enforcement of the Security Rule[3].[4] As summarized below, the proposed modifications signal HHS’s commitment to aligning the Security Rule requirements with current cybersecurity standards and addressing areas of non-compliance with more prescriptive measures to enhance ePHI security in the face of evolving cyber threats and technological advancements. HHS invites interested parties to submit comments by March 7, 2025.

Two weeks after the NPRM was published in the Federal Register, President Trump issued an Executive Order requiring a “Regulatory Freeze Pending Review.” The regulatory freeze makes the fate of the proposed Security Rule amendments unclear. If the proposed Security Rule amendments proceed unchanged, regulated entities and health plan sponsors could incur significant combined costs, which HHS estimates at approximately $9.3 billion in the first year of implementation.[5]

HIPAA Framework

The statutory and regulatory framework that governs the privacy and security of (most) health information in the United States is codified under the Health Insurance Portability and Accountability Act of 1996, Public Law 104-191, enacted on August 21, 1996 (“HIPAA”). The Health Information Technology for Economic and Clinical Health Act (“HITECH Act”), enacted as part of the American Recovery and Reinvestment Act of 2009, Public Law 111-5, signed into law on February 17, 2009, changed and added requirements to this framework. Additionally, the Genetic Information Nondiscrimination Act of 2008 (“GINA”), Public Law 110-233, signed into law on May 21, 2008, included provisions governing the use of genetic data.

In addition to the Security Rule, HHS issued regulations under HIPAA on Standards for Privacy of Individually Identifiable Health Information comprising 45 C.F.R. Parts 160 and 164, Subparts A and E (“Privacy Rule”), Standards for Notification in the Case of Breach of Unsecured Protected Health Information comprising 45 C.F.R. Parts 160 and 164, Subpart D (“Breach Notification Rule”), and Rules for Compliance and Investigations, Impositions of Civil Monetary Penalties, and Procedures for Hearings comprising 45 C.F.R. Part 160, Subparts C, D, and E (“Enforcement Rule”). These rules, developed through successive waves of the administrative rulemaking process, are extensive and complex.

Summary of the NPRM and Specific Requests for Comment

The Security Rule applies only to ePHI transmitted by or maintained in electronic media by covered entities and business associates (“regulated entities”). The NPRM proposes several modifications to the Security Rule in recognition of the “significant changes in which health care is provided and how the health care industry operates”[6] since the Security Rule was last revised in 2013. As is common for significant rulemaking, HHS requests comments on its proposed rule changes, including perceived benefits, drawbacks, unintended consequences, and specific considerations for each proposal.

Security Rule Requirements Are Not Optional. The Security Rule currently distinguishes between “addressable” and “required” implementation specifications to provide regulated entities with flexibility to implement administrative, physical, and technical safeguards that are reasonable and appropriate based on their risk analysis, risk mitigation strategies, existing security measures, and implementation costs. HHS has observed that, despite extensive guidance and regulation, some regulated entities have incorrectly interpreted “addressable” implementation specifications to be “optional” requirements, resulting in compliance gaps and increased risks to ePHI.[7] HHS proposes to eliminate the distinction between “addressable” and “required” implementation specifications to simplify and clarify the baseline mandatory security measures that regulated entities must meet in order to demonstrate they are reasonably and appropriately safeguarding ePHI.[8] With respect to this proposed modification, HHS requests comment on whether removing the distinction between required and addressable implementation specifications would result in unintended negative consequences for regulated entities, and seeks recommendations for how HHS may clarify that regulated entities are required to implement the security measures proposed in the NPRM.[9]

Routine Review and Testing of Security Measures. The proposed amendments to the Security Rule would require regulated entities to review and test the effectiveness of their required security measures “on a specified cadence” and to modify them as reasonable and appropriate.[10] The proposed methods for reviewing and testing security measures include undertaking tabletop exercises to assess how effectively personnel follow incident response and security procedures, conducting knowledge assessments after training on policies and procedures, and reviewing system logs and access records to evaluate whether personnel are properly complying with policies and procedures governing access to ePHI.[11]

Data Inventory, Network Map, and Risk Analysis.[12] The proposed Security Rule amendments include replacing the existing standard for security management process (45 C.F.R. 164.308(a)(1)) with a new requirement that a regulated entity conduct and maintain a written technology asset inventory. This inventory would demonstrate the regulated entity’s awareness of the location of ePHI it records, maintains, or processes. Additionally, regulated entities would be required to maintain a network map of their “electronic information systems”, including all technology assets that may impact the confidentiality, integrity, or availability of ePHI. The network map must detail the movement of ePHI within the regulated entity’s electronic information systems, showing how ePHI enters, exits, and is accessed from outside the electronic information systems. HHS also proposes to require a regulated entity to use information from the data inventory and network map to conduct a risk analysis to identify the potential risks and vulnerabilities to ePHI and related electronic information systems.[13] These proposed changes to the administrative safeguard requirements align with HHS’s objective of harmonizing HIPAA standards with familiar concepts from other data privacy and security frameworks and laws[14] that require organizations to understand the flow of the data they process. The changes also aim to enhance a regulated entity’s ability to identify and manage risks to the confidentiality, integrity, and availability of ePHI.
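For compliance and IT teams considering how the inventory, network map, and risk analysis might fit together in practice, the following is a minimal sketch in Python. It is illustrative only: the NPRM does not prescribe any schema or tooling, and every name here (Asset, Flow, EXTERNAL, the example systems) is a hypothetical assumption rather than anything drawn from the proposed rule.

```python
from dataclasses import dataclass

# Hypothetical record types for illustration; the NPRM prescribes no schema.
@dataclass(frozen=True)
class Asset:
    name: str
    stores_ephi: bool

@dataclass(frozen=True)
class Flow:
    source: str
    destination: str
    carries_ephi: bool

# Assumed marker for anything outside the entity's electronic information systems.
EXTERNAL = "EXTERNAL"

def assets_in_scope(assets, flows):
    """Names of inventoried assets that store ePHI or sit on a flow carrying it."""
    known = {a.name for a in assets}
    scope = {a.name for a in assets if a.stores_ephi}
    for f in flows:
        if f.carries_ephi:
            scope.update(n for n in (f.source, f.destination) if n in known)
    return sorted(scope)

def boundary_flows(flows):
    """ePHI flows that enter or exit the entity's systems."""
    return [f for f in flows
            if f.carries_ephi and EXTERNAL in (f.source, f.destination)]

def unmapped_endpoints(assets, flows):
    """Flow endpoints that are missing from the asset inventory."""
    known = {a.name for a in assets} | {EXTERNAL}
    return sorted({n for f in flows
                   for n in (f.source, f.destination) if n not in known})

# Example data (entirely made up).
assets = [
    Asset("ehr-db", stores_ephi=True),
    Asset("patient-portal", stores_ephi=False),
    Asset("print-server", stores_ephi=False),
]
flows = [
    Flow("patient-portal", "ehr-db", carries_ephi=True),
    Flow("ehr-db", EXTERNAL, carries_ephi=True),
    Flow("print-server", "fax-gateway", carries_ephi=False),
]

print(assets_in_scope(assets, flows))
print([(f.source, f.destination) for f in boundary_flows(flows)])
print(unmapped_endpoints(assets, flows))
```

In this sketch, the in-scope list and boundary flows would feed the risk analysis, while any unmapped endpoint flags a gap between the network map and the written inventory.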

Encryption as a Standard.[15] HHS proposes to redesignate encryption and decryption from an implementation specification for access control (45 C.F.R. 164.312(a)) and transmission security (45 C.F.R. 164.312(e)) to a standalone standard for technical safeguards in order “to increase its visibility and prominence.” The proposed amendments would require a regulated entity to use widely accepted encryption standards to protect ePHI at rest and in transit, update encryption methods as standards evolve, and maintain up-to-date risk analyses and security plans, subject to limited exceptions. For example, if a regulated entity is currently using a technology asset that does not support prevailing encryption standards, the regulated entity may still be in compliance with the encryption requirement provided that it “establish[es] a written plan to migrate ePHI to technology assets that support encryption consistent with prevailing [encryption] standards and to implement such a plan… within a reasonable and appropriate period of time.”[16] Another proposed exception would be when a regulated entity is transmitting unencrypted ePHI in response to an individual’s request pursuant to 45 C.F.R. 164.524 (HIPAA Right of Access), wherein the individual instructs the regulated entity to submit responsive data in an unencrypted format (e.g., some types of text messaging, instant messaging, or via an unencrypted app).[17]
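As a rough illustration of how the proposed encryption standard and the two example exceptions described above might be triaged internally, the following Python sketch classifies a single ePHI handling scenario. It is an assumption-laden simplification, not a legal test: the field names and status labels are hypothetical, only the two exceptions mentioned here are modeled, and the sketch does not evaluate whether a migration plan is implemented “within a reasonable and appropriate period of time.”

```python
from dataclasses import dataclass

# Hypothetical fields for illustration; none of these names come from the NPRM.
@dataclass
class EphiHandling:
    encrypted: bool
    asset_supports_prevailing_encryption: bool = True
    has_written_migration_plan: bool = False
    individual_requested_unencrypted: bool = False  # Right of Access request

def encryption_status(h: EphiHandling) -> str:
    """Classify one scenario against the proposed standard (simplified)."""
    if h.encrypted:
        return "meets standard"
    if h.individual_requested_unencrypted:
        return "possible exception: right of access request"
    if (not h.asset_supports_prevailing_encryption
            and h.has_written_migration_plan):
        return "possible exception: written migration plan"
    return "gap: review required"

# Example scenarios (entirely made up).
legacy_with_plan = EphiHandling(
    encrypted=False,
    asset_supports_prevailing_encryption=False,
    has_written_migration_plan=True,
)
legacy_without_plan = EphiHandling(
    encrypted=False,
    asset_supports_prevailing_encryption=False,
)

print(encryption_status(legacy_with_plan))
print(encryption_status(legacy_without_plan))
```

A result of “possible exception” in this sketch is only a prompt for documentation and legal review, since whether an exception actually applies would depend on the final rule text and the entity’s specific facts.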